A Systematic Guide to Initial Data Analysis (IDA): Foundational Steps for Robust Drug Development Research

Abstract

This guide provides researchers, scientists, and drug development professionals with a comprehensive framework for Initial Data Analysis (IDA), a critical but often overlooked phase that ensures data integrity before formal statistical testing. It systematically addresses the foundational principles of IDA, distinguishing it from exploratory analysis and emphasizing its role in preparing analysis-ready datasets. The article details methodological workflows for data cleaning and screening, offers solutions for common troubleshooting scenarios, and establishes validation protocols to ensure compliance and reproducibility. By bringing together these four elements (foundations, methods, troubleshooting, and validation), the guide aims to equip professionals with the tools to enhance research transparency, prevent analytical pitfalls, and build a solid foundation for reliable, data-driven decisions in biomedical and clinical research.

What is Initial Data Analysis? Core Principles and Workflow for Biomedical Researchers

Within the rigorous framework of initial rate data analysis research, the first analytical step is not merely exploration but precise, hypothesis-driven quantification. This step is Initial Data Analysis (IDA), a confirmatory process fundamentally distinct from Exploratory Data Analysis (EDA). While EDA involves open-ended investigation to "figure out what to make of the data" and tease out patterns [1], IDA is a targeted, quantitative procedure designed to extract a specific, model-ready parameter—the initial rate—from the earliest moments of a reaction or process. In chemical kinetics, the initial rate is defined as the instantaneous rate of reaction at the very beginning when reactants are first mixed, typically measured when reactant concentrations are highest [2]. This guide frames IDA within a broader thesis on research methodology, arguing that correctly defining and applying IDA is the cornerstone for generating reliable, actionable kinetic and pharmacological models, particularly for researchers and drug development professionals who depend on accurate rate constants and efficacy predictions for decision-making.

Core Definitions: IDA vs. EDA

The conflation of IDA with EDA represents a critical misunderstanding of the data analysis pipeline. Their purposes, methods, and outputs are distinctly different, as summarized in the table below.

Table 1: Fundamental Distinctions Between Initial Data Analysis (IDA) and Exploratory Data Analysis (EDA)

| Aspect | Initial Data Analysis (IDA) | Exploratory Data Analysis (EDA) |

|---|---|---|

| Primary Goal | To accurately determine a specific, quantitative parameter (the initial rate) for immediate use in model fitting and parameter estimation. | To understand the data's broad structure, identify patterns, trends, and anomalies, and generate hypotheses [1]. |

| Theoretical Drive | Strongly hypothesis- and model-driven. Analysis is guided by a predefined kinetic or pharmacological model. | Data-driven and open-ended. Seeks to discover what the data can reveal without a rigid prior model [1]. |

| Phase in Workflow | The crucial first step in confirmatory analysis, immediately following data collection. | The first stage of the overall analysis process, preceding confirmatory analysis [1]. |

| Key Activities | Measuring slope at t=0 from high-resolution early time-course data; calculating rates from limited initial points; applying the method of initial rates [3]. | Visualizing distributions, identifying outliers, checking assumptions, summarizing data, and spotting anomalies [1]. |

| Outcome | A quantitative estimate (e.g., rate ± error) for a key parameter, ready for use in subsequent modeling (e.g., determining reaction order). | Insights, questions, hypotheses, and an informed direction for further, more specific analysis. |

| Analogy | Measuring the precise launch velocity of a rocket. | Surveying a landscape to map its general features. |

Methodological Foundation: The Quantitative Basis of IDA

The mathematical and procedural rigor of IDA is exemplified by the Method of Initial Rates in chemical kinetics. This method systematically isolates the effect of each reactant's concentration on the reaction rate.

Protocol: The Method of Initial Rates [3]

- Design Experiments: Perform a series of reactions where the initial concentration of only one reactant is varied at a time, while all others are held constant.

- Measure Initial Rate: For each reaction, measure the concentration of a reactant or product over a very short period immediately after mixing. The initial rate is calculated from the slope of the tangent to the concentration-versus-time curve at time zero [2].

- Analyze Data: Assume a rate law of the form `rate = k [A]^α [B]^β`. Compare rates from two experiments where only `[A]` changes: `rate_2 / rate_1 = ([A]_2 / [A]_1)^α`. Solve for the order `α`. Repeat for reactant `B`.

- Determine Rate Constant: Once the orders (`α`, `β`) are known, substitute the initial rate and concentrations from any run into the rate law to solve for the rate constant `k`.

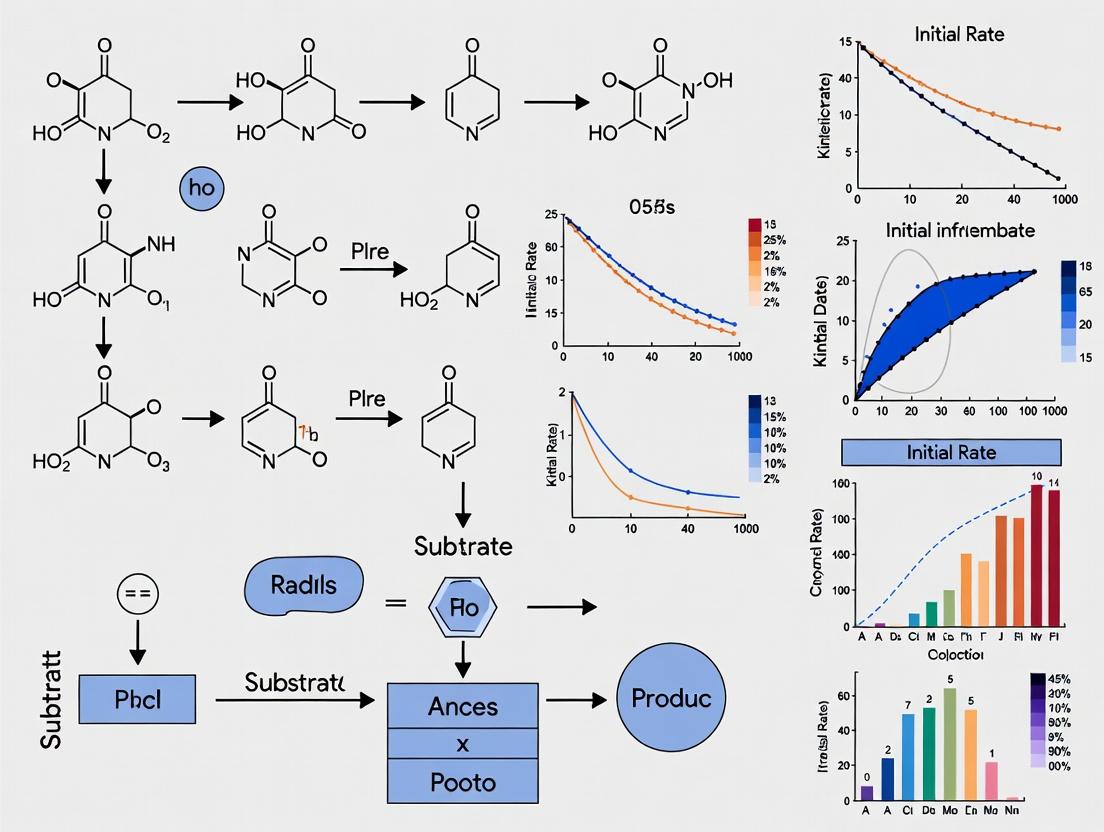

The following diagram illustrates this core IDA workflow, highlighting its sequential, confirmatory logic.

Table 2: Example Initial Rate Data and Analysis for a Reaction A + B → Products [3]

| Run | [A]₀ (M) | [B]₀ (M) | Initial Rate (M/s) | Analysis Step |

|---|---|---|---|---|

| 1 | 0.0100 | 0.0100 | 0.0347 | Baseline |

| 2 | 0.0200 | 0.0100 | 0.0694 | Compare Run 2 & 1: 0.0694/0.0347 = (0.02/0.01)^α → 2 = 2^α → α = 1 |

| 3 | 0.0200 | 0.0200 | 0.2776 | Compare Run 3 & 2: 0.2776/0.0694 = (0.02/0.01)^β → 4 = 2^β → β = 2 |

| Result | | | | Rate Law: rate = k [A]¹[B]²; Overall Order: 3 |
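The arithmetic in Table 2 can be reproduced in a few lines. The sketch below is only an illustration of the ratio method: the function names `reaction_order` and `rate_constant` are made up for this example, and the data are copied from the table.

```python
import math

# Initial-rate data copied from Table 2: [A]0 and [B]0 in M, rate in M/s.
runs = {
    1: {"A": 0.0100, "B": 0.0100, "rate": 0.0347},
    2: {"A": 0.0200, "B": 0.0100, "rate": 0.0694},
    3: {"A": 0.0200, "B": 0.0200, "rate": 0.2776},
}

def reaction_order(run_hi, run_lo, species):
    """Order from two runs that differ only in the concentration of `species`."""
    rate_ratio = run_hi["rate"] / run_lo["rate"]
    conc_ratio = run_hi[species] / run_lo[species]
    return math.log(rate_ratio) / math.log(conc_ratio)

alpha = reaction_order(runs[2], runs[1], "A")  # only [A] changes between runs 1 and 2
beta = reaction_order(runs[3], runs[2], "B")   # only [B] changes between runs 2 and 3

def rate_constant(run, alpha, beta):
    """Solve rate = k [A]^alpha [B]^beta for k using a single run."""
    return run["rate"] / (run["A"] ** alpha * run["B"] ** beta)

# Averaging k over all runs gives a simple consistency check on the fitted orders.
k_values = [rate_constant(run, alpha, beta) for run in runs.values()]
k_mean = sum(k_values) / len(k_values)

print(f"alpha = {alpha:.2f}, beta = {beta:.2f}, k = {k_mean:.3g} M^-2 s^-1")
```

For the table's data every run gives the same rate constant, about 3.47 × 10⁴ M⁻² s⁻¹, which is what a correctly assigned rate law should produce.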

Advanced Applications: IDA in Drug Discovery and Development

The principle of IDA extends beyond basic kinetics into high-stakes drug development, where early, accurate quantification is paramount.

4.1 Predicting Drug Combination Efficacy with IDACombo

A pivotal application is the IDACombo framework for predicting cancer drug combination efficacy. It operates on the principle of Independent Drug Action (IDA), which hypothesizes that a patient's benefit from a combination is equal to the effect of the single most effective drug in that combination for them [4]. The IDA-based analysis uses monotherapy dose-response data to predict combination outcomes, bypassing the need for exhaustive combinatorial testing.

Protocol: IDACombo Prediction Workflow [4]

- Input Monotherapy Data: Collect high-throughput screening data for individual drugs across a panel of cancer cell lines (e.g., from GDSC or CTRPv2 databases).

- Model Dose-Response: For each drug-cell line pair, fit a curve (e.g., sigmoidal Emax model) to calculate viability at a clinically relevant concentration.

- Apply IDA Principle: For a given combination in a specific cell line, the predicted combination viability is the minimum viability (i.e., best effect) observed among the individual drugs in the combination.

- Validate Predictions: Compare predicted combination viabilities against experimentally measured ones from separate combination screens (e.g., NCI-ALMANAC).

This workflow translates a qualitative concept (independent action) into a quantitative, predictive IDA tool, as shown below.

Table 3: Validation Performance of IDACombo Predictions [4]

| Validation Dataset | Comparison | Correlation (Pearson's r) | Key Conclusion |

|---|---|---|---|

| NCI-ALMANAC (In-sample) | Predicted vs. Measured (~5000 combos) | 0.93 | IDA model accurately predicts most combinations in vitro. |

| Clinical Trials (26 first-line trials) | Predicted success vs. Actual trial outcome | >84% Accuracy | IDA framework has strong clinical relevance for predicting trial success. |
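The "Apply IDA Principle" and "Validate Predictions" steps of the workflow above reduce to a minimum over single-drug viabilities followed by a correlation. The sketch below shows only that core idea. It is not the IDACombo implementation, and every name and number in it (`mono_viability`, `predict_combo_viability`, the cell lines, drugs, and measured values) is hypothetical.

```python
import numpy as np

# Hypothetical monotherapy viabilities (fraction of control, 0-1) at a fixed,
# clinically relevant concentration. Rows are cell lines, columns are drugs.
cell_lines = ["line_1", "line_2", "line_3"]
drugs = ["drug_X", "drug_Y"]
mono_viability = np.array([
    [0.80, 0.40],
    [0.30, 0.90],
    [0.60, 0.55],
])

def predict_combo_viability(viability, drug_idx):
    """Independent Drug Action: per cell line, keep the lowest single-drug viability."""
    return viability[:, drug_idx].min(axis=1)

predicted = predict_combo_viability(mono_viability, [0, 1])

# Hypothetical measured combination viabilities, used only to illustrate validation.
measured = np.array([0.35, 0.33, 0.50])
pearson_r = np.corrcoef(predicted, measured)[0, 1]

for name, p, m in zip(cell_lines, predicted, measured):
    print(f"{name}: predicted {p:.2f}, measured {m:.2f}")
print(f"Pearson r = {pearson_r:.2f}")
```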

4.2 IDA in Model-Informed Drug Development (MIDD)

IDA principles are integral to MIDD, where quantitative models inform development decisions. A key impact is generating resource savings by providing robust early parameters that optimize later trials.

- Example: A population PK analysis (an IDA step) early in development can characterize elimination pathways, potentially waiving the need for a dedicated renal impairment study. One review found such MIDD applications yielded average savings of 10 months and $5 million per program [5].

The Scientist's Toolkit: Essential Reagents and Materials for IDA

Conducting robust IDA requires specialized tools to ensure precision, reproducibility, and scalability.

Table 4: Key Research Reagent Solutions for Initial Rate Studies

| Item | Function in IDA | Example Application / Note |

|---|---|---|

| Stopped-Flow Apparatus | Rapidly mixes reactants and monitors reaction progress within milliseconds. Essential for measuring true initial rates of fast biochemical reactions. | Studying enzyme kinetics or binding events. |

| High-Throughput Screening (HTS) Microplates | Enable parallel measurement of initial rates for hundreds of reactions under varying conditions (e.g., substrate concentration, inhibitor dose). | Running the method of initial rates for enzyme inhibitors. |

| Quenched-Flow Instruments | Mixes reactants and then abruptly stops (quenches) the reaction at precise, very short time intervals for analysis. | Capturing "snapshots" of intermediate concentrations at the initial reaction phase. |

| Precise Temperature-Controlled Cuvettes | Maintains constant temperature during rate measurements, as the rate constant k is highly temperature-sensitive (per Arrhenius equation). | Found in spectrophotometers and fluorimeters for kinetic assays. |

| Rapid-Kinetics Software Modules | Analyzes time-course data from the first few percent of reaction progress to automatically calculate initial velocities via tangent fitting or linear regression. | Integrated with instruments like plate readers or stopped-flow systems. |

| Validated Cell Line Panels & Viability Assays | Provide standardized, reproducible monotherapy response data, which is the critical input for IDA-based prediction models like IDACombo. | GDSC, CTRPv2, or NCI-60 panels with ATP-based (e.g., CellTiter-Glo) readouts [4]. |

Within the rigorous domain of drug development, the analysis of initial rate data from enzymatic or cellular assays is a cornerstone for elucidating mechanism of action, calculating potency (IC50/EC50), and predicting in vivo efficacy. Intelligent Data Analysis (IDA) transcends basic statistical computation, representing a systematic philosophical framework for extracting robust, reproducible, and biologically meaningful insights from complex kinetic datasets [6]. This guide delineates a standardized IDA workflow, framing it as an indispensable component of a broader thesis on initial rate data analysis. The core mission of this approach aligns with the IDA principle of promoting insightful ideas over mere performance metrics, ensuring that each analytical step is driven by a solid scientific motivation and contributes to a coherent narrative [6] [7]. For the researcher, implementing this workflow mitigates the risks of analytical bias, ensures data integrity, and transforms raw kinetic data into defensible conclusions that can guide critical development decisions.

Foundational Stage: Comprehensive Metadata and Data Architecture

The integrity of any IDA process is established before the first data point is collected. A meticulously designed metadata framework is non-negotiable for ensuring traceability, reproducibility, and context-aware analysis.

Metadata Schema Definition: A hierarchical metadata schema must be established, encompassing experimental context, sample provenance, and instrumental parameters. This is not merely administrative but a critical analytical asset.

Table 1: Essential Metadata Categories for Initial Rate Experiments

| Metadata Category | Specific Fields | Purpose in Analysis |

|---|---|---|

| Experiment Context | Project ID, Hypothesis, Analyst, Date | Links data to research question and responsible party for audit trails. |

| Biological System | Enzyme/Cell Line ID, Passage Number, Preparation Protocol | Controls for biological variability and informs model selection (e.g., cooperative vs. Michaelis-Menten). |

| Compound Information | Compound ID, Batch, Solvent, Stock Concentration | Essential for accurate dose-response modeling and identifying compound-specific artifacts. |

| Assay Conditions | Buffer pH, Ionic Strength, Temperature, Cofactor Concentrations | Enables normalization across batches and investigation of condition-dependent effects. |

| Instrumentation | Plate Reader Model, Detection Mode (Absorbance/Fluorescence), Gain Settings | Critical for assessing signal-to-noise ratios and validating data quality thresholds. |

| Data Acquisition | Measurement Interval, Total Duration, Replicate Map (technical/biological) | Defines the temporal resolution for rate calculation and the structure for variance analysis. |

Data Architecture & Pre-processing: Raw time-course data must be ingested into a structured environment. The initial step involves automated validation checks for instrumental errors (e.g., out-of-range absorbance, failed wells). Following validation, the primary feature extraction occurs: calculation of initial rates (v₀). This is typically achieved via robust linear regression on the early, linear phase of the progress curve. The resulting v₀ values, along with their associated metadata, form the primary dataset for all downstream analysis. This stage benefits from principles of workflow automation, where standardized scripts ensure consistent processing and eliminate manual transcription errors [8].
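One possible shape for the validation and feature-extraction step is sketched below. It assumes a Theil-Sen estimator (`scipy.stats.theilslopes`) as the robust regression, which is one choice among several. The function name `initial_rate`, the 10% early-phase cutoff, and the instrument limits are illustrative assumptions, not values from the source.

```python
import numpy as np
from scipy.stats import theilslopes

def initial_rate(time_s, signal, max_fraction=0.10, valid_range=(0.0, 3.0)):
    """Estimate v0 as the robust slope of the early part of a progress curve.

    max_fraction: assumed cutoff; only points whose signal change is within this
        fraction of the total observed change are used.
    valid_range: assumed instrument limits used to reject out-of-range readings.
    """
    time_s = np.asarray(time_s, dtype=float)
    signal = np.asarray(signal, dtype=float)

    # Automated validation check: reject curves with out-of-range readings.
    if np.any(signal < valid_range[0]) or np.any(signal > valid_range[1]):
        raise ValueError("signal outside the valid instrument range")

    # Keep only the early phase of the curve.
    change = np.abs(signal - signal[0])
    early = change <= max_fraction * change.max()
    if early.sum() < 3:
        raise ValueError("too few points in the early phase")

    # Theil-Sen slope with its confidence bounds (95% by default).
    slope, _intercept, low, high = theilslopes(signal[early], time_s[early])
    return slope, (low, high)

# Synthetic progress curve with a true initial slope of 0.01 signal units per second.
t = np.arange(0, 601, 1.0)
absorbance = 1.0 * (1.0 - np.exp(-0.01 * t))
v0, (v0_low, v0_high) = initial_rate(t, absorbance)
print(f"v0 = {v0:.4f} per s (95% bounds {v0_low:.4f} to {v0_high:.4f})")
```

The estimate comes out slightly below the true initial slope because the curve is already bending within the first 10% of the signal change. A tighter cutoff reduces that bias at the cost of fewer points.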

Core Analytical Phase: Modeling, Validation, and Interpretation

With a curated dataset, the core analytical phase applies statistical and kinetic models to test hypotheses and quantify effects.

Exploratory Data Analysis (EDA): Before formal modeling, EDA techniques are employed. This includes visualizing dose-response curves, assessing normality and homoscedasticity of residuals, and identifying potential outliers using methods like Grubbs' test. EDA informs the choice of appropriate error models for regression (e.g., constant vs. proportional error).

Kinetic & Statistical Modeling: The selection of the primary model is hypothesis-driven.

- Inhibitor Potency: Data is fitted to a four-parameter logistic (4PL) model to derive IC50 and Hill slope (nH). An nH ≠ 1 suggests cooperativity or multiple binding sites.

- Enzyme Kinetics: Substrate-velocity data is fitted to the Michaelis-Menten model to obtain KM and Vmax. More complex models (e.g., allosteric, substrate inhibition) are tested if statistically justified by an F-test comparing residual sums of squares.

- Advanced Context: For time-dependent inhibition, data is modeled using progress curve analysis or the kobs/KI method.
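As an illustration of the inhibitor-potency case, the sketch below fits a 4PL curve with `scipy.optimize.curve_fit`. The data are synthetic, and the parameterization, starting values, and bounds are assumptions made for this example rather than a prescribed procedure.

```python
import numpy as np
from scipy.optimize import curve_fit

def four_pl(conc, bottom, top, ic50, hill):
    """Four-parameter logistic: response falls from `top` to `bottom` as conc rises."""
    return bottom + (top - bottom) / (1.0 + (conc / ic50) ** hill)

# Synthetic initial rates (% of uninhibited control) at nine inhibitor concentrations (µM).
conc = np.array([0.001, 0.01, 0.03, 0.1, 0.3, 1.0, 3.0, 10.0, 100.0])
rng = np.random.default_rng(0)
rate = four_pl(conc, bottom=5.0, top=100.0, ic50=0.5, hill=1.2) + rng.normal(0.0, 1.0, conc.size)

# Data-derived starting values; bounds keep IC50 positive and the Hill slope plausible.
p0 = [rate.min(), rate.max(), np.median(conc), 1.0]
bounds = ([-np.inf, -np.inf, 1e-9, 0.1], [np.inf, np.inf, np.inf, 10.0])
params, cov = curve_fit(four_pl, conc, rate, p0=p0, bounds=bounds)

bottom, top, ic50, hill = params
std_err = np.sqrt(np.diag(cov))  # asymptotic standard errors
print(f"IC50 = {ic50:.3f} µM (SE {std_err[2]:.3f}), Hill slope = {hill:.2f}")
```

Fitting log10(IC50) instead of IC50 is a common alternative that tends to give more symmetric uncertainty estimates.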

Model Validation & Selection: A model's validity is not assumed but tested. Key techniques include:

- Residual Analysis: Plotting residuals vs. predicted values to detect systematic misfit.

- Bootstrap Confidence Intervals: Re-sampling the data (e.g., 1000 iterations) to generate confidence intervals for parameters like IC50. These are often more reliable than asymptotic standard errors from nonlinear regression, particularly when the parameter's sampling distribution is skewed.

- Information Criteria: Using metrics like the Akaike Information Criterion (AIC) for objective comparison of non-nested models, balancing goodness-of-fit with model complexity.
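The residual and information-criterion checks can be sketched as follows, comparing a Michaelis-Menten fit with a substrate-inhibition fit on synthetic data. The AIC expression is the usual least-squares form under Gaussian errors, up to an additive constant that is the same for both models. Model names, starting values, and data are assumptions for this example.

```python
import numpy as np
from scipy.optimize import curve_fit

def michaelis_menten(s, vmax, km):
    return vmax * s / (km + s)

def substrate_inhibition(s, vmax, km, ksi):
    return vmax * s / (km + s * (1.0 + s / ksi))

def aic_least_squares(residuals, n_params):
    """AIC for a least-squares fit with Gaussian errors, up to an additive constant."""
    n = residuals.size
    rss = float(np.sum(residuals ** 2))
    return n * np.log(rss / n) + 2 * n_params

# Synthetic velocities generated from the substrate-inhibition model (illustration only).
s = np.array([1, 2, 5, 10, 20, 50, 100, 200, 500, 1000], dtype=float)  # µM
rng = np.random.default_rng(1)
v = substrate_inhibition(s, 100.0, 10.0, 200.0) + rng.normal(0.0, 1.0, s.size)

fits = {}
for name, model, p0 in [
    ("Michaelis-Menten", michaelis_menten, [v.max(), 10.0]),
    ("Substrate inhibition", substrate_inhibition, [v.max(), 10.0, 100.0]),
]:
    params, _ = curve_fit(model, s, v, p0=p0, bounds=(1e-9, np.inf))
    residuals = v - model(s, *params)  # plot these against model(s, *params) to spot misfit
    fits[name] = {
        "params": params,
        "residuals": residuals,
        "aic": aic_least_squares(residuals, len(params)),
    }

best = min(fits, key=lambda name: fits[name]["aic"])
for name, fit in fits.items():
    print(f"{name}: AIC = {fit['aic']:.1f}")
print(f"Preferred model: {best}")
```

Because the simpler model here is a limiting case of the richer one, an F-test on the residual sums of squares would also apply. AIC is shown because it extends to non-nested comparisons.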

Sensitivity and Robustness Analysis: This involves testing how key conclusions (e.g., "Compound A is 10x more potent than B") change with reasonable variations in data preprocessing (e.g., baseline correction method) or model assumptions. This step quantifies the analytical uncertainty surrounding biological findings.

Reporting and Knowledge Integration

The final phase transforms analytical results into actionable knowledge, ensuring clarity, reproducibility, and integration into the broader research continuum.

Dynamic Reporting: Modern IDA leverages tools that automate the generation of dynamic reports [8]. Using platforms like R Markdown or Jupyter Notebooks, analysis code, results (tables, figures), and interpretive text are woven into a single document. A change in the raw data or analysis parameter automatically updates all downstream results, ensuring report consistency. Key report elements include:

- A summary of the experimental hypothesis and metadata.

- Clear presentation of primary results (e.g., a table of fitted parameters with confidence intervals).

- Diagnostic plots (fitted curves with data points, residual plots).

- A statement of conclusions and limitations.

Metadata-Enabled Knowledge Bases: The structured metadata from the initial phase allows results to be stored not as isolated files but as queriable entries in a laboratory information management system (LIMS) or internal database. This enables meta-analyses, such as tracking the potency of a lead compound across different assay formats or cell lines over time, directly feeding into structure-activity relationship (SAR) campaigns.

Table 2: Comparison of Common Statistical Models for Initial Rate Data

| Model | Typical Application | Key Output Parameters | Assumptions & Considerations |

|---|---|---|---|

| Four-Parameter Logistic (4PL) | Dose-response analysis for inhibitors/agonists. | Bottom, Top, IC50/EC50, Hill Slope (nH). | Assumes symmetric curve. Hill slope ≠ 1 indicates cooperativity. |

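| Michaelis-Menten | Enzyme kinetics under steady-state conditions. | KM (substrate concentration at half-maximal velocity, often read as apparent affinity), Vmax (maximal velocity). | Assumes steady-state or rapid-equilibrium conditions, a single substrate, and no inhibition. |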

| Substrate Inhibition | Enzyme kinetics where high [S] reduces activity. | KM, Vmax, KSI (substrate inhibition constant). | Used when velocity decreases after optimal [S]. |

| Progress Curve Analysis | Time-dependent inhibition kinetics. | kinact, KI (inactivation parameters). | Models the continuous change of rate over time. |

| Linear Mixed-Effects | Hierarchical data (e.g., replicates from multiple days). | Fixed effects (mean potency), Random effects (day-to-day variance). | Explicitly models sources of variance, providing more generalizable estimates. |

The Scientist's Toolkit: Essential Reagents and Materials

Implementing a robust IDA workflow requires both analytical software and precise laboratory materials.

Table 3: Key Research Reagent Solutions for Initial Rate Analysis

| Category | Item | Function in IDA Workflow |

|---|---|---|

| Measurement & Detection | Purified Recombinant Enzyme / Validated Cell Line | The primary biological source; consistency here is paramount for reproducible kinetics. |

| Measurement & Detection | Chromogenic/Fluorogenic Substrate (e.g., pNPP, AMC conjugates) | Generates the measurable signal proportional to activity. Must have high specificity and turnover rate. |

| Measurement & Detection | Reference Inhibitor (e.g., well-characterized inhibitor for the target) | Serves as a positive control for assay performance and validates the analysis pipeline. |

| Sample Preparation | Assay Buffer with Cofactors/Mg²⁺ | Maintains optimal and consistent enzymatic activity. Variations directly impact KM and Vmax. |

| Sample Preparation | DMSO (High-Quality, Low Water Content) | Universal solvent for test compounds. Batch consistency prevents compound precipitation and activity artifacts. |

| Sample Preparation | Liquid Handling Robotics (e.g., pipetting workstation) | Ensures precision and accuracy in serial dilutions and plate setup, minimizing technical variance. |

| Data Analysis | Statistical Software (e.g., R, Python with SciPy, or Prism) | Platform for executing nonlinear regression, bootstrapping, and generating publication-quality plots. |

| Data Analysis | Intelligent Document Automation (IDA) Software [8] | For automating report generation, ensuring results are dynamically linked to data and analyses. |

| Data Analysis | Laboratory Information Management System (LIMS) | Central repository for linking raw data, metadata, analysis results, and final reports. |

| Reporting & Collaboration | Electronic Laboratory Notebook (ELN) | Captures the experimental narrative, protocols, and links to analysis files for full reproducibility. |

| Reporting & Collaboration | Data Visualization Tools | Enables creation of clear, informative graphs that accurately represent the statistical analysis. |

The "Zeroth Problem" in scientific research refers to the fundamental and often overlooked challenge of ensuring that collected data is intrinsically aligned with the core research objective from the outset. This precedes all subsequent analysis (the "first" problem) and represents a critical alignment phase between experimental design, data generation, and the hypothesis to be tested. In the specific context of initial rate data analysis, the Zeroth Problem manifests as the meticulous process of designing kinetic experiments to produce data that can unambiguously reveal the mathematical form of the rate law, which describes how reaction speed depends on reactant concentrations [9].

Failure to solve the Zeroth Problem results in data that is structurally misaligned—it may be precise and reproducible but ultimately incapable of answering the key research question. For instance, in drug development, kinetic studies of enzyme inhibition provide the foundation for dosing and efficacy predictions. Misaligned initial rate data can lead to incorrect mechanistic conclusions about a drug candidate's behavior, with significant downstream costs [9]. This guide frames the Zeroth Problem within the broader thesis of rigorous initial rate research, providing methodologies to align data generation with the objective of reliable kinetic parameter determination.

Methodological Frameworks for Data Alignment

Solving the Zeroth Problem requires a framework that connects the conceptual research goal to practical data structure. This involves two aligned layers: the experimental design layer, which governs how data is generated, and the analytical readiness layer, which ensures the data's properties are suited for robust statistical inference.

Experimental Design for Alignment: The core principle is the systematic variation of parameters. In initial rate analysis, this translates to the method of initial rates, where experiments are designed to isolate the effect of each reactant [9]. One reactant's concentration is varied while others are held constant, and the initial reaction rate is measured. This design generates a data structure where the relationship between a single variable and the rate is clearly exposed, directly serving the objective of determining individual reaction orders.

Analytical Readiness and Preprocessing: Data must be structured to meet the assumptions of the intended analytical models. For kinetic data, this involves verifying conditions like the constancy of the measured initial rate period and the absence of product inhibition. In other fields, such as text-based bioactivity analysis, data can suffer from zero-inflation, where an excess of zero values (e.g., most compounds show no effect against a target) violates the assumptions of standard count models. Techniques like strategic undersampling of the majority zero-class can rebalance the data, improving model fit and interpretability without altering the underlying analytical goal, thus solving a common Zeroth Problem in high-dimensional biological data [10].

The following table summarizes key data alignment techniques applicable across domains:

Table 1: Techniques for Aligning Data with Analytical Objectives

| Technique | Core Principle | Application Context | Addresses Zeroth Problem By |

|---|---|---|---|

| Systematic Parameter Variation [9] | Isolating the effect of a single independent variable by holding others constant. | Experimental kinetics, dose-response studies. | Generating data where causal relationships are distinguishable from correlation. |

| Undersampling for Zero-Inflated Data [10] | Strategically reducing over-represented classes (e.g., zero counts) to balance the dataset. | Text mining, rare event detection, sparse biological activity data. | Creating a data distribution that meets the statistical assumptions of count models (Poisson, Negative Binomial). |

| Exploratory Data Analysis (EDA) [11] | Using visual and statistical summaries to understand data structure, patterns, and anomalies before formal modeling. | All research domains, as a first step in analysis. | Identifying misalignment early, such as unexpected outliers, non-linear trends, or insufficient variance. |

| Cohort Analysis [11] | Grouping subjects (e.g., experiments, patients) by shared characteristics or time periods and analyzing their behavior over time. | Clinical trial data, longitudinal studies, user behavior analysis. | Ensuring temporal or group-based trends are preserved and can be interrogated by the analytical model. |

Experimental Protocol: Initial Rate Determination for Kinetic Order

This protocol provides the definitive method for generating data aligned with the objective of deducing a rate law. It solves the Zeroth Problem for chemical kinetics by design [9].

Materials and Reagents

- Reactants: High-purity compounds of known concentration. Prepare stock solutions accurately.

- Solvent: Consistent, purified solvent for all trials. Control for pH and ionic strength if relevant.

- Instrumentation: Spectrophotometer, pH meter, calorimeter, or chromatograph capable of rapid data acquisition in the first 2-10% of reaction progress.

- Environment: Thermostated water bath or chamber to maintain constant temperature (±0.1°C) across all experiments.

Step-by-Step Procedure

- Formulate Hypothetical Rate Law: Propose a general rate law, `Rate = k[A]^x[B]^y`, where `x` and `y` are the unknown orders to be determined [9].

- Design Experiment Matrix: Create a series of at least 5-7 experimental trials.

  - Trials 1-3: Vary the concentration of reactant A (e.g., 0.01 M, 0.02 M, 0.04 M) while holding B at a fixed, excess concentration.

  - Trials 4-6: Vary the concentration of reactant B while holding A at the same fixed concentration.

  - Trial 7: A repeat of a baseline condition to assess reproducibility.

- Execute Kinetic Runs:

  - Thermostat all solutions to the target temperature for at least 10 minutes prior to mixing.

  - Initiate the reaction by rapid mixing of reactant solutions.

  - Immediately begin monitoring the chosen signal (absorbance, pH, etc.) with high temporal resolution.

  - Record data for a duration not exceeding 10% of the estimated total reaction time to ensure the "initial rate" condition.

- Calculate Initial Rate:

  - Plot the concentration of a reactant or product versus time for the initial segment.

  - Fit a straight line to the early, linear portion of the curve (typically R² > 0.99).

  - The slope of this line is the initial rate (e.g., in M s⁻¹).

Data Alignment and Order Determination

- For Reactant A: Compare trials where `[B]` is constant. The order `x` is found from the ratio `(Rate₂/Rate₁) = ([A]₂/[A]₁)^x`. For example, if doubling `[A]` doubles the rate, `x = 1`; if it quadruples the rate, `x = 2` [9].

- For Reactant B: Use trials where `[A]` is constant and apply the same ratio method to find `y`.

- Calculate Rate Constant (k): Substitute the determined orders and the data from any single trial into the rate law to solve for `k`. Report the average `k` from all trials.

- State Final Rate Law: Express the complete, experimentally determined rate law with numerical values for `k`, `x`, and `y`.

[Figure: Initial Rate Analysis Workflow]

Analytical Strategies for Aligned Data

Once aligned data is obtained through proper experimental design, selecting the correct analytical model is crucial. The choice depends on the data's structure and the research objective [11] [10].

Table 2: Analytical Models for Aligned Initial Rate and Sparse Data

| Model | Best For | Key Assumption | Solution to Zeroth Problem |

|---|---|---|---|

| Linear Regression on Transformed Data [9] | Initial rate data where orders are suspected to be simple integers (0,1,2). Plotting log(Rate) vs log(Concentration) yields a line. | The underlying relationship is a power law. Linearization does not distort the error structure. | Transforms the multiplicative power law into an additive linear relationship, making order determination direct and visual. |

| Non-Linear Least Squares Fitting | Directly fitting the rate law k[A]^x[B]^y to raw rate vs. concentration data. More robust for fractional orders. | Error in rate measurements is normally distributed. | Uses the raw, aligned data directly to find parameters that minimize overall error, providing statistically sound estimates of k, x, y. |

| Zero-Inflated Models (ZIP, ZINB) [10] | Sparse count data where excess zeros arise from two processes (e.g., a compound has no effect OR it has an effect but zero counts were observed in a trial). | Zero observations are a mixture of "structural" and "sampling" zeros. | Explicitly models the dual source of zeros, preventing the inflation from biasing the estimates of the count process parameters. |
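The first two rows of the table can be compared directly on one dataset. The sketch below fits the same power-law rate law once by linear regression on log-transformed data and once by nonlinear least squares. The experiment matrix, the noise level, and the "true" parameters (k = 5.0, x = 1, y = 2) are invented for illustration.

```python
import numpy as np
from scipy.optimize import curve_fit

# Hypothetical initial-rate matrix: [A] varied in runs 1-3, [B] varied in runs 4-6.
A = np.array([0.01, 0.02, 0.04, 0.02, 0.02, 0.02])  # M
B = np.array([0.05, 0.05, 0.05, 0.10, 0.20, 0.40])  # M
rng = np.random.default_rng(2)
rate = 5.0 * A**1 * B**2 * (1.0 + rng.normal(0.0, 0.005, A.size))  # M/s, 0.5% noise

# 1) Linear regression on transformed data: ln(rate) = ln(k) + x ln[A] + y ln[B]
design = np.column_stack([np.ones_like(A), np.log(A), np.log(B)])
coef, *_ = np.linalg.lstsq(design, np.log(rate), rcond=None)
k_lin, x_lin, y_lin = np.exp(coef[0]), coef[1], coef[2]

# 2) Nonlinear least squares on the untransformed rates, started from the log-log estimates
def rate_law(conc, k, x, y):
    a, b = conc
    return k * a**x * b**y

(k_nls, x_nls, y_nls), _ = curve_fit(rate_law, (A, B), rate, p0=[k_lin, x_lin, y_lin])

print(f"log-log:   k = {k_lin:.2f}, x = {x_lin:.2f}, y = {y_lin:.2f}")
print(f"nonlinear: k = {k_nls:.2f}, x = {x_nls:.2f}, y = {y_nls:.2f}")
```

The two approaches weight the data differently: the log transform treats errors as proportional to the rate, while the direct fit treats them as constant in absolute terms. Which is more appropriate depends on the measurement error structure.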

[Figure: Data Alignment Strategy Logic]

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Research Reagent Solutions for Initial Rate Studies

| Item | Function | Critical Specification/Note |

|---|---|---|

| High-Purity Substrates/Inhibitors | Serve as reactants in the kinetic assay. Their concentration is the independent variable. | Purity >98% (HPLC). Stock solutions made gravimetrically. Stability under assay conditions must be verified. |

| Buffers | Maintain constant pH, ionic strength, and enzyme stability throughout the reaction. | Use a buffer with a pKa within 1 unit of desired pH. Pre-equilibrate to assay temperature. |

| Detection Reagent (e.g., NADH, Chromogenic substrate) | Allows spectroscopic or fluorometric monitoring of reaction progress over time. | Must have a distinct signal change, be stable, and not inhibit the reaction. Molar extinction coefficient must be known. |

| Enzyme/Protein Target | The catalyst whose activity is being measured. Its concentration is held constant. | High specific activity. Store aliquoted at -80°C. Determine linear range of rate vs. enzyme concentration before main experiments. |

| Quenching Solution | Rapidly stops the reaction at precise time points for discontinuous assays. | Must be 100% effective instantly and compatible with downstream detection (e.g., HPLC, MS). |

| Statistical Software Packages (R, Python with SciPy/Statsmodels) | Implement nonlinear regression, zero-inflated models, and error analysis [11] [10]. | Essential for moving beyond graphical analysis to robust parameter estimation and uncertainty quantification. |

Pitfalls and Validation: Ensuring Sustained Alignment

Common pitfalls originate from lapses in solving the Zeroth Problem, leading to analytically inert data [9].

Table 4: Common Pitfalls and Corrective Validation Measures

| Pitfall | Consequence | Corrective Validation Measure |

|---|---|---|

| Inadequate Temperature Control | The rate constant k changes, introducing uncontrolled variance that obscures the concentration-rate relationship. | Use a calibrated thermostatic bath. Monitor temperature directly in the reaction cuvette/vessel. |

| Measuring Beyond the "Initial Rate" Period | Reactant depletion or product accumulation alters the rate, so the measured rate does not correspond to the known initial concentrations. | Confirm linearity of signal vs. time for the duration used for slope calculation. Use ≤10% conversion rule. |

| Insufficient Concentration Range | The data does not span a wide enough range to reliably distinguish between different possible orders (e.g., 1 vs. 2). | Design experiments to vary each reactant over at least a 10-fold concentration range, if solubility and detection allow. |

| Ignoring Data Sparsity/Zero-Inflation | Applying standard regression to zero-inflated bioactivity data yields biased, overly confident parameter estimates [10]. | Perform EDA to characterize zero frequency. Compare standard model fit (AIC/BIC) with zero-inflated model fit. |

Validation is an ongoing process. After analysis, use the derived rate law to predict the initial rate for a new set of reactant concentrations not used in the fitting. A close match between predicted and experimentally measured rates provides strong validation that the data was properly aligned and the model is correct, closing the loop on the Zeroth Problem.
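The hold-out check described above can be sketched in a few lines. This is a minimal illustration, assuming a single-reactant power-law rate law (rate = k[A]^n) fitted by least squares on log-log axes; the function names, the concentration and rate values, and the 10% acceptance tolerance are all hypothetical and not taken from any cited protocol.

```python
import math

def fit_power_law(concs, rates):
    """Fit rate = k * [A]**n by ordinary least squares on log-log axes."""
    xs = [math.log(c) for c in concs]
    ys = [math.log(r) for r in rates]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    n = sxy / sxx                      # apparent reaction order (slope)
    k = math.exp(y_mean - n * x_mean)  # rate constant (from the intercept)
    return k, n

def relative_error(predicted, measured):
    return abs(predicted - measured) / measured

# Made-up training data (concentration in M, initial rate in M/s)
train_concs = [0.01, 0.02, 0.05, 0.10]
train_rates = [2.0e-6, 8.1e-6, 4.9e-5, 2.0e-4]
k, n = fit_power_law(train_concs, train_rates)

# Made-up hold-out measurement that was NOT used in the fit
holdout_conc, holdout_rate = 0.03, 1.8e-5
predicted_rate = k * holdout_conc ** n
error = relative_error(predicted_rate, holdout_rate)

TOLERANCE = 0.10  # assumed acceptance threshold, to be set per assay
print(f"order n = {n:.2f}, k = {k:.3g}, hold-out error = {error:.1%}")
print("validated" if error <= TOLERANCE else "investigate alignment or model")
```

A large hold-out error does not by itself say whether the data alignment or the assumed rate law is at fault; it only signals that one of them needs to be revisited.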

The Critical Importance of Metadata and Data Dictionaries in IDA

In the data-driven landscape of modern scientific research, particularly in drug development and biomedical studies, the integrity of the final analysis is wholly dependent on the initial groundwork. Initial Data Analysis (IDA) is the critical, yet often undervalued, phase that takes place between data retrieval and the formal analysis aimed at answering the research question [12]. Its primary aim is to provide reliable knowledge about data properties to ensure transparency, integrity, and reproducibility, which are non-negotiable for accurate interpretation [13] [12]. Within this framework, metadata and data dictionaries emerge not as administrative afterthoughts, but as the essential scaffolding that supports every subsequent step.

Metadata—literally "data about data"—provides the indispensable context that transforms raw numbers into meaningful information [13]. A data dictionary is a structured repository of this metadata, documenting the contents, format, structure, and relationships of data elements within a dataset or integrated system [14]. In the context of complex research environments, such as integrated data systems (IDS) that link administrative health records or longitudinal clinical studies, the role of these tools escalates from important to critical [14] [12]. They are the keystone in the arch of ethical data use, analytical reproducibility, and research efficiency, ensuring that data are not only usable but also trustworthy.

This guide positions metadata and data dictionary development as the foundational first step in a disciplined IDA process, framed within the broader thesis that rigorous initial analysis is a prerequisite for valid scientific discovery. We will explore their theoretical importance, provide practical implementation protocols, and demonstrate how they underpin the entire research lifecycle.

Theoretical Framework and Core Concepts

The Role of IDA in the Research Pipeline

Initial Data Analysis is systematically distinct from both data management and exploratory data analysis (EDA). While data management focuses on storage and access, and EDA seeks to generate new hypotheses, IDA is a systematic vetting process to ensure data are fit for their intended analytic purpose [13] [12]. The STRATOS Initiative framework outlines IDA as a six-phase process: (1) metadata setup, (2) data cleaning, (3) data screening, (4) initial data reporting, (5) refining the analysis plan, and (6) documentation [12]. The first phase—metadata setup—is the cornerstone upon which all other phases depend.

A common and costly mistake is underestimating the resources required for IDA. Evidence suggests researchers can expect to spend 50% to 80% of their project time on IDA activities, which includes metadata setup, cleaning, screening, and documentation [13]. This investment is non-negotiable for ensuring data quality and preventing analytical errors that can invalidate conclusions.

Table 1: Core Components of Initial Data Analysis (IDA)

| IDA Phase | Primary Objective | Key Activities Involving Metadata/Dictionaries |

|---|---|---|

| 1. Metadata Setup | Establish data context and definitions. | Creating data dictionaries; documenting variable labels, units, codes, and plausibility limits [13]. |

| 2. Data Cleaning | Identify and correct technical errors. | Using metadata to define validation rules (e.g., value ranges, permissible codes) [12]. |

| 3. Data Screening | Examine data properties and quality. | Using dictionaries to understand variables for summary statistics and visualizations [12]. |

| 4. Initial Reporting | Document findings from cleaning & screening. | Reporting against metadata benchmarks; highlighting deviations from expected data structure [13]. |

| 5. Plan Refinement | Update the statistical analysis plan (SAP). | Informing SAP changes based on data properties revealed through metadata-guided screening [12]. |

| 6. Documentation | Ensure full reproducibility. | Preserving the data dictionary, cleaning scripts, and screening reports as part of the research record [13]. |

Metadata and Data Dictionaries: Operationalizing the FAIR Principles

The FAIR Guiding Principles (Findable, Accessible, Interoperable, Reusable) provide a powerful framework for evaluating data stewardship, and data dictionaries are a primary tool for their implementation [14].

- Findability: A well-organized, publicly accessible data dictionary acts as a "menu" of available data elements, allowing researchers to discover what data exists without unnecessary access to sensitive information itself [14].

- Accessibility: Dictionaries enable precise, element-specific data requests. This supports ethical guidelines like HIPAA's "minimum necessary" standard by allowing researchers to request only the specific variables needed, minimizing privacy risk [14].

- Interoperability: Dictionaries achieve interoperability by using shared, accessible language to describe data. A key technique is including a crosswalk between technical variable names (e.g., DIAG_CD_ICD10) and programmatic names understandable to domain experts (e.g., primary_diagnosis_code) [14].

- Reusability: By documenting the provenance of data elements, including transformation rules (e.g., how a "prematurity indicator" is derived from birth and due dates), dictionaries enable future researchers to understand and reliably reuse data [14].

Table 2: How Data Dictionaries Implement the FAIR Principles

| FAIR Principle | Challenge in IDS/Research | How Data Dictionaries Provide a Solution |

|---|---|---|

| Findable | Data elements are hidden within complex, secure systems. | Serves as a publicly accessible catalog or index of available data elements and their descriptions [14]. |

| Accessible | Requesting full datasets increases privacy risk and review burden. | Enables specific, targeted data requests (e.g., only race and gender, not full demographics), facilitating approvals [14]. |

| Interoperable | Cross-disciplinary teams use different terminologies. | Provides a controlled vocabulary and crosswalks between technical and colloquial variable names [14]. |

| Reusable | Data transformations and lineage are lost over time. | Documents derivation rules, version history, and data quality notes (e.g., "field completion rate ~10%"), ensuring future understanding [14]. |

Experimental Protocols and Implementation

Protocol: Developing a Research Data Dictionary

A comprehensive data dictionary is more than a simple list of variable names. The following protocol outlines a method for its creation.

Objective: To create a machine- and human-readable document that fully defines the structure, content, and context of a research dataset.

Materials: Source dataset(s); data collection protocols; codebooks; collaboration software (e.g., shared documents, GitHub).

Procedure:

- Inventory Variables: List every variable in the dataset, using its exact technical name.

- Define Core Attributes: For each variable, populate the following fields:

- Label: A human-readable, descriptive name (e.g., "Body Mass Index at Baseline").

- Type: Data storage type (e.g., integer, float, string, date).

- Units: Measurement units (e.g., "kg/m²", "mmol/L").

- Permissible Values/Range: Define valid codes (e.g., 1=Yes, 2=No, 99=Missing) or a plausible numerical range (e.g., "18-120" for age).

- Source: Origin of the data (e.g., "Patient Questionnaire, Section 2, Q4", "Electronic Health Record: LabSerumCreatinine").

- Derivation Rule: If calculated, provide the exact algorithm (e.g., "BMI = weight (kg) / [height (m)]²").

- Missingness Codes: Explicitly define codes for missing data (e.g., -999, NA, NULL) and their reason if known (e.g., "Not Applicable", "Not Answered").

- Notes: Any quality issues, changes over time, or specific handling instructions (e.g., "High proportion of missing values post-2020 due to change in assay").

- Incorporate Process Metadata: Document dataset-level information: study purpose, collection dates, principal investigator, version number, and a description of any cleaning or preprocessing steps already applied.

- Review and Validate: Domain experts must review definitions for accuracy. Technical staff should verify labels and types against the actual data.

- Publish and Version: Store the dictionary in an open, non-proprietary format (e.g., CSV, JSON, PDF) alongside the data. Implement version control to track changes.
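The protocol above can be illustrated with a small machine-readable dictionary and a checker that applies it. This is a sketch only: the variable names (AGE_BL, SMOKER), the field layout, and the check_value helper are hypothetical choices made for the example, not a standard schema.

```python
import json

# Hypothetical dictionary with dataset-level metadata plus per-variable attributes
data_dictionary = {
    "dataset": {"version": "0.1.0", "description": "Illustrative example only"},
    "variables": {
        "AGE_BL": {
            "label": "Age at Baseline",
            "type": "integer",
            "units": "years",
            "range": [18, 120],          # plausibility limits
            "missing_codes": [-999],
            "source": "Patient Questionnaire, Section 2, Q4",
            "notes": "",
        },
        "SMOKER": {
            "label": "Current Smoker",
            "type": "integer",
            "units": None,
            "codes": {"1": "Yes", "2": "No"},  # permissible values
            "missing_codes": [99],
            "source": "Patient Questionnaire",
            "notes": "",
        },
    },
}

def check_value(entry, value):
    """Classify a value as 'ok', 'missing', or 'violation' per its dictionary entry."""
    if value is None or value in entry.get("missing_codes", []):
        return "missing"
    if "codes" in entry:
        return "ok" if str(value) in entry["codes"] else "violation"
    if "range" in entry:
        low, high = entry["range"]
        return "ok" if low <= value <= high else "violation"
    return "ok"

# Screen a few made-up rows against the dictionary
rows = [{"AGE_BL": 45, "SMOKER": 1}, {"AGE_BL": 130, "SMOKER": 99}, {"AGE_BL": -999, "SMOKER": 3}]
for row in rows:
    print({name: check_value(data_dictionary["variables"][name], v) for name, v in row.items()})

# Publish in an open, non-proprietary format; the file itself would go under version control
serialized = json.dumps(data_dictionary, indent=2)
```

Keeping the permissible codes and missingness codes in separate fields lets the same dictionary drive both the cleaning rules and the later missing-data evaluation.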

Protocol: Systematic Data Screening for Longitudinal Studies

Longitudinal studies, common in clinical trial analysis and cohort studies, present unique IDA challenges. This protocol, extending the STRATOS checklist, uses metadata to guide screening [12].

Objective: To systematically examine properties of longitudinal data to inform the appropriateness and specification of the planned statistical model.

Pre-requisites: A finalized data dictionary and a pre-planned statistical analysis plan (SAP) must be in place [12].

Procedure: Conduct the following five explorations, generating both summary statistics and visualizations:

- Participation Profiles: Use metadata on visit dates and study waves to chart the flow of participants over time. Create a visualization showing the number of participants contributing data at each time point and pathways of dropout.

- Missing Data Evaluation: Extend the dictionary's missingness codes to evaluate patterns over time. Distinguish between intermittent missingness and monotonic dropout. Assess if missingness is related to observed variables (e.g., worse baseline health predicting dropout).

- Univariate Description: Summarize each variable at each relevant time point (e.g., mean/median, SD, range). Use the data dictionary's plausibility ranges to flag outliers. Plot histograms or boxplots to visualize distributions across time.

- Multivariate Description: Examine correlations between key variables within and across time points, as anticipated in the SAP.

- Longitudinal Depiction: Plot individual trajectories and aggregate trends for primary outcomes over time to visualize within- and between-subject variability, informing the choice of covariance structures in models.

Output: An IDA report that summarizes findings from steps 1-5, explicitly links them to the original SAP, and proposes data-driven refinements to the analysis plan (e.g., suggesting a different model for missing data, or a transformation for a skewed variable) [12].
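The first two explorations (participation profiles and missing data evaluation) lend themselves to a short sketch. The example below assumes each participant's outcome is stored as a list with one entry per planned wave and None where no value was recorded; the data, the function names, and the decision to label late entry as "intermittent" are assumptions made for illustration.

```python
def participation_counts(profiles):
    """Number of participants contributing an observed value at each wave."""
    n_waves = len(next(iter(profiles.values())))
    return [sum(1 for values in profiles.values() if values[w] is not None)
            for w in range(n_waves)]

def classify_missingness(values):
    """Label one participant's pattern: complete, monotone dropout, or intermittent."""
    observed = [v is not None for v in values]
    if all(observed):
        return "complete"
    if not any(observed):
        return "never observed"
    first_missing = observed.index(False)
    # Monotone dropout: once a wave is missed, no later wave is observed
    if not any(observed[first_missing:]):
        return "monotone dropout"
    return "intermittent"

# Made-up outcome values for four participants across four waves
profiles = {
    "P01": [5.1, 5.0, 4.8, 4.7],
    "P02": [6.0, 5.9, None, None],
    "P03": [5.5, None, 5.2, 5.1],
    "P04": [4.9, 4.7, 4.6, None],
}

counts = participation_counts(profiles)
patterns = {pid: classify_missingness(v) for pid, v in profiles.items()}
print("observed per wave:", counts)
print("patterns:", patterns)
```

The per-wave counts feed the participation flow chart, while the pattern labels give a first indication of whether dropout models or methods for intermittent missingness are the more relevant refinement to the SAP.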

Diagram: Systematic Data Screening Workflow for Longitudinal Studies [12]

Implementing robust IDA with metadata at its core requires a combination of conceptual tools, software, and collaborative practices.

Table 3: Research Reagent Solutions for IDA and Metadata Management

| Tool Category | Specific Tool/Technique | Function in IDA & Metadata Management |

|---|---|---|

| Documentation & Reproducibility | R Markdown, Jupyter Notebook | Literate programming environments that integrate narrative text, code, and results to make the entire IDA process reproducible and self-documenting [13]. |

| Version Control | Git, GitHub, GitLab | Tracks changes to analysis scripts, data dictionaries, and documentation over time, enabling collaboration and preserving provenance [13]. |

| Data Validation & Profiling | R (validate, dataMaid), Python (pandas-profiling, now ydata-profiling; Great Expectations) | Software packages that use metadata rules to automatically screen data for violations and missingness patterns, and to generate quality reports. |

| Metadata Standardization | CDISC SDTM, OMOP CDM, ISA-Tab | Domain-specific standardized frameworks that provide predefined metadata structures, ensuring consistency and interoperability in clinical (SDTM, OMOP) or bioscience (ISA) research. |

| Collaborative Documentation | Static Dictionaries (CSV, PDF), Wiki Platforms, Electronic Lab Notebooks (ELNs) | Centralized, accessible platforms for hosting and maintaining live data dictionaries, facilitating review by cross-disciplinary teams [14]. |

Advanced Considerations and Specialized Contexts

Ethical Data Stewardship and Community Engagement

In studies using integrated administrative data or involving community-based participatory research, metadata transcends technical utility to become an instrument of ethical practice and data sovereignty [14]. A transparent data dictionary allows community stakeholders and oversight bodies to understand exactly what data is being collected and how it is defined. This practice builds trust and enables a form of democratic oversight. Furthermore, documenting data quality metrics (e.g., "completion rate for sensitive question is 10%") can reveal collection problems rooted in ethical or cultural concerns, prompting necessary changes to protocols [14].

Visualization for Data Screening and Reporting

Effective visualization is a core IDA activity for data screening and initial reporting [12]. The choice of chart must be guided by the metadata and the specific screening objective.

- Histograms & Frequency Plots: Essential for univariate description to check the distribution of continuous variables against expected ranges [15].

- Line Charts & Spaghetti Plots: Critical for the "longitudinal depiction" phase to visualize individual and aggregate trends over time [16] [12].

- Bar Charts: Useful for comparing summary statistics (e.g., mean, count) of categorical variables across groups or time points [16].

- Flow Diagrams: Vital for illustrating participant inclusion and attrition over time in longitudinal studies [12].

All visualizations must adhere to accessibility standards, including sufficient color contrast. For standard text within diagrams, a contrast ratio of at least 4.5:1 is required, and for large text, at least 3:1 [17]. The palette specified (#4285F4, #EA4335, #FBBC05, #34A853, #FFFFFF, #F1F3F4, #202124, #5F6368) must be applied with these ratios in mind, ensuring text is legible against its background [18] [19].
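The contrast thresholds above can be checked programmatically. The sketch below follows the WCAG 2.x definitions of relative luminance and contrast ratio as the author of this example understands them; the function names are hypothetical, and the colour pairs tested are simply drawn from the palette listed above.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(hex_color):
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)

def contrast_ratio(foreground, background):
    """Ratio of (lighter + 0.05) to (darker + 0.05); ranges from 1 to 21."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)

def passes(foreground, background, large_text=False):
    """Apply the 4.5:1 (standard text) or 3:1 (large text) threshold."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)

# Check a few text/background pairs from the palette
for fg, bg in [("#202124", "#FFFFFF"), ("#FBBC05", "#FFFFFF"), ("#FFFFFF", "#4285F4")]:
    print(fg, "on", bg, f"{contrast_ratio(fg, bg):.2f}", "pass" if passes(fg, bg) else "fail")
```

Running such a check over every text and background pairing before a report is finalized is cheaper than correcting figures after review.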

Diagram: The Central Role of the Data Dictionary in Enabling FAIR Principles for Research [14]

Metadata and data dictionaries are the silent, foundational pillars of credible scientific research. They operationalize ethical principles, enforce methodological discipline during Initial Data Analysis, and are the primary mechanism for achieving the FAIR goals that underpin open and reproducible science. For researchers, scientists, and drug development professionals, investing in their creation is not a bureaucratic task but a profound scientific responsibility. Integrating the protocols and tools outlined in this guide into the IDA plan ensures that research is built upon a solid, transparent, and trustworthy foundation, ultimately safeguarding the validity and impact of its conclusions.

Within the rigorous framework of initial rate data analysis research in drug development, the Initial Data Assessment (IDA) phase is critically resource-intensive. This phase, often consuming between 50-80% of the total analytical effort for a project, encompasses the foundational work of validating, processing, and preparing raw experimental data for robust pharmacokinetic/pharmacodynamic (PK/PD) and statistical analysis. The substantial investment is not merely procedural but strategic, forming the essential bedrock upon which all subsequent dose-response modeling, safety evaluations, and final dosage recommendations are built. This guide details the technical complexities, methodological protocols, and resource drivers that define this pivotal stage.

Technical Complexity and Resource Drivers of IDA

The IDA phase is protracted due to the confluence of multidimensional data complexity, stringent quality requirements, and iterative analytical processes. The primary drivers of resource consumption are systematized in the table below.

Table 1: Key Resource Drivers in the Initial Data Assessment (IDA) Phase

| Resource Driver Category | Specific Demands & Challenges | Estimated Impact on Timeline |

|---|---|---|

| Data Volume & Heterogeneity | Integration of high-throughput biomarker data (e.g., ctDNA, proteomics), continuous PK sampling, digital patient-reported outcomes, and legacy format historical data. | 25-35% |

| Quality Assurance & Cleaning | Anomaly detection, handling of missing data, protocol deviation reconciliation, and cross-validation against source documents. | 30-40% |

| Biomarker & Assay Validation | Establishing sensitivity, specificity, and dynamic range for novel pharmacodynamic biomarkers; reconciling data from multiple laboratory sites. | 15-25% |

| Iterative Protocol Refinement | Feedback loops between statisticians, pharmacologists, and clinical teams to refine analysis plans based on initial data structure. | 10-15% |

A central challenge is the management of diverse biomarker data, which is crucial for establishing a drug's Biologically Effective Dose (BED) range alongside the traditional Maximum Tolerated Dose (MTD). Biomarkers such as circulating tumor DNA (ctDNA) serve multiple roles—as predictive, pharmacodynamic, and potential surrogate endpoint biomarkers—each requiring rigorous validation and context-specific analysis plans [20]. The integration of this multi-omics data with classical PK and clinical safety endpoints creates a complex data architecture that demands sophisticated curation and harmonization before any formal modeling can begin.

Furthermore, modern dose-optimization strategies, encouraged by recent FDA guidance, rely on comparing multiple dosages early in development. This generates larger, more complex datasets from innovative trial designs (e.g., backfill cohorts, randomized dose expansions) that must be meticulously assessed to inform go/no-go decisions [20]. The shift from a simple MTD paradigm to a multi-faceted optimization model inherently expands the scope and depth of the IDA.

Methodological Protocols for Core IDA Workflows

A standardized yet flexible methodology is required to manage the IDA process efficiently. The following protocols outline critical workflows.

Protocol for Integrated Biomarker and PK Data Validation

This protocol ensures the reliability of primary data streams used for dose-response modeling.

- Source Data Reconciliation: For each patient, match biomarker sample IDs (e.g., from paired tumor biopsies or serial blood draws) with corresponding PK sampling timepoints and clinical event logs. Discrepancies must be documented and resolved via query with the clinical operations team.

- Assay Performance Verification: For each biomarker batch (e.g., a ctDNA sequencing run), verify that control samples fall within pre-defined ranges of sensitivity and specificity. Data from batches failing quality control (QC) are flagged and excluded from primary analysis.

- PK Data Non-Compartmental Analysis (NCA): Perform initial, non-model-based PK analysis (e.g., using WinNonlin or R PKNCA) to calculate key parameters (AUC, C~max~, T~max~, half-life) for each dosage cohort. This provides a preliminary view of exposure and identifies potential outliers or anomalous absorption profiles.

- Data Fusion for Exploratory Analysis: Merge validated biomarker levels (e.g., target engagement markers) with individual PK parameters (e.g., AUC). Generate exploratory scatter plots (exposure vs. biomarker response) to visually assess potential relationships and inform the structure of subsequent PK/PD models.
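To make the NCA step concrete, the sketch below computes the named parameters for a single concentration-time profile in plain Python. It is not the WinNonlin or PKNCA implementation: it assumes the linear trapezoidal rule for AUC up to the last sample and estimates the terminal slope from a log-linear fit to the last three points, both of which are illustrative choices. The profile values are made up.

```python
import math

def nca_summary(times, concs, n_terminal=3):
    """Basic non-compartmental parameters for one concentration-time profile."""
    cmax = max(concs)
    tmax = times[concs.index(cmax)]  # time of the first occurrence of Cmax

    # AUC from first to last sample by the linear trapezoidal rule
    auc_last = sum(
        (t2 - t1) * (c1 + c2) / 2
        for t1, t2, c1, c2 in zip(times, times[1:], concs, concs[1:])
    )

    # Terminal slope: least-squares line through log(conc) for the last points
    xs = times[-n_terminal:]
    ys = [math.log(c) for c in concs[-n_terminal:]]  # requires concentrations > 0
    x_mean, y_mean = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
             / sum((x - x_mean) ** 2 for x in xs))
    half_life = math.log(2) / -slope

    return {"cmax": cmax, "tmax": tmax, "auc_last": auc_last, "half_life": half_life}

# Made-up profile: time in hours, concentration in ng/mL
times = [0, 1, 2, 4, 8]
concs = [0.0, 10.0, 8.0, 4.0, 1.0]
summary = nca_summary(times, concs)
print(summary)
```

Computing these per subject and then tabulating by dosage cohort is usually enough to spot profiles whose T~max~ or half-life is far from the cohort norm and therefore worth tracing back to the source data.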

Protocol for "What-If" Scenario Planning via Resource Optimization Models

IDA must evaluate resource trade-offs for future studies. Computational models enable this [21] [22].

- Define Model Inputs: Quantify available resources (e.g., FTEs, analytical instrument time, budget) and project requirements for pipeline candidates. Assign a dynamically updated Probability of Success (POS) to each program based on internal and external factors [22].

- Configure Optimization Objective: Set the model's goal, such as maximizing the net present value of the portfolio or the number of candidates advancing to the next phase, subject to resource constraints.

- Run Scenario Analysis: Use the model (e.g., a Linear or Dynamic Programming framework [21]) to simulate outcomes under different scenarios. Key scenarios include reallocating resources from a stalled program, evaluating the impact of outsourcing an assay suite, or assessing the benefit of adding a new dosage cohort to an ongoing trial.

- Generate Allocation Outputs: The model outputs an optimal resource allocation plan. This plan is reviewed by the Safety Assessment and Development teams to ensure feasibility before being adopted for pipeline planning [22].
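The four steps above can be sketched as a small dynamic programming exercise. The example treats portfolio selection as a 0/1 knapsack: each program is either fully resourced or not, the objective is the sum of POS-weighted net present value, and the only constraint is an integer FTE budget. This is a deliberately simplified stand-in, not the AIMMS model or the approach of the cited references; the program names and all numbers are invented.

```python
def allocate(programs, fte_budget):
    """Pick the subset of programs maximizing expected value within an FTE budget.

    Classic 0/1 knapsack by dynamic programming over the integer budget.
    Returns (best expected value, list of selected program names).
    """
    # best[b] = (expected value, chosen names) achievable with at most b FTEs
    best = [(0.0, [])] * (fte_budget + 1)
    for program in programs:
        expected_value = program["pos"] * program["npv"]
        # Iterate budgets downward so each program is used at most once
        for b in range(fte_budget, program["fte"] - 1, -1):
            candidate = best[b - program["fte"]][0] + expected_value
            if candidate > best[b][0]:
                best[b] = (candidate, best[b - program["fte"]][1] + [program["name"]])
    return best[fte_budget]

# Hypothetical pipeline: FTE demand, probability of success, NPV if successful
programs = [
    {"name": "A", "fte": 4, "pos": 0.5, "npv": 100},
    {"name": "B", "fte": 3, "pos": 0.3, "npv": 120},
    {"name": "C", "fte": 2, "pos": 0.6, "npv": 40},
]

# Scenario analysis: current budget versus one additional FTE
for budget in (6, 7):
    value, selected = allocate(programs, budget)
    print(f"budget {budget} FTE -> fund {selected}, expected value {value:.0f}")
```

Rerunning the function with an updated POS or a changed budget is the "what-if" step; a production model would also need partial funding, multiple resource types, and time phasing, which this sketch omits.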

The logical flow of data and decisions through the IDA phase, culminating in inputs for advanced modeling, is visualized below.

Diagram 1: IDA Workflow and Its Central Role in Analysis

Protocol for Clinical Utility Index (CUI) Preliminary Calculation

A Clinical Utility Index integrates diverse endpoints into a single metric to aid dosage selection [20].

- Endpoint Selection & Normalization: Select key early endpoints (e.g., a biomarker response change, incidence of a key Grade 2+ toxicity, preliminary tumor response rate). Rescale each endpoint to a 0-1 scale, where 1 is most desirable.

- Weight Assignment: Assign preliminary weights to each normalized endpoint based on therapeutic area priorities and clinical input (e.g., efficacy weight = 0.6, safety weight = 0.4). These weights are refined during the analysis.

- CUI Calculation & Ranking: For each dosage cohort, calculate the weighted sum: CUI = Σ (Weight~i~ × Normalized Score~i~). Rank dosage cohorts by their preliminary CUI.

- Sensitivity Analysis: Perform a sensitivity analysis on the weights to determine if the ranking of dosage cohorts is robust. This identifies critical data gaps to address in the final analysis.
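The CUI calculation and its weight sensitivity analysis can be sketched as follows. The cohort labels and normalized scores are invented for illustration, and the two-endpoint setup (one efficacy score, one safety score, weights summing to 1) is a simplifying assumption; a real index would typically combine more endpoints.

```python
def cui(scores, weights):
    """Weighted sum of normalized (0-1) endpoint scores."""
    return sum(weights[name] * scores[name] for name in weights)

def rank_cohorts(cohorts, weights):
    """Cohort labels ordered from highest to lowest CUI."""
    return sorted(cohorts, key=lambda label: cui(cohorts[label], weights), reverse=True)

# Made-up normalized scores per dosage cohort (1 = most desirable)
cohorts = {
    "50 mg": {"efficacy": 0.40, "safety": 0.90},
    "100 mg": {"efficacy": 0.70, "safety": 0.70},
    "200 mg": {"efficacy": 0.80, "safety": 0.40},
}

# Preliminary weights from the example in the text
base_weights = {"efficacy": 0.6, "safety": 0.4}
print("base ranking:", rank_cohorts(cohorts, base_weights))

# Sensitivity analysis: sweep the efficacy weight and record the top-ranked cohort
top_by_weight = {}
for w in (0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9):
    weights = {"efficacy": w, "safety": 1 - w}
    top_by_weight[w] = rank_cohorts(cohorts, weights)[0]
print("top cohort by efficacy weight:", top_by_weight)
```

In this invented example the top-ranked cohort changes as the efficacy weight moves away from 0.6, which is exactly the situation the sensitivity step is meant to expose: the dosage ranking depends on a weighting choice that should be justified clinically rather than assumed.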

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Research Reagent & Resource Solutions for IDA

| Tool/Resource Category | Specific Item/Platform | Primary Function in IDA |

|---|---|---|

| Data Integration & Management | AIMMS Optimization Platform [22], Oracle Clinical DB | Provides robust data interchange, houses source data, and enables resource allocation scenario modeling. |

| Biomarker Assay Kits | Validated ctDNA NGS Panels, Multiplex Immunoassays (e.g., MSD, Luminex) | Generates high-dimensional pharmacodynamic and predictive biomarker data essential for BED determination [20]. |

| PK/PD Analysis Software | WinNonlin (Certara), R Packages (PKNCA, nlmixr2, mrgsolve) | Performs non-compartmental analysis and foundational PK/PD modeling on curated data. |

| Statistical Computing Environment | R, Python (with pandas, NumPy, SciPy libraries), SAS | Executes data cleaning, statistical tests, and the creation of custom analysis scripts for unique trial designs. |

| Decision Support Framework | Clinical Utility Index (CUI) Model, Pharmacological Audit Trail (PhAT) [20] | Provides structured frameworks to integrate disparate data types (efficacy/safety) into quantitative dosage selection metrics. |

The interplay between data generation, resource management, and decision-making frameworks within IDA is complex. The following diagram maps these critical relationships and dependencies.

Diagram 2: IDA System Inputs, Core Engine, and Outputs

The demand for 50-80% of analytical time and resources by the Initial Data Assessment is not an inefficiency but a strategic imperative in modern drug development. This investment directly addresses the challenges posed by complex biomarker-driven trials and the regulatory shift towards earlier dosage optimization [20]. By rigorously validating data, exploring exposure-response relationships, and simulating resource scenarios through structured protocols, the IDA phase transforms raw data into a credible foundation. It de-risks subsequent modeling and ensures that pivotal decisions on dosage selection and portfolio strategy are data-driven, robust, and ultimately capable of accelerating the delivery of optimized therapies to patients.

Executing Initial Data Analysis: A Step-by-Step Workflow for Data Cleaning and Screening

Within the rigorous framework of initial rate data analysis for drug development, Phase 1: Data Cleaning establishes the foundational integrity of the dataset. This phase involves the systematic identification, correction, and removal of errors and inconsistencies to ensure that subsequent pharmacokinetic (PK), pharmacodynamic (PD), and safety analyses are accurate and reliable. For researchers and scientists, this process is not merely preparatory; it is a critical scientific step that safeguards against erroneous conclusions that could derail a compound's development path [23] [24]. Dirty data—containing duplicates, missing values, formatting inconsistencies, and outliers—directly jeopardizes the determination of crucial parameters like maximum tolerated dose (MTD), bioavailability, and safety margins [25] [24].

The following table summarizes the core techniques employed in this phase, their specific applications in early-phase clinical research, and the associated risks of neglect.

Table 1: Core Data Cleaning Techniques in Initial Rate Data Analysis

| Technique | Description & Purpose | Common Issues in Research Data | Consequence of Neglect |

|---|---|---|---|

| Standardization [23] [26] | Transforming data into a consistent format (dates, units, categorical terms) to enable accurate comparison and aggregation. | Inconsistent lab unit reporting (e.g., ng/mL vs. μg/L), date formats, or terminology across sites. | Inability to pool data; errors in PK calculations (e.g., AUC, C~max~). |

| Missing Value Imputation [23] [26] | Addressing blank or null values using statistical methods to preserve dataset size and statistical power. | Missing pharmacokinetic timepoints, skipped safety lab results, or unreported adverse event details. | Biased statistical models; reduced power to identify safety or PD signals; data loss from complete-case analysis. |

| Deduplication [23] [26] | Identifying and merging records that refer to the same unique entity (e.g., subject, sample). | Duplicate subject entries from data transfer errors or repeated sample IDs from analytical runs. | Inflated subject counts; skewed summary statistics and dose-response relationships. |

| Validation & Correction [26] [27] | Checking data against predefined rules (range checks, logic checks) and correcting typos or inaccuracies. | Pharmacokinetic concentrations outside possible range, heart rate values incompatible with life, or illogical dose-time sequences. | Invalid safety and efficacy analyses; failure to detect data collection or assay errors. |

| Outlier Detection & Treatment [23] [26] | Identifying values that deviate significantly from the rest, followed by investigation, transformation, or removal. | Extreme PK values due to dosing errors or sample mishandling; anomalous biomarker readings. | Skewed estimates of central tendency and variability; masking of true treatment effects. |

Experimental Protocols for Data Validation in Early-Phase Trials

Implementing data cleaning requires methodical protocols integrated into the research workflow. The following detailed methodologies are essential for maintaining data quality from collection through analysis.

Protocol 1: Systematic Data Validation and Range Checking

This protocol ensures data plausibility and logical consistency before in-depth analysis.

- Define Validation Rules: Prior to database lock, establish rule sets based on the study protocol and biological plausibility. Examples include:

- Range Checks: Serum creatinine must be within 0.5-2.0 mg/dL for healthy volunteers [25].

- Logic Checks: The date of a reported adverse event must be on or after the first dose date.

- Dose-PK Consistency: A subject's PK sample concentration should be zero (or below the limit of quantification) at the pre-dose timepoint before the first dose.

- Automated Flagging: Implement these rules within the Electronic Data Capture (EDC) system or using data quality tools (e.g., Great Expectations) [26] [27]. Queries are automatically generated for violations.

- Source Data Verification (SDV): A clinical data coordinator reviews each flagged entry against the original source document (e.g., clinical chart, lab report) to confirm or correct the data [24].

- Audit Trail: Document all changes, including the reason for change, the user, and timestamp, to maintain a complete audit trail for regulatory compliance [27].
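The rule definition and automated flagging steps can be sketched without any particular EDC system or validation library. The example below hard-codes the three example rules from the protocol; the record layout, field names, subject IDs, and the assumed LLOQ of 1.0 ng/mL (used to decide whether a pre-dose concentration counts as quantifiable) are hypothetical.

```python
from datetime import date

LLOQ_NG_ML = 1.0  # assumed assay lower limit of quantification

def validate_record(record):
    """Return the list of rule violations (candidate queries) for one subject record."""
    flags = []
    # Range check: serum creatinine for healthy volunteers
    if not 0.5 <= record["creatinine_mg_dl"] <= 2.0:
        flags.append("range: serum creatinine outside 0.5-2.0 mg/dL")
    # Logic check: adverse event cannot precede first dose
    ae_date = record.get("ae_date")
    if ae_date is not None and ae_date < record["first_dose_date"]:
        flags.append("logic: adverse event dated before first dose")
    # Dose-PK consistency: no quantifiable drug before the first dose
    if record["predose_conc_ng_ml"] >= LLOQ_NG_ML:
        flags.append("dose-PK: quantifiable concentration at pre-dose")
    return flags

# Made-up records: one clean, one violating all three rules
records = [
    {"subject": "S001", "creatinine_mg_dl": 0.9, "first_dose_date": date(2024, 3, 1),
     "ae_date": date(2024, 3, 2), "predose_conc_ng_ml": 0.0},
    {"subject": "S002", "creatinine_mg_dl": 2.6, "first_dose_date": date(2024, 3, 1),
     "ae_date": date(2024, 2, 27), "predose_conc_ng_ml": 3.2},
]

queries = {r["subject"]: validate_record(r) for r in records}
for subject, flags in queries.items():
    print(subject, flags if flags else "no queries")
```

The function only flags; it never changes a value. Corrections belong to the source data verification step, with each change recorded in the audit trail.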

Protocol 2: Handling Missing Pharmacokinetic Data

Missing PK data can bias estimates of exposure. The imputation method must be pre-specified in the statistical analysis plan (SAP).

- Categorize Missingness: Determine the pattern: Missing Completely at Random (MCAR), at Random (MAR), or Not at Random (MNAR) [26].

- Select Imputation Method:

- For isolated missing timepoints (MCAR/MAR): Use interpolation for intermediate timepoints or PK modeling (e.g., population PK) to predict missing concentrations based on the subject's overall profile [26].

- For samples below the limit of quantification (BLQ): Apply a pre-defined rule, such as setting to zero, using LLOQ/√2, or treating as missing, as justified in the SAP.

- No imputation for critical omissions: If an entire profile or a key endpoint (e.g., C~max~) is missing, the subject's data may be excluded from PK summaries, with justification [28].

- Sensitivity Analysis: Conduct a parallel analysis using an alternative imputation method (e.g., complete-case analysis) to assess the robustness of the primary PK conclusions [26].
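Two of the rules above, BLQ handling and interpolation of isolated missing timepoints, can be sketched as small helper functions. The sketch assumes missing samples are stored as None, uses simple linear interpolation between the nearest observed neighbours, and leaves leading or trailing gaps unfilled; the function names, rule labels, and data are illustrative, and the actual rule must be the one pre-specified in the SAP.

```python
import math

def handle_blq(conc, lloq, rule="zero"):
    """Apply a pre-specified rule to a concentration below the LLOQ."""
    if conc is None or conc >= lloq:
        return conc  # missing stays missing; quantifiable values are unchanged
    if rule == "zero":
        return 0.0
    if rule == "lloq_over_sqrt2":
        return lloq / math.sqrt(2)
    if rule == "missing":
        return None
    raise ValueError(f"unknown BLQ rule: {rule}")

def interpolate_missing(times, concs):
    """Linearly interpolate interior missing values between observed neighbours."""
    filled = list(concs)
    observed = [i for i, c in enumerate(concs) if c is not None]
    for i, c in enumerate(concs):
        if c is not None:
            continue
        before = [j for j in observed if j < i]
        after = [j for j in observed if j > i]
        if before and after:  # only fill gaps bracketed by real observations
            lo, hi = before[-1], after[0]
            fraction = (times[i] - times[lo]) / (times[hi] - times[lo])
            filled[i] = concs[lo] + fraction * (concs[hi] - concs[lo])
    return filled

# Made-up profile with one interior gap and one trailing gap
times = [0, 1, 2, 4, 8]
concs = [0.0, 10.0, None, 4.0, None]
filled = interpolate_missing(times, concs)
print(filled)
print(handle_blq(0.3, lloq=1.0, rule="lloq_over_sqrt2"))
```

Running the downstream PK summary once on the filled profile and once on the observed values only gives the parallel comparison that the sensitivity analysis step calls for. Linear interpolation is used here for simplicity; a log-linear scheme may be more appropriate in the elimination phase.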

Data Cleaning Workflow in Drug Development Analysis

Protocol 3: Outlier Analysis for Safety and PK Data

Outliers require scientific investigation, not automatic deletion.

- Detection: Use statistical (e.g., IQR method) and graphical (e.g., box plots, PK concentration-time plots) methods to identify extreme values in safety labs, vital signs, and PK concentrations [26].

- Investigation: Trace each outlier to source documents. Determine if it is due to:

- Data Entry Error: Correct if a source document error is found.

- Procedural Error: e.g., sample drawn at wrong time, dose miscalculation. Document and decide on inclusion/exclusion per SAP.

- Biological Variability: A true, extreme physiological response. This data must typically be retained.

- Reporting: Document all identified outliers, the investigation results, and the final decision (include/transform/exclude) in the clinical study report appendix.
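The detection step with the IQR method can be sketched using only the Python standard library. The example flags values beyond 1.5 times the interquartile range from the quartiles, a common convention that is assumed here rather than prescribed by the protocol; the lab values and function name are invented. In keeping with the protocol, the function returns the flagged observations for investigation and does not remove anything.

```python
import statistics

def flag_iqr_outliers(values, k=1.5):
    """Return (index, value) pairs lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _median, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [(i, v) for i, v in enumerate(values) if v < lower or v > upper]

# Made-up safety lab values for one parameter across subjects
lab_values = [10, 12, 11, 13, 12, 11, 14, 12, 50]
flagged = flag_iqr_outliers(lab_values)

for index, value in flagged:
    # Each flagged value is traced to source documents before any decision is made
    print(f"record {index}: value {value} flagged for investigation")
```

The returned index makes it straightforward to link each flagged value back to its subject and visit, which is what the investigation and reporting steps need.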

Data Validation Protocol for Clinical Trials

The Scientist's Toolkit: Essential Reagents & Solutions

Table 2: Key Research Reagent Solutions for Data Cleaning & Analysis

| Tool / Solution | Function in Data Cleaning & Analysis | Application Example in Phase 1 Research |
|---|---|---|
| Electronic Data Capture (EDC) System | Provides a structured, validated interface for clinical data entry with built-in range and logic checks, ensuring data quality at the point of entry [25]. | Capturing subject demographics, dosing information, adverse events, and lab results in real-time during a Single Ascending Dose (SAD) trial [25]. |
| Laboratory Information Management System (LIMS) | Tracks and manages bioanalytical sample lifecycle, ensuring chain of custody and linking sample IDs to resulting PK/PD data, preventing misidentification [27]. | Managing thousands of plasma samples from a Multiple Ascending Dose (MAD) study, from aliquot preparation to LC-MS/MS analysis output. |
| Statistical Analysis Software (e.g., SAS, R) | Performs automated data validation, imputation, outlier detection, and statistical analysis per a pre-specified SAP. Essential for generating PK parameters and summary tables [26] [28]. | Calculating PK parameters (AUC, C~max~, t~½~) using non-compartmental analysis and performing statistical tests for dose proportionality. |
| Data Visualization Tools (e.g., Spotfire, ggplot2) | Creates graphs for exploratory data analysis, enabling visual identification of outliers, trends, and inconsistencies in PK/PD and safety data [26]. | Plotting individual subject concentration-time profiles to visually detect anomalous curves or unexpected absorption patterns. |
| Validation Rule Engine (e.g., Great Expectations, Pydantic) | Allows for the codification of complex business and scientific rules (e.g., "QTcF must be < 500 ms for dosing") to automatically validate datasets post-transfer [26] [27]. | Running quality checks on the final analysis dataset before generating tables, figures, and listings (TFLs) for the clinical study report. |
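To make the idea of codified validation rules concrete, the sketch below expresses a few checks as named predicates in plain Python. It deliberately does not use the Great Expectations or Pydantic APIs; the record fields, the dose rule, and the age range are hypothetical, and only the QTcF rule is taken from the table above.

```python
# Hypothetical analysis-dataset rows; field names are illustrative only
records = [
    {"subject": "S01", "qtcf_ms": 412, "dose_mg": 50, "age": 34},
    {"subject": "S02", "qtcf_ms": 507, "dose_mg": 50, "age": 41},
    {"subject": "S03", "qtcf_ms": 430, "dose_mg": -5, "age": 29},
]

# Each rule maps a readable name to a predicate that returns True on a pass
rules = {
    "QTcF must be < 500 ms for dosing": lambda r: r["qtcf_ms"] < 500,
    "Dose must be positive": lambda r: r["dose_mg"] > 0,        # assumed rule
    "Age must be 18-65": lambda r: 18 <= r["age"] <= 65,        # assumed rule
}

def validate(rows, rule_set):
    """Return (subject, rule name) for every failed check, in row order."""
    return [
        (row["subject"], name)
        for row in rows
        for name, passes in rule_set.items()
        if not passes(row)
    ]

failures = validate(records, rules)
for subject, rule in failures:
    print(f"{subject}: failed '{rule}'")
```

Storing the rules as data rather than as scattered if-statements means the same rule set can be rerun unchanged after every data transfer, which is the property a dedicated rule engine provides at larger scale.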

Initial Data Analysis (IDA) is a systematic framework that precedes formal statistical testing and ensures the integrity of research findings [13]. It consists of multiple phases, with Phase 2 (Data Screening) serving as the critical juncture where researchers assess the fundamental properties of their dataset. This phase is distinct from Exploratory Data Analysis (EDA): its primary aim is not hypothesis generation but verification that data quality is adequate and that the preconditions for the planned analyses are met [13]. In the context of drug development, rigorous data screening is non-negotiable; it safeguards against biased efficacy estimates and flawed safety signals and, ultimately, protects patient well-being and the integrity of regulatory submissions.

The core pillars of Data Screening are the assessment of data distributions, the identification and treatment of outliers, and the understanding of missing data patterns. Proper execution requires a blend of statistical expertise and deep domain knowledge to distinguish true biological signals from measurement artifact or data collection error [13]. Researchers should anticipate spending a significant portion of project resources (estimated at 50-80% of analysis time) on data setup, cleaning, and screening activities [13]. This investment is essential, as decisions made during screening directly influence the validity of all subsequent conclusions.

Assessing Data Distributions: Graphical and Quantitative Methods

The distribution of variables is a primary determinant of appropriate analytical methods. Assessing distribution shapes, central tendency, and spread forms the foundation for choosing parametric versus non-parametric tests and for identifying potential data anomalies.

Graphical Methods for Distribution Assessment: Visual inspection is the first and most intuitive step. Histograms are the most common tool for visualizing the distribution of continuous quantitative data [29] [30]. They group data into bins, and the height of each bar represents either the frequency (count) or relative frequency (proportion) of observations within that bin [31]. A density histogram scales the area of all bars to sum to 1 (or 100%), allowing direct interpretation of proportions [32] [31]. Key features to assess via histogram include:

- Modality: The number of peaks (unimodal, bimodal).

- Skewness: Asymmetry of the distribution (positive/right-skewed, negative/left-skewed).

- Kurtosis: The "tailedness" or heaviness of the tails relative to a normal distribution.

For smaller datasets, stem-and-leaf plots and dot plots offer similar distributional insights while preserving the individual data values, which histograms do not [30].

Protocol for Creating and Interpreting a Histogram:

- Determine Bins/Classes: Define a series of consecutive, non-overlapping intervals that cover the data range. The choice of bin width is critical: too few bins oversimplify the structure, while too many bins create excessive noise [32].

- Tally Frequencies: Count the number of observations falling into each bin.

- Construct the Plot: Draw a bar for each bin, with the bin interval on the x-axis and the frequency (or density) on the y-axis. Bars should be contiguous, reflecting the continuous nature of the data [29] [30].

- Interpretation: Analyze the shape for modality, skewness, and potential gaps or outliers. Overlaying a theoretical distribution (e.g., a normal curve) can aid in assessing fit.
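The binning and tallying steps above can be sketched without any plotting library. The following is a minimal illustration with equal-width bins; the ALT values and the choice of four bins are hypothetical, and in practice a plotting package would draw the bars.

```python
def histogram(values, n_bins):
    """Equal-width bins; returns (edges, counts, densities)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    edges = [lo + i * width for i in range(n_bins + 1)]
    counts = [0] * n_bins
    for v in values:
        # Bins are [left, right), except the last, which also includes the maximum
        idx = min(int((v - lo) / width), n_bins - 1)
        counts[idx] += 1
    n = len(values)
    # Density = count / (n * width), so the bar areas sum to 1
    densities = [c / (n * width) for c in counts]
    return edges, counts, densities

# Hypothetical ALT values (U/L)
alt = [18, 21, 22, 24, 25, 25, 27, 28, 30, 31, 33, 36, 41, 58]
edges, counts, densities = histogram(alt, n_bins=4)

# Text rendering of the bars: one '#' per observation
for left, right, c in zip(edges, edges[1:], counts):
    print(f"{left:>3.0f} to {right:<3.0f} {'#' * c}")
```

Rerunning the function with a different `n_bins` is a quick way to see how bin width changes the apparent shape: here the long right tail shows up as sparse bars at the high end.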

Quantitative Measures for Distribution Assessment: Graphical methods should be supplemented with numerical summaries.

Table 1: Quantitative Measures for Assessing Distributions and Scale

| Measure Type | Specific Metric | Interpretation in Screening | Sensitive to Outliers? |
|---|---|---|---|
| Central Tendency | Mean | Arithmetic average; coincides with the median when the distribution is symmetric. | Highly sensitive. |
| | Median | Middle value when data are ordered. The 50th percentile. | Robust (resistant). |
| | Mode | Most frequently occurring value(s). | Not sensitive. |
| Spread/Dispersion | Standard Deviation (SD) | Typical (root-mean-square) distance of data points from the mean. | Highly sensitive. |
| | Interquartile Range (IQR) | Range of the middle 50% of data (Q3 - Q1). | Robust (resistant). |
| | Range | Difference between maximum and minimum values. | Extremely sensitive. |
| Distribution Shape | Skewness Statistic | Quantifies asymmetry. >0 indicates right skew. | Sensitive. |
| | Kurtosis Statistic | Quantifies tail heaviness. >3 indicates heavier tails than normal (equivalently, excess kurtosis >0). | Sensitive. |

A large discrepancy between the mean and median suggests skewness. Similarly, if the standard deviation is much larger than the IQR, it often indicates the presence of outliers or a heavy-tailed distribution [33]; for reference, the IQR of normally distributed data is about 1.35 times the SD. For normally distributed data, approximately 68%, 95%, and 99.7% of observations fall within 1, 2, and 3 standard deviations of the mean, respectively. This rule is sometimes misapplied for outlier detection: the mean and SD are themselves inflated by the extreme values being sought, so the rule can fail to flag them [33].
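The measures in Table 1 can be computed directly from their definitions. The sketch below uses only the Python standard library and hypothetical data; the skewness and kurtosis are the plain moment-based versions (kurtosis of 3 for a normal distribution), whereas many statistical packages report bias-adjusted or excess values by default.

```python
from statistics import mean, median, pstdev, quantiles, stdev

def describe(values):
    """Screening summary: centre, spread, and moment-based shape statistics."""
    n = len(values)
    m = mean(values)
    sd_pop = pstdev(values)  # population SD, used only for the moment ratios
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    # Standardized third and fourth central moments
    skewness = sum((v - m) ** 3 for v in values) / (n * sd_pop ** 3)
    kurtosis = sum((v - m) ** 4 for v in values) / (n * sd_pop ** 4)
    return {
        "mean": m,
        "median": median(values),
        "sd": stdev(values),  # sample SD, as usually reported
        "iqr": q3 - q1,
        "skewness": skewness,
        "kurtosis": kurtosis,
    }

# Hypothetical lab values with one extreme observation
result = describe([12, 14, 15, 15, 16, 17, 18, 19, 21, 64])
for name, value in result.items():
    print(f"{name:>9}: {value:.2f}")
```

In this example the mean sits well above the median and the SD far exceeds the IQR, the two warning signs described above, while the median and IQR are barely affected by the single extreme value.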

Identifying and Treating Outliers

Outliers are extreme values that deviate markedly from other observations in the sample [33]. They may arise from measurement error, data entry error, sampling anomaly, or represent a genuine but rare biological phenomenon. Distinguishing between these causes requires domain expertise.

Methods for Identifying Outliers:

- Graphical Methods: The box plot (or box-and-whisker plot) is a standard tool. Outliers are typically defined as points lying beyond 1.5 * IQR above the third quartile (Q3) or below the first quartile (Q1) [33]. Histograms and dot plots also visually reveal extreme values [30].

- Statistical Distance Methods:

  - Z-score: For approximately normal data, an absolute Z-score > 3 is a common threshold. This method is less reliable for small samples or non-normal data as the mean and SD are themselves influenced by outliers [33].

  - Modified Z-score: Uses the median and Median Absolute Deviation (MAD), making it more robust.

- Multivariate Methods: For models considering multiple variables, leverage statistics, Cook's distance, and Mahalanobis distance can identify observations that have an undue influence on the model fit.
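The difference between the Z-score and the modified Z-score is easiest to see on a small sample. The sketch below is illustrative only: the data are hypothetical, and the 0.6745 scaling constant and the 3.5 cutoff for the modified score are commonly cited conventions that are assumed here rather than taken from the source.

```python
from statistics import mean, median, stdev

def z_scores(values):
    """Classical Z-scores based on the sample mean and SD."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]

def modified_z_scores(values):
    """Robust scores based on the median and Median Absolute Deviation."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    # 0.6745 rescales the MAD so scores are comparable to Z-scores under normality
    return [0.6745 * (v - med) / mad for v in values]

# Hypothetical small sample with one extreme value
x = [10.1, 9.8, 10.3, 9.9, 10.0, 10.2, 9.7, 25.0]

flag_z = [v for v, z in zip(x, z_scores(x)) if abs(z) > 3]
flag_mod = [v for v, z in zip(x, modified_z_scores(x)) if abs(z) > 3.5]

print("Flagged by |Z| > 3:           ", flag_z)
print("Flagged by |modified Z| > 3.5:", flag_mod)
```

With only eight observations, the extreme value inflates the SD so much that its own Z-score stays below 3, while the median-based score flags it clearly. This is the masking effect that makes the classical Z-score unreliable in small samples. The sketch assumes the MAD is non-zero; data with many tied values would need a fallback.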

Experimental Protocols for Outlier Treatment: Once identified, the rationale for handling each potential outlier must be documented. Common strategies include:

- Investigation: Before any action, attempt to verify the correctness of the value. If it is a confirmed error (e.g., data entry), correction may be possible.

- Trimming (Deletion): Removing the outlier(s) from the dataset. This is straightforward but reduces sample size and can introduce bias if the outlier is a valid observation [33].

- Winsorization: Replacing the extreme values with the nearest "non-outlier" values (e.g., the value at the 1.5*IQR boundary). This reduces influence without discarding the data point entirely [33].

- Robust Statistical Methods: Using analytical techniques (e.g., median regression, trimmed means) that are inherently less sensitive to extreme values [33].

- Segmented Analysis: Analyzing data with and without outliers to determine their impact on conclusions. Both results should be reported transparently.
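Winsorization and the with-and-without comparison can be sketched together. In the example below, values beyond the 1.5 × IQR fences are replaced with the nearest observed value inside the fences; the data are hypothetical, and clamping to the fence value itself would be an equally valid variant.

```python
from statistics import mean, median, quantiles

def iqr_fences(values, k=1.5):
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return q1 - k * (q3 - q1), q3 + k * (q3 - q1)

def winsorize_iqr(values, k=1.5):
    """Clamp values outside the IQR fences to the nearest observed inlier."""
    lower, upper = iqr_fences(values, k)
    inside = [v for v in values if lower <= v <= upper]
    lo, hi = min(inside), max(inside)
    return [min(max(v, lo), hi) for v in values]

def trim_iqr(values, k=1.5):
    """Drop values outside the IQR fences."""
    lower, upper = iqr_fences(values, k)
    return [v for v in values if lower <= v <= upper]

# Hypothetical measurements with one extreme value
raw = [4.1, 4.4, 4.6, 4.8, 5.0, 5.2, 5.3, 12.9]
winsorized = winsorize_iqr(raw)
trimmed = trim_iqr(raw)

# Report all versions side by side so the outlier's impact is transparent
for label, data in [("original", raw), ("winsorized", winsorized), ("trimmed", trimmed)]:
    print(f"{label:>10}: n={len(data)}  mean={mean(data):.3f}  median={median(data):.3f}")
```

The mean shifts noticeably between versions while the median of the original and winsorized data is identical, which is the kind of evidence that supports a documented decision about how to treat the value.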

Diagram: Decision Pathway for Handling Outliers

Handling Missing Data

Missing data is ubiquitous in research and can introduce significant bias if its mechanism is ignored. The approach must be guided by the missing data mechanism.

Table 2: Types of Missing Data and Their Implications [33] [34]

| Mechanism | Acronym | Definition | Example in Clinical Research | Impact on Analysis |
|---|---|---|---|---|
| Missing Completely at Random | MCAR | The probability of missingness is unrelated to both observed and unobserved data. | A sample is lost due to a freezer malfunction. | Reduces statistical power but does not introduce bias. |
| Missing at Random | MAR | The probability of missingness is related to observed data but not to the unobserved missing value itself. | Older patients are more likely to miss a follow-up visit, and age is recorded. | Can introduce bias if ignored, but bias can be corrected using appropriate methods. |
| Missing Not at Random | MNAR | The probability of missingness is related to the unobserved missing value itself. | Patients with severe side effects drop out of a study, and their final outcome score is missing. | High risk of bias; most challenging to handle. |

Methods for Handling Missing Data:

- Complete Case Analysis: Using only subjects with complete data for all variables. This is the default in many statistical packages but leads to loss of power and can cause severe bias if data are not MCAR [33].

- Available Case Analysis: Using all available data for each specific analysis, leading to varying sample sizes across analyses. This can produce inconsistent results [33].

- Single Imputation: Replacing a missing value with a single plausible estimate (e.g., mean, median, regression prediction). Simple but underestimates variability [33].

- Multiple Imputation (Gold Standard): Creating multiple (m) complete datasets by imputing missing values m times, reflecting the uncertainty about the missing data. Analyses are run on each dataset and results are pooled. This provides valid standard errors and is appropriate for data that are MCAR or MAR [33].

- Model-Based Methods: Using maximum likelihood estimation or Bayesian methods that model the data and missingness process simultaneously.
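The pooling step of multiple imputation is usually done with Rubin's rules, which combine the within-imputation and between-imputation variance. The sketch below shows only that pooling arithmetic; the five estimates and standard errors are hypothetical stand-ins for results obtained by fitting the same model to m = 5 imputed datasets, and the imputation itself would be done with a dedicated package.

```python
from math import sqrt
from statistics import mean, variance

def pool_rubin(estimates, std_errors):
    """Pool m point estimates and their standard errors using Rubin's rules."""
    m = len(estimates)
    q_bar = mean(estimates)                      # pooled point estimate
    within = mean(se ** 2 for se in std_errors)  # average within-imputation variance
    between = variance(estimates)                # between-imputation variance (m - 1 denominator)
    total = within + (1 + 1 / m) * between       # total variance
    return q_bar, sqrt(total)

# Hypothetical treatment-effect estimates from m = 5 imputed datasets
estimates = [2.10, 2.25, 1.95, 2.20, 2.00]
std_errors = [0.30, 0.31, 0.29, 0.30, 0.30]

pooled_estimate, pooled_se = pool_rubin(estimates, std_errors)
print(f"Pooled estimate: {pooled_estimate:.3f}")
print(f"Pooled SE:       {pooled_se:.3f}")
```

The pooled standard error is larger than any single-dataset standard error because it includes the between-imputation term. That extra term is the uncertainty about the missing values that single imputation ignores.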

Protocol for Assessing Missing Data Patterns:

- Quantify: Use summary functions (e.g., summary() in R) or missingness matrices to count NAs per variable [34].

- Visualize: Create a missingness pattern plot or leverage exploratory plots to see if missingness in one variable is associated with values of another.

- Diagnose Mechanism: Use domain knowledge and statistical tests (e.g., Little's MCAR test) to hypothesize the missing data mechanism (MCAR, MAR, MNAR).

- Select & Apply Method: Choose a handling method commensurate with the mechanism and the analysis goal. For MAR data planned for a regression, multiple imputation is often optimal.

- Sensitivity Analysis: Conduct analyses under different plausible assumptions about the missing data (e.g., different imputation models, MNAR scenarios) to assess the robustness of conclusions.
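The quantification and diagnosis steps can be sketched with plain Python. The example below counts missing values per variable and then compares an observed covariate between rows where the target is missing and rows where it is present. The dataset and variable names are hypothetical, and this informal comparison is not Little's MCAR test; it can suggest that data are not MCAR but cannot distinguish MAR from MNAR.

```python
from statistics import mean

# Hypothetical subject-level data; None marks a missing value
rows = [
    {"age": 34, "weight": 70.2, "auc": 812.0},
    {"age": 71, "weight": 64.0, "auc": None},
    {"age": 45, "weight": None, "auc": 790.5},
    {"age": 68, "weight": 81.3, "auc": None},
    {"age": 29, "weight": 59.8, "auc": 845.1},
    {"age": 74, "weight": 77.0, "auc": None},
    {"age": 52, "weight": 68.4, "auc": 801.9},
    {"age": 38, "weight": 73.5, "auc": 830.0},
]

def missing_counts(data):
    """Number of missing values per variable."""
    return {col: sum(r[col] is None for r in data) for col in data[0]}

def mean_by_missingness(data, target, by):
    """Mean of `by` where `target` is missing versus where it is observed."""
    miss = [r[by] for r in data if r[target] is None and r[by] is not None]
    obs = [r[by] for r in data if r[target] is not None and r[by] is not None]
    return mean(miss), mean(obs)

counts = missing_counts(rows)
age_if_missing, age_if_observed = mean_by_missingness(rows, target="auc", by="age")

print("Missing per variable:", counts)
print(f"Mean age when AUC is missing:  {age_if_missing:.1f}")
print(f"Mean age when AUC is observed: {age_if_observed:.1f}")
```

A clear age difference between the two groups, as in this constructed example, argues against MCAR and suggests that age should be included in any imputation model.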

Practical Implementation and Workflow Integration

Data screening should be a planned, reproducible, and documented component of the research pipeline, not an ad-hoc activity [13]. An IDA plan should be developed in conjunction with the study protocol and Statistical Analysis Plan (SAP) [13].

Reproducible Screening Workflow:

- Protect Raw Data: Never modify the original source data. All screening steps should be performed on a copy via executable code [13].

- Code-Based Rules: Implement all data cleaning, transformation, and outlier rules in scripted code (e.g., R, Python) rather than manual edits in a spreadsheet [13].

- Version Control: Use systems like Git to track changes to analysis scripts.

- Literate Programming: Integrate screening code, outputs (tables, graphs), and narrative documentation in tools like R Markdown or Jupyter Notebooks to ensure full reproducibility [13].

- Create an Audit Trail: Log all decisions, including the justification for transforming a variable, Winsorizing an outlier, or selecting an imputation model.
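The first and last points of this workflow, protecting raw data and keeping an audit trail, can be combined in one small helper. The sketch below is one possible design, not a prescribed one: the function name, log fields, and the exclusion rule are hypothetical, and a real pipeline would write the log to a version-controlled file.

```python
import copy
import json
from datetime import datetime, timezone

audit_log = []

def apply_step(data, step, description, justification):
    """Run `step` on a deep copy of `data` and record the decision."""
    result = step(copy.deepcopy(data))  # the input object is never modified
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "description": description,
        "justification": justification,
        "rows_in": len(data),
        "rows_out": len(result),
    })
    return result

def drop_missing_weight(rows):
    return [r for r in rows if r["weight_kg"] is not None]

# Hypothetical raw data
raw = [
    {"subject": "S01", "weight_kg": 70.2},
    {"subject": "S02", "weight_kg": None},
    {"subject": "S03", "weight_kg": 81.3},
]

screened = apply_step(
    raw,
    drop_missing_weight,
    description="Exclude rows with missing body weight",
    justification="Hypothetical rule: weight is required for dose normalization",
)

print(json.dumps(audit_log, indent=2))
```

Because every transformation passes through the same function, the log records the row counts before and after each step along with the stated reason, and the raw object is left untouched for re-analysis.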

Diagram: Reproducible Data Screening Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Toolkit for Data Screening in Statistical Software

| Tool/Reagent Category | Specific Examples (R packages highlighted) | Primary Function in Screening | Key Considerations |
|---|---|---|---|
| Data Wrangling & Inspection | dplyr, tidyr (R); pandas (Python) | Subsetting, summarizing, and reshaping data for screening. | Facilitates reproducible data manipulation. |
| Distribution Visualization | ggplot2 (R); matplotlib, seaborn (Python) | Creating histograms, density plots, box plots, and Q-Q plots. | ggplot2 allows layered, publication-quality graphics. |
| Missing Data Analysis | naniar, mice (R); fancyimpute (Python) | Visualizing missing patterns and performing multiple imputation. | mice is a comprehensive, widely validated multiple imputation package. |