Kron Reduction Method for Parameter Estimation: Solving Ill-Posed Problems in Biochemical Kinetic Modeling


Abstract

This article provides a comprehensive guide to the Kron reduction method for parameter estimation in kinetic models of chemical reaction networks (CRNs), specifically addressing the common challenge of incomplete experimental data. Aimed at researchers and drug development professionals, it covers foundational theory, practical implementation steps, troubleshooting for optimization, and comparative validation against traditional methods. By transforming ill-posed estimation problems into well-posed ones, Kron reduction, combined with least squares optimization, offers a robust framework for accurately inferring kinetic parameters from partial time-series concentration data, which is critical for reliable systems biology modeling and drug discovery.

Understanding Kron Reduction: From Network Theory to Ill-Posed Parameter Problems

A critical step in understanding the dynamics of a Chemical Reaction Network (CRN) is translating biological knowledge into a mathematical model, typically a system of Ordinary Differential Equations (ODEs) governed by kinetic rate laws like Mass Action Kinetics [1]. The parameters of these models—such as reaction rate constants—are often unknown and must be estimated from experimental data. This process is foundational to the bottom-up modelling approach in systems biology, where constructing a comprehensive model from experimental data is the ultimate goal [1].

Estimation becomes fundamentally harder under partial observability. In most real-world experiments, it is technically or biologically impossible to measure the time-course concentrations of all chemical species involved in a network. When experimental data is available for only a subset of species, the parameter estimation problem becomes ill-posed [1]. Conventional optimization techniques, such as (weighted) least squares, cannot be applied directly to the full model because the mismatch between model predictions and data cannot be evaluated for the unobserved states. This partial observability is a central obstacle in creating predictive models for complex biological systems, from cellular signaling pathways to pharmacokinetic-pharmacodynamic (PK/PD) models used in drug development [1].

This Application Note addresses this challenge by detailing a methodology centered on the Kron reduction method. This approach, integrated with least squares optimization, provides a mathematically rigorous framework to convert an ill-posed problem into a well-posed one, enabling parameter estimation from partial time-series data [1]. The protocols herein are framed within ongoing thesis research aimed at advancing robust parameter estimation techniques for biochemical systems.

Foundational Methodology: The Kron Reduction Framework

The Kron reduction method is chosen for model reduction due to two key properties critical for parameter estimation: kinetics preservation and observability alignment [1]. If the original CRN follows Mass Action Kinetics, the reduced model is also a CRN governed by the same law. Furthermore, the method systematically reduces the model so that the dependent variables in the reduced model correspond precisely to the set of species whose concentration data is available.

2.1 Mathematical Formulation

Consider an original CRN model with state vector ( x \in \mathbb{R}^n ) (concentrations of all species) and parameter vector ( p \in \mathbb{R}^m ), described by ODEs: ( \dot{x} = f(x, p) ). Experimental data ( \hat{y}(t_k) ) is available for a subset ( y = Cx ), where ( C ) is a selection matrix, and ( k ) denotes discrete time points.

The Kron reduction process eliminates a subset of complexes from the network's graph representation, producing a reduced-order model with state vector ( z \in \mathbb{R}^r ) (where ( r ) is the number of observed species). The dynamics are given by ( \dot{z} = g(z, \theta) ), where the new parameters ( \theta ) are explicit functions of the original parameters ( p ): ( \theta = \Phi(p) ) [1].
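As a minimal illustration of the map ( \theta = \Phi(p) ), the sketch below applies a Schur complement to the weighted Laplacian of a hypothetical three-species reversible chain X1 ⇌ X2 ⇌ X3 and eliminates the unobserved X2. The function name `kron_reduce`, the rate values, and the sign convention ( \dot{x} = -Lx ) with zero column sums are assumptions made for illustration; they are not taken from [1].

```python
import numpy as np

def kron_reduce(L, keep):
    """Schur complement of L with respect to the rows/columns not in `keep`."""
    keep = np.asarray(keep)
    drop = np.setdiff1d(np.arange(L.shape[0]), keep)
    L_kk = L[np.ix_(keep, keep)]
    L_kd = L[np.ix_(keep, drop)]
    L_dk = L[np.ix_(drop, keep)]
    L_dd = L[np.ix_(drop, drop)]
    # Solve a linear system instead of forming an explicit inverse.
    return L_kk - L_kd @ np.linalg.solve(L_dd, L_dk)

# Hypothetical rate constants p = (k12, k21, k23, k32) for X1 <-> X2 <-> X3.
k12, k21, k23, k32 = 2.0, 1.0, 3.0, 0.5

# Linear mass-action dynamics x' = -L x; each column of L sums to zero.
L = np.array([
    [ k12,      -k21,   0.0],
    [-k12, k21 + k23,  -k32],
    [ 0.0,      -k23,   k32],
])

L_red = kron_reduce(L, keep=[0, 2])   # keep X1 and X3, eliminate X2

# Reduced rate constants theta = Phi(p), read off the off-diagonal entries.
theta_13 = -L_red[1, 0]   # effective X1 -> X3 rate
theta_31 = -L_red[0, 1]   # effective X3 -> X1 rate
print(theta_13, k12 * k23 / (k21 + k23))
print(theta_31, k32 * k21 / (k21 + k23))
```

In this toy case four original rate constants collapse into two reduced ones, so ( \Phi ) cannot be inverted without additional information.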

2.2 The Three-Step Estimation Protocol

The overall parameter estimation workflow is automated and can be implemented in computational environments like MATLAB [1].

- Model Reduction: Apply Kron reduction to the original full model ( f(x, p) ) to derive the reduced model ( g(z, \theta) ), where ( z ) corresponds to the measurable species.

- Reduced Model Fitting: Solve a well-posed least squares problem using the available data ( \hat{y} ): ( \min_{\theta} \sum_{k} \| \hat{y}(t_k) - z(t_k; \theta) \|^2 ). This yields an estimate for the reduced parameters, ( \hat{\theta} ).

- Full Parameter Recovery: Solve a second optimization problem to find the original parameters ( p ) that reproduce the fitted reduced parameters through the derived functional relationship while minimizing a dynamical difference between the original and reduced models: ( \min_{p} \mathcal{D}(f(x, p), g(z, \Phi(p))) \quad \text{subject to} \quad \hat{\theta} = \Phi(p) ). The measure ( \mathcal{D} ) quantifies the dynamical difference; for linear systems, it relates to the settling time of the network [1].
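The reduced-model fitting step can be sketched as follows, assuming a hypothetical two-species reduced model Z1 ⇌ Z2 and synthetic data. The model, the rate values, the noise level, and the helper names (`reduced_rhs`, `simulate`, `residuals`) are illustrative assumptions. SciPy is used here in place of the MATLAB implementation mentioned in [1].

```python
import numpy as np
from scipy.integrate import solve_ivp
from scipy.optimize import least_squares

def reduced_rhs(t, z, theta):
    """Hypothetical reduced mass-action model Z1 <-> Z2 with rates theta."""
    th_f, th_r = theta
    flux = th_f * z[0] - th_r * z[1]
    return [-flux, flux]

def simulate(theta, t_obs, z0):
    sol = solve_ivp(reduced_rhs, (t_obs[0], t_obs[-1]), z0, t_eval=t_obs,
                    args=(theta,), rtol=1e-8, atol=1e-10)
    return sol.y.T                      # shape (n_times, n_observed_species)

def residuals(theta, t_obs, y_hat, z0):
    # Stacked residuals z(t_k; theta) - y_hat(t_k) over all k and species.
    return (simulate(theta, t_obs, z0) - y_hat).ravel()

# Synthetic "measurements" y_hat(t_k): simulated truth plus Gaussian noise.
rng = np.random.default_rng(0)
t_obs = np.linspace(0.0, 4.0, 25)
z0 = [1.0, 0.0]
theta_true = np.array([1.5, 0.125])
y_hat = simulate(theta_true, t_obs, z0) + rng.normal(0.0, 0.005, (25, 2))

# Bounded nonlinear least squares; rate constants are kept non-negative.
fit = least_squares(residuals, x0=[0.5, 0.5], bounds=(0.0, np.inf),
                    args=(t_obs, y_hat, z0))
theta_hat = fit.x
print(theta_hat)
```

Because every state of the reduced model is measured, the residual vector is fully defined, which is what makes this least squares problem well-posed.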

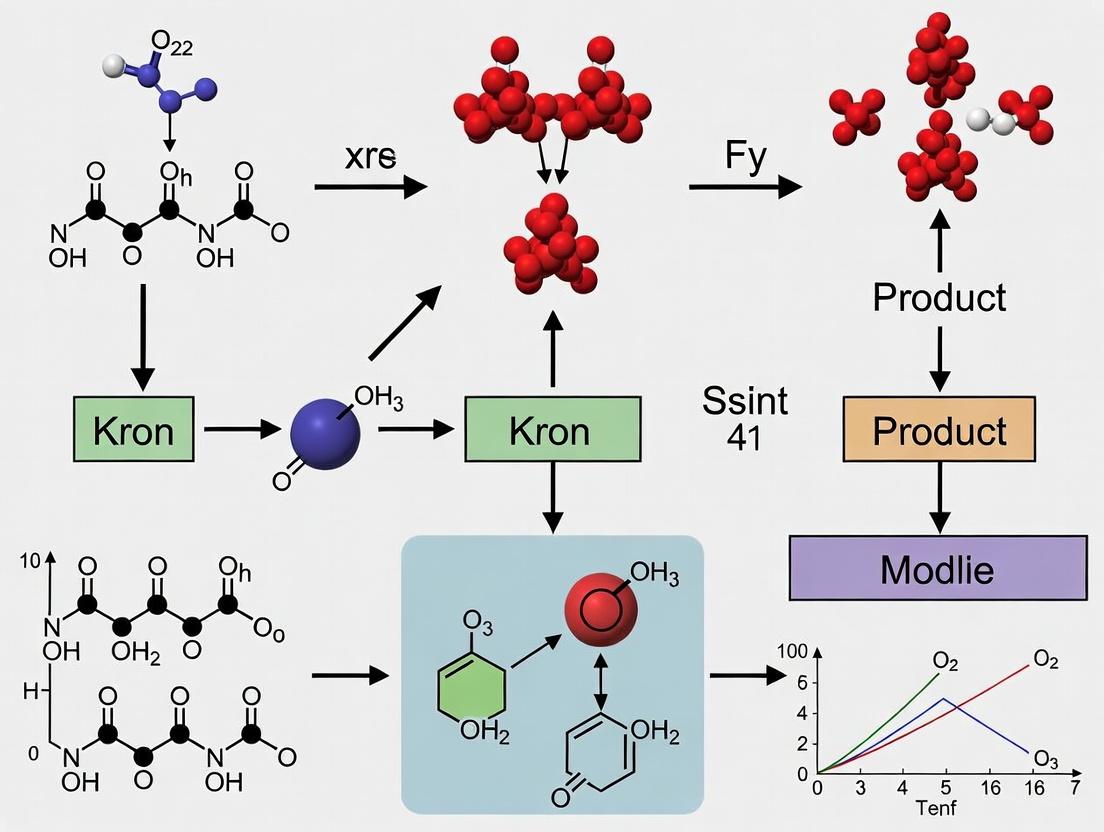

Diagram: Workflow for Parameter Estimation via Kron Reduction

Application Notes & Experimental Protocols

The following protocols detail the application of the Kron reduction method to two distinct CRNs, demonstrating its utility in biologically relevant contexts.

3.1 Protocol 1: Nicotinic Acetylcholine Receptor (nAChR) Model

- Biological Context & Objective: Nicotinic acetylcholine receptors are ligand-gated ion channels critical to neuromuscular and neuronal signaling. The objective is to estimate kinetic parameters governing receptor conformational states (e.g., resting, active, desensitized) from limited electrophysiological or binding assay data [1].

- Experimental Design:

- Data Generation: Using a reference model (e.g., from Biomodels Database) [1], simulate time-series data for a subset of observable species (e.g., open channel concentration, ligand-bound receptor concentration). Add Gaussian noise to mimic experimental error.

- Model Reduction: Apply Kron reduction to the full-state model (including unobserved closed and desensitized states) to obtain a reduced model whose states are only the observable species.

- Parameter Estimation: a. Perform weighted least squares fitting on the reduced model. Weights can be inversely proportional to the variance of the measurement noise. b. Use leave-one-out cross-validation to compare the performance of weighted vs. unweighted least squares and prevent overfitting [1]. c. Execute the full parameter recovery optimization to estimate the original kinetic rate constants.

- Key Quantitative Results: Table 1: Parameter Estimation Results for nAChR Model [1]

| Estimation Method | Training Error | Key Estimated Parameter (Example: Opening rate k_open) | Cross-Validation Score |
|---|---|---|---|
| Unweighted Least Squares | 3.22 | Value ± Std. Err. | [Value] |
| Weighted Least Squares | 3.61 | Value ± Std. Err. | [Value] |

- Interpretation: The slightly higher training error for the weighted method may indicate a trade-off between fitting precision and robustness, emphasizing the need for validation [1].
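The difference between weighted and unweighted least squares in the parameter estimation step above can be sketched with residuals scaled by the inverse noise standard deviation, so that the squared residuals are weighted by the inverse variance. The single-exponential observable, the noise model, and the helper `make_residuals` are hypothetical stand-ins, not the nAChR model.

```python
import numpy as np
from scipy.optimize import least_squares

def model(theta, t):
    """Hypothetical observable: single-exponential decay a * exp(-k t)."""
    a, k = theta
    return a * np.exp(-k * t)

def make_residuals(t, y, sigma=None):
    """Return a residual function; sigma=None gives unweighted least squares."""
    w = np.ones_like(y) if sigma is None else 1.0 / sigma
    return lambda theta: w * (model(theta, t) - y)

rng = np.random.default_rng(1)
t = np.linspace(0.0, 5.0, 40)
theta_true = np.array([2.0, 0.8])
# Assumed known, signal-dependent noise standard deviation per data point.
sigma = 0.02 + 0.05 * model(theta_true, t)
y = model(theta_true, t) + rng.normal(0.0, sigma)

fit_u = least_squares(make_residuals(t, y), x0=[1.0, 1.0])
fit_w = least_squares(make_residuals(t, y, sigma), x0=[1.0, 1.0])
print("unweighted:", fit_u.x)
print("weighted:  ", fit_w.x)
```

Note that the two objective values are on different scales (raw units versus noise-normalized units), so training errors from the two fits are not directly comparable without a common validation metric.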

3.2 Protocol 2: Trypanosoma brucei Trypanothione Synthetase Model

- Biological Context & Objective: Trypanothione synthetase is a vital enzyme for the parasite Trypanosoma brucei (causing African sleeping sickness), making it a prime drug target. The goal is to estimate enzyme kinetic parameters (e.g., ( K_m ), ( V_{max} )) from time-course data of substrate and product concentrations, which are more readily measurable than enzyme-substrate complex concentrations [1].

- Experimental Design:

- Data Source: Use published enzymatic assay data or generate synthetic data from a validated model.

- Kron Reduction Strategy: The original model includes intermediate complexes. Kron reduction is used to eliminate these unobserved complexes, deriving a reduced model directly relating substrate depletion and product formation rates.

- Identifiability Analysis: Prior to estimation, address parameter identifiability—assessing whether the available data type can uniquely determine the parameters. This involves analyzing the structure of the reduced model ( g(z, \theta) ) [1].

- Estimation & Validation: Follow the three-step protocol. Validate estimated parameters by predicting the dynamics of the original full model under new initial conditions not used in the training data.

- Key Quantitative Results: Table 2: Parameter Estimation Results for Trypanothione Synthetase Model [1]

| Estimation Method | Training Error | Key Estimated Parameter (Example: Catalytic rate k_cat) | Identifiability Status |
|---|---|---|---|
| Unweighted Least Squares | 0.82 | Value ± Std. Err. | Structurally Globally Identifiable |
| Weighted Least Squares | 0.70 | Value ± Std. Err. | Structurally Globally Identifiable |

- Interpretation: The lower training errors compared to the nAChR example suggest the problem is better conditioned. The weighted least squares method provided a better fit for this network [1].
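For the identifiability analysis step in the experimental design above, a quick numerical proxy is to inspect the rank of the output sensitivity matrix at a nominal parameter value. The sketch below is a local, sensitivity-based check only, not a structural analysis of ( g(z, \theta) ). The observable, in which two parameters appear only as a product, and the helper `sensitivity_matrix` are hypothetical.

```python
import numpy as np

def output(theta, t):
    """Hypothetical observable in which a and b enter only as the product a*b."""
    a, b, k = theta
    return a * b * np.exp(-k * t)

def sensitivity_matrix(f, theta, t, rel_step=1e-6):
    """Central-difference sensitivities d f(t_k) / d theta_j."""
    theta = np.asarray(theta, dtype=float)
    S = np.empty((len(t), len(theta)))
    for j in range(len(theta)):
        h = rel_step * max(abs(theta[j]), 1.0)
        tp, tm = theta.copy(), theta.copy()
        tp[j] += h
        tm[j] -= h
        S[:, j] = (f(tp, t) - f(tm, t)) / (2 * h)
    return S

t = np.linspace(0.0, 5.0, 50)
S = sensitivity_matrix(output, [2.0, 0.5, 0.8], t)
sing = np.linalg.svd(S, compute_uv=False)
# Count singular values that are not negligible relative to the largest one.
rank = int(np.sum(sing > 1e-6 * sing[0]))
print(sing, rank)
```

A rank below the number of parameters signals that some parameter combination cannot be resolved from this output near the nominal point, which is a cue to reparameterize or add observables before fitting.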

Diagram: Simplified Trypanothione Synthesis Pathway & Observation

The Scientist's Toolkit: Research Reagent & Computational Solutions

Successful implementation of these protocols requires both wet-lab reagents and computational tools. Table 3: Essential Research Reagent Solutions for CRN Kinetic Studies

| Item / Reagent | Function / Role in Protocol | Example & Notes |
|---|---|---|
| Purified Enzyme/Receptor | The core catalytic or binding protein of interest. Required for in vitro kinetic assays to generate time-series data. | Recombinant Trypanothione Synthetase (TbTS); nAChR purified from Torpedo electroplax or expressed in cell lines. |
| Fluorogenic/Luminescent Substrates | Enable real-time, continuous measurement of product formation or substrate depletion without stopping reactions. | For oxidoreductases, substrates like NAD(P)H (absorbance/fluorescence). For kinases/ATPases, coupled assays detecting ADP. |
| Rapid-Quench Flow Apparatus | For reactions too fast for continuous monitoring. Allows precise stopping of reactions at millisecond intervals for point-by-point data. | Essential for measuring rapid pre-steady-state kinetics of enzymatic or receptor-ligand binding events. |
| Computational Biomodels Database | Source of curated, peer-reviewed mathematical models of biological systems for validation and hypothesis testing. | Biomodels Database [1] provides reference models for nAChR and TbTS used in protocol development. |
| Kron Reduction & ODE Simulation Software | Performs the core mathematical operations: model reduction, simulation, and least squares optimization. | Custom MATLAB libraries (as provided in supplementary material of research) [1]. Alternatives: Python with SciPy, COPASI. |
| Parameter Identifiability Analysis Toolbox | Assesses whether parameters can be uniquely estimated from a given experimental design and observable set. | Tools like STRIKE-GOLDD (for nonlinear systems) or COMBOS help avoid ill-posed estimation problems [1]. |

Discussion: Integration into Broader Research

The Kron reduction-based method directly addresses the fundamental challenge of partial observability, a ubiquitous limitation in wet-lab biology and drug development. Its integration into a broader thesis on parameter estimation research opens several avenues:

- Connecting to Control Theory & Drug Dosing: Advanced control strategies for personalized medicine, such as Model Predictive Control (MPC) for diabetes management or closed-loop anesthesia [2], require precise, individualized models. The ability to estimate parameters from sparse clinical data (partial observability) is a critical enabling step.

- Synergy with Machine Learning Approaches: The method provides a physics-informed structure for parameter estimation. This contrasts with and can be complementary to purely data-driven chemogenomic approaches for predicting drug-target interactions [3]. Hybrid models could use Kron-reduced structures as foundational elements, with neural networks learning residual dynamics or unmodeled effects.

- Bridging Systems Biology and PK/PD Modeling: In drug development, translating in vitro kinetic parameters (estimated via these protocols) to predict in vivo pharmacokinetics and pharmacodynamics is key. The reduction framework can be extended to simplify complex full-body PBPK (Physiologically-Based Pharmacokinetic) models to core drug-target interaction modules for easier identification and validation.

Conclusion: The challenge of parameter estimation with partial observability is central to advancing quantitative systems biology and rational drug development. The detailed Application Notes and Protocols presented here, centered on the Kron reduction method, provide researchers and drug development professionals with a rigorous, practical framework to overcome this obstacle. By transforming ill-posed problems into tractable ones, this methodology enables the creation of predictive mathematical models from realistic, incomplete experimental data, thereby bridging the gap between theoretical systems biology and practical biomedical application.

The Kron reduction method serves as a powerful graph-theoretic tool for simplifying complex network models while preserving essential dynamic characteristics. Within the broader context of parameter estimation research, this technique transforms ill-posed estimation problems into well-posed ones by systematically reducing the model to only the observed variables [4]. This document provides detailed application notes and experimental protocols for employing Kron reduction in conjunction with optimization techniques, such as weighted least squares, to estimate parameters in kinetic models of biological systems, directly supporting drug development research [4].

Parameter estimation for kinetic models of chemical reaction networks (CRNs) is a fundamental challenge in systems biology and drug development. Frequently, experimental data are incomplete, with time-series concentrations available for only a subset of species in a network. This partial data renders the parameter estimation problem mathematically ill-posed [4]. The broader thesis of this research posits that Kron reduction is a critical enabling methodology for parameter estimation under such practical constraints.

Kron reduction operates on the graphical or matrix representation of a network. By performing a Schur complement on the admittance (or stoichiometric) matrix, it eliminates internal nodes and produces a simplified, equivalent network comprising only the boundary nodes of interest [4] [5]. For parameter estimation, its principal advantage is kinetics preservation: if the original CRN is governed by mass-action kinetics, the reduced model is also a mass-action CRN involving only the measured species [4]. This allows researchers to fit a well-defined reduced model to the available partial data and subsequently map the optimized parameters back to the original, full-scale model.

Methodology and Experimental Protocols

This section outlines the core three-stage protocol for parameter estimation using Kron reduction, as applied to kinetic models of biological networks [4].

Core Three-Stage Kron Reduction Workflow for Parameter Estimation

The following workflow is generalized for kinetic models of CRNs where time-series concentration data is available for a specific subset of species.

Protocol 1: General Framework for Parameter Estimation via Kron Reduction

Objective: To estimate unknown kinetic parameters in a full network model using partial time-series experimental data.

Input: A kinetic model (ODE system) for the full CRN with unknown parameters θ, and experimental concentration data for a target subset of species.

Output: Estimated parameter vector θ for the original full model.

Stage 1: Model Reduction via Kron Reduction

- Identify Sets: Partition all species (nodes) in the network into two sets:

- Boundary Nodes (B): Species for which experimental concentration data is available.

- Internal Nodes (I): All other species to be eliminated.

- Formulate System Matrices: Express the network's dynamics in a form amenable to reduction. For electrical network analogs, this is the admittance matrix. For CRNs, this involves the stoichiometric matrix and rate laws.

- Perform Kron Reduction: Compute the Schur complement to eliminate internal nodes. The result is a mathematically equivalent reduced model whose dynamic variables correspond exactly to the boundary node set B [4] [5]. The parameters of the reduced model are functions of the original parameters θ.

Stage 2: Parameter Estimation on the Reduced Model

- Define Objective Function: Formulate a least squares objective function comparing the experimental data for the B species against the trajectories simulated from the reduced model.

- Perform Optimization: Execute a (weighted) nonlinear least squares optimization to find the parameter values for the reduced model that minimize the error between simulation and experiment [4]. Weighting can be applied to account for varying scales or confidence in different measurements.

Stage 3: Back-Translation to Original Model

- Solve Inverse Problem: Using the optimized parameters from the reduced model, solve an inverse optimization problem to find the parameters θ of the original model. This minimizes a distance metric (e.g., a measure of dynamical difference) between the original model and the Kron-reduced model parameterized by the optimized values [4].

- Validation: Validate the final estimated parameters θ by simulating the full original model and comparing its predictions for the B species against the held-out experimental data.

Protocol Application: Case Study in Trypanosoma brucei Metabolism

Objective: Estimate kinetic parameters for the Trypanosoma brucei trypanothione synthetase reaction network using synthetic partial concentration data [4].

Specific Experimental Steps:

- Data Generation: Synthetic time-series data for a selected subset of metabolites (e.g., ATP, glutathione) are generated by simulating the full model with reference parameters.

- Model Reduction: The Kron reduction method is applied to the trypanothione synthetase ODE model, eliminating all unmeasured metabolite nodes.

- Optimization Setup: Both unweighted and weighted least squares techniques are configured. The weighted approach assigns weights based on the inverse variance of the synthetic data.

- Execution & Cross-Validation: The optimization is run. Leave-one-out cross-validation is used to determine whether weighted or unweighted least squares is more appropriate for this specific network [4].

- Analysis: The final training error and parameter identifiability are assessed.
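The data generation step above can be sketched as follows: simulate a full model with reference parameters, keep only the measured species through a selection matrix, and add Gaussian noise. The three-species linear chain, the rate values, and the 2 % noise level are hypothetical stand-ins for the trypanothione synthetase model, which is not reproduced here.

```python
import numpy as np
from scipy.integrate import solve_ivp

# Hypothetical full model: linear chain X1 <-> X2 <-> X3 with x' = -L x.
k12, k21, k23, k32 = 2.0, 1.0, 3.0, 0.5
L = np.array([
    [ k12,      -k21,   0.0],
    [-k12, k21 + k23,  -k32],
    [ 0.0,      -k23,   k32],
])

def full_rhs(t, x):
    return -L @ x

t_obs = np.linspace(0.0, 5.0, 30)
x0 = np.array([1.0, 0.0, 0.0])
sol = solve_ivp(full_rhs, (0.0, 5.0), x0, t_eval=t_obs, rtol=1e-8, atol=1e-10)

# Selection matrix C picks the measured species (here X1 and X3).
C = np.array([[1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]])
y_clean = (C @ sol.y).T                  # shape (30, 2)

# Additive Gaussian noise; the 2 % relative level is an arbitrary choice.
rng = np.random.default_rng(42)
noise_std = 0.02 * np.abs(y_clean).max(axis=0)
y_hat = y_clean + rng.normal(0.0, noise_std, size=y_clean.shape)
print(y_hat[:3])
```

Fixing the random seed keeps the synthetic dataset reproducible, which helps when comparing weighted and unweighted fits on identical data.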

Logical Workflow Diagram

The following diagram illustrates the logical flow of the three-stage parameter estimation protocol.

Diagram 1: Kron Reduction Parameter Estimation Workflow

Results and Data Analysis

Quantitative Performance of Estimation Methods

The following table summarizes the performance of unweighted vs. weighted least squares optimization within the Kron reduction framework for two biological case studies, as reported in the literature [4].

Table 1: Training Error Comparison for Kron-Based Parameter Estimation Methods

| Case Study (Chemical Reaction Network) | Unweighted Least Squares Training Error | Weighted Least Squares Training Error | Preferred Method (via Cross-Validation) |
|---|---|---|---|
| Nicotinic Acetylcholine Receptors [4] | 3.22 | 3.61 | Unweighted |
| Trypanosoma brucei Trypanothione Synthetase [4] | 0.82 | 0.70 | Weighted |

Structural Impact of Node Elimination

Kron reduction's graphical interpretation is the contraction of the network graph. Eliminating an internal node creates new direct edges between all its neighboring nodes, with weights recalculated to preserve the overall network dynamics [5].
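A small numerical example makes the contraction concrete. The sketch below eliminates the hub of a hypothetical weighted star graph; the Schur complement then connects every pair of former neighbors with weight w_i * w_j / sum(w), which is the classical star-mesh transform. The graph and its weights are illustrative assumptions.

```python
import numpy as np

# Hypothetical star graph: internal hub 0 linked to boundary nodes 1, 2, 3.
w = np.array([1.0, 2.0, 3.0])            # edge weights hub-1, hub-2, hub-3
L = np.zeros((4, 4))
L[0, 0] = w.sum()
for i, wi in enumerate(w, start=1):
    L[0, i] = L[i, 0] = -wi
    L[i, i] = wi

boundary, internal = [1, 2, 3], [0]
L_bb = L[np.ix_(boundary, boundary)]
L_bi = L[np.ix_(boundary, internal)]
L_ii = L[np.ix_(internal, internal)]
L_ib = L[np.ix_(internal, boundary)]

# Kron reduction: Schur complement with respect to the internal block.
L_red = L_bb - L_bi @ np.linalg.solve(L_ii, L_ib)

# Edge weights of the reduced graph are the negated off-diagonal entries.
new_weights = -L_red + np.diag(np.diag(L_red))
print(new_weights)
```

The boundary nodes had no direct edges before the reduction; afterwards they form a fully connected triangle, and the reduced matrix is still a Laplacian with zero row sums.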

Diagram 2: Network Graph Contraction via Kron Reduction

The Scientist's Toolkit: Research Reagent Solutions

This table details key computational tools and methodological concepts essential for implementing Kron reduction and related parameter estimation research.

Table 2: Key Reagents and Methods for Kron Reduction & Parameter Estimation

| Tool/Method | Type/Function | Application in Research |
|---|---|---|
| Kron Reduction (Schur Complement) | Graph-theoretic/Matrix Algorithm. Eliminates internal nodes from a network while preserving the electrical or dynamic equivalence between boundary nodes [4] [5]. | Core model simplification step to convert an ill-posed parameter estimation problem into a well-posed one. |
| Weighted/Unweighted Least Squares | Optimization Technique. Minimizes the sum of squared residuals between model predictions and experimental data to estimate parameters [4]. | Core optimization engine used to fit the Kron-reduced model to partial time-series data. |
| Leave-One-Out Cross-Validation | Model Validation Protocol. Sequentially uses all but one data point for training and the omitted point for testing to assess model generalizability [4]. | Used to select between weighted and unweighted least squares approaches for a given dataset. |
| KronRLS | Computational Method. A Kronecker Regularized Least Squares method for Drug-Target Interaction (DTI) prediction [6]. | Demonstrates the extension of Kronecker-based methods to bioinformatics and drug discovery, a related application field. |
| Parameter Identifiability Analysis | Theoretical Framework. Assesses whether model parameters can be uniquely determined from available output data [4]. | Critical pre-step to ensure the parameter estimation problem is well-defined before applying the Kron reduction workflow. |
| MATLAB/Python Libraries for Network Analysis | Software Environment. Provide built-in functions for matrix operations (Schur complement) and nonlinear optimization [4]. | Implementation platform for automating the three-stage workflow described in the protocols. |

Theoretical Foundations: Kron Reduction in Kinetic Systems

The Kron reduction method provides a mathematically rigorous framework for simplifying complex network models while preserving essential dynamic properties [7]. In the context of Chemical Reaction Networks (CRNs), its most critical feature is kinetics preservation. When the original network is governed by Mass Action Kinetics (MAK), the Kron-reduced model corresponds to another CRN whose dynamics are also described by MAK [4]. This is a distinctive advantage over other reduction techniques, which may not maintain the kinetic structure, leading to models that are not chemically interpretable.

The mathematical procedure partitions the system's stoichiometric and kinetic matrices. For a system with states partitioned into retained (A) and eliminated (B) species, the reduction of the dynamics is achieved through the Schur complement of the associated Laplacian or admittance matrix [8] [9]. The core operation is given by:

Y_red = Y_AA - Y_AB * (Y_BB)^-1 * Y_BA [9],

where Y represents the system matrix encoding connections and kinetics. This operation eliminates the internal states (B) while preserving the steady-state input-output relation between the retained species (A) [7]. The result is a lower-dimensional model whose parameters are functions of the original model's parameters, maintaining the DC-gain or zero-moment of the full-order system [8].
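The origin of the Schur complement and of the DC-gain property can be seen in a short derivation. It assumes linear dynamics of the form ( \dot{x} = -Yx + \text{inputs} ), with an external input ( u ) acting only on the retained species; this sign convention and the symbol ( u ) are assumptions introduced here for illustration.

```latex
\begin{aligned}
\dot{x}_A &= -Y_{AA}\,x_A - Y_{AB}\,x_B + u, \\
\dot{x}_B &= -Y_{BA}\,x_A - Y_{BB}\,x_B .
\end{aligned}
% Setting \dot{x}_B = 0 (eliminated states at quasi-steady state), with Y_{BB} invertible:
x_B = -Y_{BB}^{-1}\,Y_{BA}\,x_A .
% Substituting into the first equation:
\dot{x}_A = -\bigl(Y_{AA} - Y_{AB}\,Y_{BB}^{-1}\,Y_{BA}\bigr)\,x_A + u
          = -Y_{\mathrm{red}}\,x_A + u .
% At a steady state of the full system both derivatives vanish, which gives
Y_{\mathrm{red}}\,x_A = u ,
% the same steady-state relation as in the reduced model.
```

Under these assumptions the full and reduced models share the same steady-state map from ( u ) to ( x_A ), while their transient responses can differ.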

This property is paramount for parameter estimation, as it allows the transformation of an ill-posed problem (where data for some species is missing) into a well-posed one by reducing the model to only the species for which experimental time-series concentration data is available [4].

Application Notes & Protocols for Parameter Estimation

The following protocols integrate Kron reduction with optimization techniques to solve parameter estimation problems from partial experimental data. The overarching workflow is summarized in the diagram below.

Protocol 1: Core Parameter Estimation via Kron Reduction & Least Squares

Objective: To estimate unknown kinetic parameters of a mass-action CRN from partial time-series concentration data.

Materials: Time-course concentration data for a subset of species, a hypothesized full CRN model structure, computational software (e.g., MATLAB, Python with SciPy).

Procedure:

- Model Reduction: Apply Kron reduction to the full ODE model of the CRN. Eliminate all complexes/species for which no experimental data exists [4]. The output is a reduced ODE model where the state variables correspond exactly to the measured species.

- Formulate Estimation Problem: Let θ_red be the vector of parameters in the reduced model (which are functions of the original parameters θ). For time points t_k and measured concentrations y_exp(t_k), define the residuals as the difference between y_exp and model predictions y_model(t_k, θ_red).

- Optimization: Solve the nonlinear least-squares problem: min_θ_red Σ_k || y_exp(t_k) - y_model(t_k, θ_red) ||^2. Use a trust-region or Levenberg-Marquardt algorithm for robustness [4].

- Back-Translation & Refinement: Map the estimated θ_red back to the original parameter space to obtain an initial guess for θ. Optionally, perform a final global optimization on the original model, using θ_red estimates as constraints to ensure the Kron reduction-preserved property (e.g., DC-gain) is maintained [4].

- Validation: Employ leave-one-out cross-validation. Sequentially exclude a data point, estimate parameters on the remaining data, predict the excluded point, and calculate the prediction error [4].
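The validation step can be sketched as a leave-one-out loop around a Levenberg-Marquardt fit. The single-exponential model stands in for the Kron-reduced model, and the data, noise model, and function `loo_cv_error` are hypothetical. SciPy's `least_squares` with `method="lm"` is used as one possible Levenberg-Marquardt implementation.

```python
import numpy as np
from scipy.optimize import least_squares

def model(theta, t):
    """Hypothetical stand-in for the reduced model output y_model(t, theta_red)."""
    a, k = theta
    return a * np.exp(-k * t)

def loo_cv_error(t, y, weights, theta0):
    """Mean squared leave-one-out prediction error for a given weighting."""
    errors = []
    for i in range(len(t)):
        mask = np.arange(len(t)) != i             # drop data point i
        res = lambda th: weights[mask] * (model(th, t[mask]) - y[mask])
        fit = least_squares(res, x0=theta0, method="lm")
        errors.append((model(fit.x, t[i]) - y[i]) ** 2)
    return float(np.mean(errors))

rng = np.random.default_rng(2)
t = np.linspace(0.0, 5.0, 20)
sigma = 0.02 + 0.05 * model([2.0, 0.8], t)        # assumed noise std per point
y = model([2.0, 0.8], t) + rng.normal(0.0, sigma)

cv_unweighted = loo_cv_error(t, y, np.ones_like(t), theta0=[1.0, 1.0])
cv_weighted = loo_cv_error(t, y, 1.0 / sigma, theta0=[1.0, 1.0])
print(cv_unweighted, cv_weighted)
```

Both scores are computed as unweighted prediction errors on the held-out point, so they can be compared directly when choosing between the two weightings.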

Protocol 2: Workflow for Pharmacometric Application (e.g., PK/PD)

Objective: To integrate Kron-reduced system models with pharmacometric analysis for drug development, enhancing population PK/PD models with mechanistic detail while remaining identifiable [10] [11].

Materials: Clinical or preclinical PK/PD data, a QSP or systems pharmacology model, pharmacometric software (e.g., Pumas, NONMEM, Monolix) [10].

Procedure:

- Mechanistic Model Reduction: Identify the core signaling or metabolic pathway within a larger QSP network relevant to the drug's mechanism. Apply Kron reduction to this subsystem to obtain a simplified, kinetics-preserving module with fewer states [8].

- Model Coupling: Integrate the reduced mechanistic module into a standard pharmacometric framework. The reduced module's output (e.g., a signaling activity) serves as the driver for a PD effect model. This hybrid approach is shown in the diagram below.

- Hierarchical Estimation: Use the clinical PK/PD data to estimate:

- Population parameters for the PK and reduced mechanistic models.

- Inter-individual variability (IIV) on key parameters.

- Covariate effects linking patient factors to parameters in the reduced model [10].

- Simulation for Decision-Making: Use the validated hybrid model to simulate clinical trials, optimize dosing regimens, or predict outcomes in sub-populations [10] [11].

Case Studies & Data Presentation

The following case studies demonstrate the application and performance of the methodology.

Table 1: Parameter Estimation Performance in Case Studies [4]

| Case Study System | Original Model Complexity | Reduced Model Complexity | Estimation Method | Training Error (RMSE) | Key Preserved Property |
|---|---|---|---|---|---|
| Nicotinic Acetylcholine Receptor Kinetics | 7 states, 10 parameters | 4 states, 6 parameters | Unweighted Least Squares | 3.22 | Receptor activation time constant |
| Same as above | 7 states, 10 parameters | 4 states, 6 parameters | Weighted Least Squares | 3.61 | Receptor activation time constant |
| Trypanosoma brucei Trypanothione Synthetase | 8 states, 12 parameters | 5 states, 8 parameters | Unweighted Least Squares | 0.82 | Metabolic flux at steady state |
| Same as above | 8 states, 12 parameters | 5 states, 8 parameters | Weighted Least Squares | 0.70 | Metabolic flux at steady state |

Table 2: Impact of Reduction Strategy on Model Fidelity (Synthetic Example) [9]

| Reduction Scenario | Buses/Species Eliminated | Voltage/Concentration Max Error | Power Loss/Flux Mean Error | Computational Speed-up |
|---|---|---|---|---|
| Random Elimination | 7 of 14 | 12.5% | 8.7% | ~3x |
| Electrical Centrality-Based | 7 of 14 | 5.2% | 3.1% | ~3x |
| Loss-Aware Optimal (Proposed) | 7 of 14 | 2.8% | 1.5% | ~3x |

Note: Data adapted from power systems research [9], illustrating a universal principle: the choice of which states to eliminate (bus/species selection) is critical for preserving dynamic fidelity in the reduced model.

The Scientist's Toolkit

Essential reagents, software, and data required to implement the protocols.

Table 3: Research Reagent Solutions & Essential Materials

| Item | Specification / Example | Primary Function in Protocol |
|---|---|---|
| Time-Series Concentration Data | Partial dataset for key species. Must span dynamic phases (rise, peak, decay). | Serves as the target for fitting the Kron-reduced model. Quality dictates identifiability [4]. |
| Hypothesized Full CRN Model | System of ODEs based on biochemical knowledge. Includes all known interactions. | The starting point for structural reduction. Must be based on MAK or a compatible formalism [8] [4]. |
| Computational Environment | MATLAB with Optimization Toolbox; Python with SciPy, NumPy, and model reduction libraries. | Platform for performing matrix-based Kron reduction and executing least-squares optimization algorithms [4]. |
| Pharmacometric Software | Pumas, NONMEM, Monolix, or R with nlmixr2 [10]. | For population parameter estimation, covariate analysis, and simulation in drug development contexts [10] [11]. |
| Validation Dataset | Hold-out experimental data not used for estimation (e.g., from a different dose or condition). | Used for external validation of the predictive capability of the final, parameterized model. |
| Synthetic Data Generator | ODE solver capable of simulating the full model with added controlled noise. | For testing and validating the parameter estimation pipeline before applying it to real, noisy experimental data. |

The Challenge of Ill-Posed Estimation in Complex Networks: The analysis and control of modern, large-scale networked systems—from three-phase unbalanced power distribution grids to biological signaling pathways—are fundamentally challenged by high dimensionality and complexity. Detailed models with thousands of nodes make tasks like real-time optimal power flow, parameter estimation, and dynamic stability analysis computationally intractable or "ill-posed" for practical application [12]. An ill-posed problem here refers to one where the solution is highly sensitive to small perturbations in input data, computational resources are prohibitive, or the model is too complex for reliable inference.

Kron Reduction as a Structuring Principle: This article frames the Kron Reduction Method (KRM) not merely as a numerical simplification tool, but as a critical mathematical operation that restructures and regularizes ill-posed estimation problems into well-posed ones. By systematically eliminating internal nodes via the Schur complement of the network's admittance (or analogous coupling) matrix, KRM produces a reduced, equivalent model that preserves the external electrical behavior between retained nodes [13]. Within the broader thesis on parameter estimation research, this reduction is pivotal: it transforms an infeasible full-network parameter estimation into a tractable problem on a condensed model, provided the reduction itself is performed optimally to preserve fidelity.

Bridging Static and Dynamic Regimes: Traditional applications of Kron reduction often assume static conditions or perfect timescale separation between reduced and retained nodes [14]. However, for robust parameter estimation—especially in systems with fluctuating renewable generation or dynamic biological responses—this assumption can break down. A reduction that ignores the dynamics and correlated noise in eliminated nodes can lead to biased estimates and inaccurate uncertainty quantification [14]. Therefore, the core research thrust is to develop advanced, optimal Kron reduction frameworks that explicitly manage the trade-off between model complexity (well-posedness) and accuracy, even in dynamic regimes. The transition from an ill-posed to a well-posed estimation problem is achieved by strategically determining which nodes to eliminate and how to aggregate their influence, thereby creating a lower-dimensional but information-rich representation suitable for efficient and reliable parameter inference.

Mathematical Foundations: The Kron Reduction Operation

At its core, Kron reduction is an exact matrix operation based on the Schur complement. It is applied to a linear (or linearized) system description where the network coupling is defined by an admittance matrix Y (or a Laplacian matrix L). Consider a network whose node set is partitioned into retained nodes (𝒦) and eliminated nodes (ℛ). The system equation I = Y V is partitioned as [12]:

[I𝒦; Iℛ] = [Y𝒦𝒦 Y𝒦ℛ; Yℛ𝒦 Yℛℛ] [V𝒦; Vℛ]

Assuming zero current injection at nodes slated for elimination (I_ℛ = 0), the voltage V_ℛ can be expressed in terms of V_𝒦. Substituting this back yields the Kron-reduced model [12] [13]:

I𝒦 = Y_Kron V𝒦, where Y_Kron = Y𝒦𝒦 - Y𝒦ℛ Yℛℛ⁺ Yℛ𝒦
Here, ⁺ denotes the Moore-Penrose pseudoinverse, which handles singularities arising from missing phases or structural zeros [12]. The matrix Y_Kron is a dense, equivalent admittance matrix that captures the effective electrical coupling between the retained nodes, as if all eliminated nodes and their connecting infrastructure had been resolved into equivalent branches.
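The reduction above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the implementation used in [12] or [13]: it assumes the eliminated block is invertible (so an ordinary inverse stands in for the pseudoinverse ⁺) and removes nodes one at a time, which gives the same result as the block Schur complement in that case. The function name `kron_reduce` and the 4-node star network are hypothetical.

```python
def kron_reduce(Y, eliminate):
    """Kron-reduce a square matrix Y (list of lists) by removing the nodes
    listed in `eliminate`, one node at a time.

    Removing node k updates every remaining entry as
        Y[i][j] <- Y[i][j] - Y[i][k] * Y[k][j] / Y[k][k],
    i.e. a Schur complement with a 1x1 pivot block.
    """
    Y = [row[:] for row in Y]            # work on a copy
    keep = list(range(len(Y)))           # original indices still present
    for node in eliminate:
        k = keep.index(node)             # current position of that node
        pivot = Y[k][k]
        if pivot == 0:
            raise ValueError("zero pivot: this sketch needs an invertible block")
        rest = [i for i in range(len(Y)) if i != k]
        Y = [[Y[i][j] - Y[i][k] * Y[k][j] / pivot for j in rest] for i in rest]
        keep.pop(k)
    return Y, keep


# Hypothetical star network: hub node 0 joined to nodes 1, 2, 3 by
# conductances 1, 2 and 3 (Laplacian-style admittance matrix).
Y_star = [
    [6.0, -1.0, -2.0, -3.0],
    [-1.0, 1.0, 0.0, 0.0],
    [-2.0, 0.0, 2.0, 0.0],
    [-3.0, 0.0, 0.0, 3.0],
]
Y_kron, kept = kron_reduce(Y_star, eliminate=[0])

# Terminal equivalence check: pick retained voltages, reconstruct the hub
# voltage from the zero-injection condition, and compare the injections
# computed by the full and the reduced model.
V_K = [1.0, 0.5, 0.0]
V_hub = -sum(Y_star[0][j + 1] * V_K[j] for j in range(3)) / Y_star[0][0]
V_full = [V_hub] + V_K
I_full = [sum(Y_star[i][j] * V_full[j] for j in range(4)) for i in range(1, 4)]
I_red = [sum(Y_kron[i][j] * V_K[j] for j in range(3)) for i in range(3)]
```

Eliminating the hub turns the star into a fully connected (dense) triangle, which is the classical star-mesh transformation: each new branch conductance equals the product of the two star conductances divided by their total. This is a small instance of the densification noted above.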

Critical Implications for Estimation:

- Dimensionality Reduction: The state space for estimation shrinks from the dimension of all nodes to only the retained nodes (|𝒦|).

- Parameter Transformation: The physical parameters (line impedances, conductances) of the original network are transformed into the (often non-physical) entries of Y_Kron. Estimating the original parameters from the reduced model becomes an inverse problem that requires understanding this transformation.

- Ill-Posedness Source: The fundamental ill-posedness in network estimation often stems from collinearity and insufficient excitation across a vast network. Kron reduction, if done naively by eliminating critical observable nodes, can exacerbate this. The Opti-KRON framework addresses this by formulating the choice of 𝒦 as a mixed-integer linear program (MILP) that maximizes reduction while bounding the resulting voltage approximation error across a set of representative operating scenarios [12].

Table 1: Key Formulae in Kron Reduction

| Concept | Mathematical Expression | Interpretation in Estimation Context |

|---|---|---|

| Full Network Model | I = Y V | Original ill-posed problem: high-dimensional V, unknown parameters in Y. |

| System Partition | [I𝒦; 0] = [Y𝒦𝒦 Y𝒦ℛ; Yℛ𝒦 Yℛℛ] [V𝒦; Vℛ] | Separation into observed/estimated (𝒦) and eliminated (ℛ) subspaces. |

| Kron Reduction (Schur Complement) | Y_Kron = Y𝒦𝒦 - Y𝒦ℛ Yℛℛ⁺ Yℛ𝒦 | Core restructuring operation. Creates a well-posed, lower-dimensional equivalent model. |

| Reduced Model | I𝒦 = Y_Kron V𝒦 | Well-posed estimation problem: state dimension reduced to dim(V𝒦). Parameters are entries of Y_Kron. |

| Voltage Reconstruction | Vℛ = -Yℛℛ⁺ Yℛ𝒦 V𝒦 | Allows estimation of internal, non-instrumented node states from estimates at retained nodes. |

Application Notes: Power Systems as a Primary Use Case

The theoretical framework finds direct and critical application in power systems, which serve as an exemplary domain for testing parameter estimation methodologies.

1. Static Model Reduction for Steady-State Estimation: The Opti-KRON framework has been successfully extended to unbalanced three-phase distribution feeders [12]. The method clusters electrically similar nodes, assigning reduced nodes to a retained "super-node," and moves the net load of the cluster to that super-node before applying Kron reduction. This process preserves the radial topology through a radialization step. Validation on real utility feeders with 5,991 and 8,381 nodes demonstrated reductions of 90% and 80%, respectively, while maintaining a maximum voltage magnitude error below 0.003 per unit (p.u.) [12]. For parameter estimation, this means a model roughly 10 times smaller (90% reduction) or 5 times smaller (80% reduction) can be used to estimate grid parameters from supervisory control and data acquisition (SCADA) or phasor measurement unit (PMU) data with high fidelity, making the problem computationally well-posed.

2. Dynamic Model Reduction and its Perils: In dynamic studies (e.g., frequency stability), Kron reduction is commonly used to eliminate "fast" load buses, retaining only "slow" generator buses, based on an assumed timescale separation [14]. However, research shows this can be misleading. While noise/disturbances at the original load buses may be uncorrelated, their aggregate effect on the retained generator network through the reduction process can become correlated [14]. Ignoring the dynamics and stochastic forcing of the reduced subsystem leads to an inaccurate assessment of grid resilience and biased parameter estimates for generator dynamics. The Mori-Zwanzig formalism provides a rigorous method to incorporate the "memory" of the reduced fast dynamics into the equations for the slow variables, offering a path to a dynamically consistent well-posed reduction [14].

3. Loss-Aware Reduction for Parameter Sensitivity: Indiscriminate bus elimination can distort key system features like power loss profiles. An experimental study on the IEEE 14-bus system sequentially reduced the network from 14 to 7 buses under seven scenarios [9]. It integrated Kron's Loss Equation (KLE) with electrical centrality measures to guide elimination. The results starkly showed that poor reduction strategies could induce significant errors, whereas a loss-aware optimal reduction preserved the fidelity of both voltage profile and loss estimation, which is crucial for accurate parameter estimation related to line resistances and system efficiency [9].
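The correlated-noise effect described in item 2 can be illustrated in a purely static setting. If the eliminated nodes carry nonzero random injections I_ℛ, the retained rows of I = Y V read I_𝒦 = Y_Kron V_𝒦 + A I_ℛ with A = Y_𝒦ℛ Y_ℛℛ⁻¹, so independent disturbances with covariance Σ appear at the retained nodes with covariance A Σ Aᵀ, which is generally not diagonal. The sketch below assumes a hypothetical network of two generators and two loads with a diagonal Y_ℛℛ; it is not the Mori-Zwanzig treatment of [14] and ignores all dynamics.

```python
import math

# Hypothetical 2-generator / 2-load network. Y_KR holds the generator-load
# couplings; the loads are not directly connected, so Y_RR is diagonal here.
Y_KR = [[-1.0, -2.0],
        [-3.0, -1.0]]
Y_RR_diag = [4.0, 3.0]
noise_var = [1.0, 1.0]   # independent (uncorrelated) load disturbances

# A = Y_KR * inverse(Y_RR); with a diagonal Y_RR this is a column scaling.
A = [[Y_KR[i][r] / Y_RR_diag[r] for r in range(2)] for i in range(2)]

# Covariance of the load-induced term A * I_R seen at the generators:
# A * diag(noise_var) * A^T.
cov = [[sum(A[i][r] * noise_var[r] * A[j][r] for r in range(2))
        for j in range(2)] for i in range(2)]

# Correlation coefficient between the effective disturbances at the
# two generator buses (zero would mean they stay uncorrelated).
correlation = cov[0][1] / math.sqrt(cov[0][0] * cov[1][1])
```

The off-diagonal entry of `cov` is nonzero even though the load disturbances were independent, because both generators are coupled to both loads. A reduced model that drives each generator with independent noise would miss this shared component.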

Table 2: Performance of Kron Reduction in Power System Applications

| Application Context | Test System / Scale | Key Reduction Metric | Accuracy Preservation Metric | Source |

|---|---|---|---|---|

| Unbalanced Three-Phase Feeder (Steady-State) | Real utility feeders (5,991 & 8,381 nodes) | 90%, 80% node reduction | Max voltage error < 0.003 p.u. | [12] |

| Optimal Power Flow & Control | 1,000-node test feeder | GPU speedup: 15x faster than CPU | Voltage profile approximated with low error | [12] |

| Loss-Aware Steady-State Reduction | IEEE 14-bus system | Reduction to 7 buses (50% reduction) | Minimal deviation in loss calculation & voltage profile | [9] |

| Dynamic Stability Analysis | Synthetic & test grids | Retention of generator buses only | Highlights correlated noise injection from reduced loads; necessitates Mori-Zwanzig correction | [14] |

Diagram: Kron Reduction Restructures the Estimation Workflow.

Experimental Protocols for Validation and Benchmarking

Validating a Kron-based estimation pipeline requires protocols to assess both the reduction step and the subsequent parameter estimation step.

Protocol 1: Benchmarking Reduction Accuracy for Static Feeders

- Objective: Quantify the trade-off between reduction level and steady-state voltage/power flow accuracy.

- Materials: Full three-phase unbalanced feeder model (e.g., from IEEE test cases or utility data), power flow solver (e.g., OpenDSS, MATPOWER), Opti-KRON or similar reduction algorithm [12].

- Procedure:

- Baseline Simulation: Run an unbalanced three-phase power flow on the full model for a range of representative loading conditions (e.g., light, nominal, peak load).

- Apply Reduction: Execute the Kron reduction algorithm for a series of target reduction percentages (e.g., 70%, 80%, 90%). The Opti-KRON framework uses a MILP or exhaustive search to select the optimal set 𝒦 [12].

- Solve Reduced Model: For the same loading conditions, solve the power flow using the Kron-reduced admittance matrix Y_Kron.

- Quantify Error: Calculate the maximum and root-mean-square (RMS) error for voltage magnitude (p.u.) at all retained nodes across all loading scenarios. The performance target is often a max error < 0.01 p.u. [12].

- Radialization Check: Verify that the reduced network graph remains radial if the original feeder was radial [12].
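The error quantification step of Protocol 1 amounts to two summary statistics over all retained nodes and loading scenarios. A minimal sketch follows; the function name and the voltage values are hypothetical placeholders, and in practice both arrays would come from the power flow solver.

```python
import math

def voltage_error_metrics(v_full, v_reduced):
    """Return (max error, RMS error) of voltage magnitudes in p.u.

    Both arguments are lists of scenarios; each scenario is a list of
    voltage magnitudes at the retained nodes, in the same node order.
    """
    errors = [abs(a - b)
              for scen_full, scen_red in zip(v_full, v_reduced)
              for a, b in zip(scen_full, scen_red)]
    max_err = max(errors)
    rms_err = math.sqrt(sum(e * e for e in errors) / len(errors))
    return max_err, rms_err


# Hypothetical results for two loading scenarios and three retained nodes.
v_full = [[1.000, 0.985, 0.972],
          [1.000, 0.978, 0.961]]
v_reduced = [[1.000, 0.984, 0.974],
             [1.000, 0.980, 0.960]]

max_err, rms_err = voltage_error_metrics(v_full, v_reduced)
meets_target = max_err < 0.01   # performance target quoted in the protocol
```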

Protocol 2: Parameter Estimation on a Reduced Model

- Objective: Estimate the line parameters of a reduced network model from simulated measurement data and assess error propagation from the full network.

- Materials: Known full network model (as a "ground truth" simulator), reduced model from Protocol 1, simulated PMU/SCADA data (voltage & current phasors) with added Gaussian noise.

- Procedure:

- Data Generation: Use the full model simulator to generate time-series or multi-scenario data for voltages V(t) and current injections I(t) at the retained nodes only.

- Estimation Problem Formulation: Formulate a least-squares or maximum likelihood estimation problem to find Y_Kron that minimizes || I_measured(t) - Y_Kron * V_measured(t) ||².

- Solve and Reconstruct: Solve for Y_Kron. Optionally, use the known reduction mapping to "back out" estimates for the original line parameters (this is an ill-posed inverse problem).

- Validation: Compare the estimated Y_Kron against the "true" Y_Kron computed directly via Schur complement from the full model. Assess the accuracy of power flows calculated with the estimated model on a held-out test loading scenario.
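The estimation step of Protocol 2 is a linear least-squares problem: each row y_i of Y_Kron solves the normal equations (Σ_t V_t V_tᵀ) y_i = Σ_t I_t[i] V_t. The sketch below is a minimal, self-contained illustration under simplifying assumptions that are not part of the protocol: quantities are real-valued (phasor data would be complex), the data are noise-free, and the helper names `solve` and `estimate_y_kron` are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [A[i][:] + [b[i]] for i in range(n)]      # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("singular normal equations: insufficient excitation")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def estimate_y_kron(V_samples, I_samples):
    """Least-squares fit of Y in I = Y V from paired samples."""
    n = len(V_samples[0])
    # Gram matrix G = sum_t V_t V_t^T (shared by every row of Y).
    G = [[sum(v[a] * v[b] for v in V_samples) for b in range(n)]
         for a in range(n)]
    Y = []
    for i in range(n):
        # Right-hand side for row i: sum_t I_t[i] * V_t.
        c = [sum(cur[i] * v[a] for v, cur in zip(V_samples, I_samples))
             for a in range(n)]
        Y.append(solve(G, c))
    return Y


# Hypothetical "true" reduced matrix and three measurement scenarios.
Y_true = [[2.0, -0.5],
          [-0.5, 1.5]]
V_samples = [[1.0, 0.2], [0.3, 1.0], [0.8, 0.9]]
I_samples = [[sum(Y_true[i][j] * v[j] for j in range(2)) for i in range(2)]
             for v in V_samples]

Y_est = estimate_y_kron(V_samples, I_samples)
```

The singular-matrix check reflects the excitation issue raised earlier: if the voltage samples are nearly collinear, the Gram matrix becomes ill-conditioned and the estimate is poorly determined regardless of how small the model is.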


Protocol 3: Dynamic Consistency Evaluation using Mori-Zwanzig

- Objective: Evaluate the error incurred by standard Kron reduction in dynamic studies and demonstrate the correction offered by the Mori-Zwanzig formalism [14].

- Materials: Dynamic grid model (e.g., structure-preserving swing equations), synthetic colored noise sources for load buses, numerical ODE integrator.

- Procedure:

- Full Dynamic Simulation: Simulate the full network dynamics under stochastic load variations (modeled as time-correlated noise).

- Standard Kron Reduction: Eliminate load buses to create a generator-only model. Simulate its dynamics driven only by noise at generator buses.

- Mori-Zwanzig Reduction: Apply the Mori-Zwanzig formalism to derive a reduced model for generators that includes a memory kernel and colored effective noise representing the aggregated influence of reduced loads.

- Comparison: Compute the variance of frequency deviations at generator buses from all three simulations. The standard Kron-reduced model will underestimate the true variance, while the Mori-Zwanzig model should closely match the full model results, demonstrating its necessity for well-posed dynamic estimation [14].

Diagram: Protocol for Kron-Based Parameter Estimation.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools and Datasets for Kron Reduction Research

| Tool/Reagent | Function in Research | Example/Notes |

|---|---|---|

| Benchmark Network Datasets | Provide ground-truth models for developing and testing reduction algorithms. | IEEE test feeders (14, 33, 123-bus), real utility feeder models (e.g., 5,991-node feeder) [12], synthetic large-scale grids. |

| Power Flow & Simulation Engines | Generate accurate "measurement" data from full and reduced models for validation. | OpenDSS (for unbalanced distribution), MATPOWER & PYPOWER (for transmission), GridLAB-D. |

| Optimal Reduction Solver | Implements the core MILP or optimization for selecting the optimal set of retained nodes 𝒦. | Custom implementations of the Opti-KRON framework [12], using solvers like Gurobi, CPLEX, or MATLAB's intlinprog. |

| GPU-Accelerated Search Code | Enables exhaustive or large-scale heuristic search for optimal reduction clusters. | CUDA/OpenCL code as demonstrated in [12], achieving 15x speedup for 1000-node networks. |

| Mori-Zwanzig Formalism Code | Corrects standard Kron reduction for dynamic studies by incorporating memory effects. | Custom numerical code to compute the memory kernel and effective noise statistics as derived in [14]. |

| Parameter Estimation Suite | Solves the well-posed inverse problem on the reduced model. | MATLAB/Python with optimization toolboxes (e.g., scipy.optimize, lsqnonlin), Bayesian inference tools (Stan, PyMC). |

| Visualization & Analysis Toolkit | Analyzes topological changes, error distributions, and parameter sensitivity. | NetworkX (graph analysis), Matplotlib/Plotly (plotting), custom functions for visualizing Y-matrix sparsity patterns. |

The Kron reduction method serves as a powerful mathematical scaffold to transform ill-posed, high-dimensional network estimation problems into well-posed, tractable ones. By strategically applying the Schur complement, it restructures the problem domain, trading detailed internal resolution for actionable insight at the system level. However, this power must be wielded with care. As evidenced in power systems research, naive reduction distorts loss profiles [9] and standard dynamic reduction introduces correlated noise and underestimates variance [14].

The path forward for robust parameter estimation lies in advanced reduction frameworks like Opti-KRON, which optimize the trade-off, and theoretically grounded corrections like the Mori-Zwanzig formalism, which ensure dynamic consistency. Future research within this thesis will likely focus on:

- Integrating machine learning with Kron reduction to learn optimal reduction patterns for specific estimation tasks.

- Extending these principles beyond power engineering to biological network inference and large-scale cyber-physical system identification, where similar challenges of dimensionality and partial observability prevail.

- Developing open-source, standardized toolkits that integrate optimal reduction, dynamic correction, and parameter estimation into a seamless workflow for the research community.

Ultimately, moving "From Ill-Posed to Well-Posed" is not an automatic result of applying Kron reduction, but the outcome of a deliberate, optimized, and theoretically informed process of model restructuring, which lies at the heart of modern parameter estimation research for complex networks.

A Step-by-Step Workflow: Implementing Kron Reduction for Parameter Estimation

The construction of a predictive kinetic model is a cornerstone of quantitative systems biology and drug development, enabling the simulation of complex biochemical network behavior over time. This process is intrinsically linked to the broader challenge of parameter estimation, where unknown rate constants, binding affinities, and other kinetic parameters must be deduced from experimental observations. The Kron reduction method offers a powerful mathematical framework to address a common, ill-posed scenario in this field: estimating parameters when experimental data is available only for a subset of chemical species in the network, not all [4]. This application note details the critical first step in this pipeline—precisely defining the original kinetic model and systematically identifying which species can be reliably measured. This foundational work directly informs the subsequent application of Kron reduction, which preserves the kinetic structure of the original system while creating a reduced model whose variables correspond exclusively to the measured species, thereby transforming an ill-posed into a well-posed estimation problem [4].

- The Need for Kinetic Models: Genome-scale metabolic models often operate at steady-state and cannot predict dynamic cellular responses to stimuli, such as drug treatments [15]. Kinetic models, defined by systems of ordinary differential equations (ODEs), bridge this gap by describing the time-dependent evolution of metabolite concentrations, enzyme activities, and signaling fluxes [15]. They are essential for understanding mechanism, predicting cellular physiology, and optimizing biotechnological and therapeutic interventions.

- The Parameter Estimation Challenge: A kinetic model's parameters are frequently unknown and must be inferred from experimental data. The problem becomes mathematically ill-posed when time-series concentration data is available for only some species (partial experimental data), as there is no unique mapping from the limited observations to the full set of model parameters [4]. Traditional sampling-based methods can be computationally inefficient, often generating large populations of models that are dynamically inconsistent with observed physiology [15].

- Kron Reduction as a Solution: The Kron reduction method is a model reduction technique uniquely suited for kinetic networks governed by laws like mass action kinetics. Its key advantage is kinetics preservation; it produces a reduced, simpler network that is itself a valid chemical reaction network with the same kinetic formalism [4]. By reducing the model to only the nodes (species) for which data exists, it enables the application of standard parameter fitting techniques, such as weighted least squares optimization, to a now well-defined problem [4].

Defining the Original Kinetic Model

The "original kinetic model" is a precise mathematical representation of the biochemical system under study. Its definition requires integrating prior biological knowledge with a structured mathematical formulation.

Core Components and Mathematical Representation

A kinetic model is typically defined by a system of coupled ODEs, where the rate of change of each species' concentration is a function of the concentrations of other species and a set of kinetic parameters.

Fundamental Reaction Rate Definition: The rate of a chemical reaction is determined by measuring the amount of products formed or reagents consumed over time in a controlled reactor (e.g., batch, continuous stirred-tank) [16]. For a chemical reaction network (CRN), this translates into a kinetic law (rate law) for each reaction, such as Michaelis-Menten for enzymatic reactions or mass action kinetics for elementary steps [16] [17].

Generalized Model Formulation:

For a network with m species and r reactions, the system dynamics are:

dX/dt = N * v(X, k)

Where:

- X is the m-dimensional vector of species concentrations.

- N is the m x r stoichiometric matrix, encoding how each reaction consumes and produces each species.

- v(X, k) is the r-dimensional vector of reaction rates (fluxes).

- k is the vector of unknown kinetic parameters (e.g., k_cat, K_M, forward/backward rate constants).
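As a concrete instance of dX/dt = N * v(X, k), the sketch below writes out the stoichiometric matrix and mass action rate vector for the enzyme mechanism E + S ⇌ ES → E + P and integrates it with a fixed-step fourth-order Runge-Kutta scheme. The rate constants, initial concentrations, and step size are hypothetical values chosen for illustration, and a production workflow would normally use an adaptive ODE solver instead.

```python
# Species order: E, S, ES, P. Reactions: binding, unbinding, catalysis.
N = [
    [-1, 1, 1],    # E
    [-1, 1, 0],    # S
    [1, -1, -1],   # ES
    [0, 0, 1],     # P
]

def rates(x, k):
    """Mass action rate vector v(X, k)."""
    E, S, ES, P = x
    k_on, k_off, k_cat = k
    return [k_on * E * S, k_off * ES, k_cat * ES]

def rhs(x, k):
    """Right-hand side dX/dt = N * v(X, k)."""
    v = rates(x, k)
    return [sum(N[i][j] * v[j] for j in range(len(v))) for i in range(len(N))]

def rk4_step(x, k, dt):
    """One classical Runge-Kutta step of size dt."""
    def shifted(d, scale):
        return [xi + scale * di for xi, di in zip(x, d)]
    s1 = rhs(x, k)
    s2 = rhs(shifted(s1, dt / 2), k)
    s3 = rhs(shifted(s2, dt / 2), k)
    s4 = rhs(shifted(s3, dt), k)
    return [xi + dt / 6 * (a + 2 * b + 2 * c + d)
            for xi, a, b, c, d in zip(x, s1, s2, s3, s4)]

def simulate(x0, k, dt, n_steps):
    """Return the trajectory as a list of state vectors."""
    traj = [list(x0)]
    for _ in range(n_steps):
        traj.append(rk4_step(traj[-1], k, dt))
    return traj

k = (1.0, 0.5, 0.3)           # hypothetical k_on, k_off, k_cat
x0 = [1.0, 10.0, 0.0, 0.0]    # hypothetical initial E, S, ES, P
traj = simulate(x0, k, dt=0.01, n_steps=1000)
x_end = traj[-1]
```

Because the rows of N for E and ES sum to zero, total enzyme E + ES is conserved along the trajectory, and likewise S + ES + P. Checking such conservation relations is a quick sanity test that N was entered correctly.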

Example: Michaelis-Menten Kinetics

For the canonical enzyme-catalyzed reaction E + S ⇌ ES → E + P, the Michaelis-Menten model makes several assumptions: initial velocity conditions, steady-state for the ES complex, and substrate concentration much greater than enzyme concentration [17]. The resulting rate law is:

v = (V_max * [S]) / (K_M + [S])

where V_max = k_cat * [E_total] and K_M = (k_off + k_cat)/k_on. The parameters to estimate are k_cat and K_M [17].

Diagram 1: Michaelis-Menten Reaction Mechanism. Visualizes the elementary steps of enzyme catalysis, highlighting the associated kinetic parameters (k_on, k_off, k_cat) that must be defined in the original model [17].
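The Michaelis-Menten rate law and the relation between K_M and the elementary rate constants can be written down directly. The following sketch uses hypothetical parameter values; the function names are illustrative and not taken from any cited toolbox.

```python
def michaelis_menten_rate(s, k_cat, e_total, k_m):
    """v = V_max * [S] / (K_M + [S]) with V_max = k_cat * [E_total]."""
    v_max = k_cat * e_total
    return v_max * s / (k_m + s)

def k_m_from_elementary(k_on, k_off, k_cat):
    """K_M = (k_off + k_cat) / k_on."""
    return (k_off + k_cat) / k_on

# Hypothetical elementary rate constants and total enzyme concentration.
k_on, k_off, k_cat = 1.0, 0.5, 0.3
e_total = 1.0

k_m = k_m_from_elementary(k_on, k_off, k_cat)
v_half = michaelis_menten_rate(k_m, k_cat, e_total, k_m)      # rate at [S] = K_M
v_sat = michaelis_menten_rate(1e6 * k_m, k_cat, e_total, k_m)  # near saturation
```

At [S] = K_M the rate equals half of V_max, and at large [S] it approaches V_max, which is the usual way to read the two parameters off an initial-velocity curve.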

Sourcing and Curating Prior Knowledge

Constructing the original model is a bottom-up process that begins with draft reconstruction from diverse data sources [4]:

- Literature & Databases: Extract known interactions, stoichiometries, and putative rate constants from resources like the Biomodels database [4], BRENDA, or published kinetic studies.

- Omics Data: Transcriptomics or proteomics can inform which pathways are active. Thermodynamic data (e.g., reaction reversibility, Gibbs free energy) constrain feasible flux directions [15].

- Manual Curation: A critical step to insert missing information, resolve inconsistencies, and remove irrelevant interactions based on the specific biological context [4].

Identifying and Characterizing Measured Species

The selection of which species to measure is not arbitrary; it is a strategic decision that dictates the feasibility and success of the subsequent Kron reduction and parameter estimation.

Criteria for Selecting Measured Species

The goal is to choose a set of species whose time-course data will provide maximal information for constraining the model's unknown parameters.

- Critical Nodes: Species that are hubs in the network (e.g., key metabolites like ATP, signaling molecules like cAMP) or the direct inputs/outputs of the process being studied.

- Observability: The selected species should collectively make the system's internal state "observable." In practice, they should be distributed across different pathways and time scales.

- Experimental Feasibility: A species must be reliably quantifiable with available assays. This is the primary practical constraint.

Experimental Techniques for Measurement

The choice of technique depends on the species' chemical nature (metabolite, protein, mRNA) and required temporal resolution.

- Metabolomics: LC-MS or GC-MS for quantifying metabolite concentrations over time.

- Proteomics & Phosphoproteomics: To track protein abundance or post-translational modification states (e.g., phosphorylation).

- Biosensors: Genetically-encoded FRET-based biosensors for real-time, live-cell measurement of specific ions or metabolites.

- Advanced Genotypic Identification: For studies involving microbial communities or host-pathogen systems, accurately identifying the measured species' biological source is paramount. CRISPR-Cas13a-based SHERLOCK assays provide a rapid, sensitive, and extraction-free method for genetic species identification directly in field or lab samples, crucial for ensuring data integrity [18].

Protocol: Designing a Time-Series Measurement Experiment

This protocol outlines the steps for generating the partial experimental dataset required for Kron reduction-based parameter estimation.

Objective: To collect quantitative, time-series concentration data for a predefined subset of species from a perturbed biochemical system.

Materials:

- Biological System: Cell culture, purified enzyme mix, tissue sample.

- Perturbation Agent: Drug, nutrient shift, genetic inducer/repressor.

- Quenching Solution: Cold methanol or specialized solution to instantly halt metabolism.

- Extraction Buffers: Appropriate for target analytes (metabolites, proteins, RNA).

- Analytical Platform: Mass spectrometer, HPLC, fluorescence plate reader, qPCR machine.

- Internal Standards: Isotopically labeled analogs for absolute quantification (e.g., for MS).

Procedure:

- System Preparation: Bring the biological system to a defined baseline steady-state or initial condition.

- Perturbation & Time-Course Sampling:

  a. Apply the perturbation at time t=0.

  b. At predetermined time points (e.g., 0, 15 sec, 30 sec, 1 min, 5 min, 15 min, 60 min), rapidly withdraw aliquots and immediately quench metabolism.

  c. For genetic species identification in mixed samples (e.g., microbiome studies), simultaneously preserve a separate aliquot for SHERLOCK analysis using an extraction-free protocol [18].

- Sample Processing:

  a. Extract analytes from quenched samples.

  b. Derivatize if necessary for detection.

  c. Add internal standards.

- Analysis & Data Normalization:

  a. Run samples on the analytical platform.

  b. Quantify peak areas/concentrations relative to standards.

  c. Normalize data to protein content, cell count, or a stable endogenous control.

- Data Curation: Format the final dataset as a matrix, with rows for time points and columns for the measured species concentrations.

Integration with the Kron Reduction Parameter Estimation Workflow

The outputs of Steps 1 and 2 become the direct inputs for the mathematical procedure of Kron reduction.

Diagram 2: Kron Reduction Parameter Estimation Workflow. Illustrates the sequential process where defining the model and identifying measured species are prerequisites for the reduction and estimation steps [4].

- From Original to Reduced Model: The original model (dX/dt = N v(X, k)) is partitioned into measured (X_m) and unmeasured (X_u) species. Kron reduction mathematically eliminates X_u, producing a new, smaller system of ODEs: dX_m/dt = N_red * v_red(X_m, k_red), where k_red is a function of the original parameters k [4].

- Solving the Well-Posed Problem: With experimental data for X_m(t) and a model for dX_m/dt, standard least squares optimization (weighted or unweighted) is used to find k_red that minimizes the difference between model prediction and data [4].

- Case Study Evidence: Research demonstrates this method's efficacy. For example, in estimating parameters for a Trypanosoma brucei trypanothione synthetase model from partial data, the application of weighted least squares with Kron reduction resulted in a low training error of 0.70 [4].
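How k_red depends on the original parameters can be seen on the smallest possible case. The sketch below assumes a hypothetical monomolecular chain A ⇌ B ⇌ C with only A and C measured, writes its mass action dynamics as dx/dt = -L x with L a weighted Laplacian (the sign convention is an assumption of this sketch), and eliminates B by a Schur complement. It is not the MATLAB library of [4], and general networks with multi-species complexes need the fuller treatment described there.

```python
def eliminate_species(L, u):
    """Kron-reduce the Laplacian L (list of lists) by removing index u."""
    pivot = L[u][u]
    rest = [i for i in range(len(L)) if i != u]
    return [[L[i][j] - L[i][u] * L[u][j] / pivot for j in rest] for i in rest]

# Hypothetical rate constants: A->B (k1), B->A (k2), B->C (k3), C->B (k4).
k1, k2, k3, k4 = 2.0, 1.0, 3.0, 0.5

# dx/dt = -L x for species order (A, B, C); each column of L sums to zero.
L_full = [
    [k1, -k2, 0.0],
    [-k1, k2 + k3, -k4],
    [0.0, -k3, k4],
]

# Eliminate the unmeasured species B (index 1); remaining order is (A, C).
L_red = eliminate_species(L_full, u=1)

# The reduced matrix is again a Laplacian, so it describes the mass action
# network A <-> C; its rate constants are read off the off-diagonal entries.
k_AC = -L_red[1][0]   # equals k1 * k3 / (k2 + k3)
k_CA = -L_red[0][1]   # equals k2 * k4 / (k2 + k3)
```

The reduced network has two parameters where the original had four, and both are explicit functions of k1 through k4. Fitting k_AC and k_CA to time courses of A and C is then an ordinary least squares problem on measured species only.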

Table 1: Performance of Kron Reduction with Least Squares Estimation

| Case Study Network | Unweighted Least Squares Training Error | Weighted Least Squares Training Error | Preferred Method (via Cross-Validation) |

|---|---|---|---|

| Nicotinic Acetylcholine Receptors [4] | 3.22 | 3.61 | Unweighted |

| Trypanosoma brucei Trypanothione Synthetase [4] | 0.82 | 0.70 | Weighted |
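The two error columns in the table correspond to the same sum-of-squares objective evaluated with and without per-species weights. A minimal sketch follows, with data laid out as rows of time points and columns of measured species. The weighting scheme shown (inverse squared maximum of each species' data) is an assumption chosen for illustration and may differ from the one used in [4]; all numbers are hypothetical.

```python
def least_squares_error(data, prediction, weights=None):
    """Sum over time points and species of w_j * (data - prediction)^2.

    data, prediction: lists of rows (time points) by columns (species).
    weights: one weight per species; None means unweighted (all ones).
    """
    n_species = len(data[0])
    if weights is None:
        weights = [1.0] * n_species
    return sum(
        weights[j] * (d_row[j] - p_row[j]) ** 2
        for d_row, p_row in zip(data, prediction)
        for j in range(n_species)
    )

def inverse_max_squared_weights(data):
    """Assumed scheme: w_j = 1 / (max over time of species j)^2."""
    return [1.0 / max(row[j] for row in data) ** 2
            for j in range(len(data[0]))]

# Hypothetical measurements and model predictions (3 time points, 2 species).
data = [[1.0, 10.0], [0.8, 12.0], [0.6, 13.0]]
prediction = [[1.0, 10.5], [0.7, 12.0], [0.6, 12.0]]

unweighted = least_squares_error(data, prediction)
weights = inverse_max_squared_weights(data)
weighted = least_squares_error(data, prediction, weights)
```

Weighting keeps a species measured on a large concentration scale from dominating the fit. Because the two objectives are on different scales, their values are not directly comparable, which is one reason to choose between them by cross-validation as in the table above.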

Table 2: Key Research Reagent Solutions for Kinetic Modeling & Measurement

| Item | Function/Description | Relevance to Step 1 |

|---|---|---|

| SKiMpy / ORACLE Toolbox [15] | A computational toolbox for building, reducing, and sampling kinetic models. The ORACLE framework can generate populations of kinetic parameters for training. | Used to construct the original mathematical model and generate initial parameter sets for analysis. |

| MATLAB Kron Reduction Library [4] | A custom library for performing the Kron reduction of ODE-based kinetic models and subsequent parameter estimation. | Essential software for executing the mathematical core of the workflow after model definition. |

| CRISPR-Cas13a (SHERLOCK Assay) [18] | A specific, sensitive, isothermal nucleic acid detection system for genetic identification. Enables extraction-free, rapid species confirmation. | Critical for accurately identifying the biological source of measured samples in complex environments, ensuring data integrity. |

| DNeasy Blood & Tissue Kit [18] | Standardized silica-membrane column for high-quality genomic DNA extraction. | Provides purified DNA as input for downstream genotypic identification or sequencing. |

| Recombinase Polymerase Amplification (RPA) Kit [18] | Isothermal, rapid DNA amplification technology. Used in SHERLOCK for pre-amplifying target sequences. | Enables sensitive detection of genetic markers from low-abundance samples without complex thermocycling. |

| ILLMO Software [19] | Interactive statistical software for modern model comparison, effect size estimation with confidence intervals, and analysis of ordinal (e.g., Likert scale) data. | Useful for the statistical design of experiments and robust comparison of model predictions against experimental data post-estimation. |

| Qubit dsDNA Assay [18] | Highly specific fluorescent assay for accurate DNA quantification. | Ensures precise input amounts for genetic assays and sequencing library preparation. |

Foundational Assumptions and Best Practices

Table 3: Key Assumptions in Common Kinetic Modeling Frameworks

| Assumption | Description | Implication for Model Definition & Measurement |

|---|---|---|

| Michaelis-Menten Steady-State [17] | The enzyme-substrate complex [ES] is constant over the measurement period. | Valid for initial velocity measurements where [S] >> [E]. Defines the form of the rate law. |

| Well-Mixed System (Spatial Homogeneity) | Concentrations are uniform throughout the reaction volume (e.g., in a stirred cuvette or CSTR) [16]. | Justifies the use of ODEs without spatial terms. Measurements should be designed to maintain homogeneity. |

| Mass Action Kinetics | The reaction rate is proportional to the product of the concentrations of the reactants. | Often assumed for elementary steps. Defines the structure of the v(X, k) function in the ODEs. |

| Time-Scale Separation (for Model Reduction) | Some reactions are much faster than others, allowing quasi-steady-state approximations. | Can simplify the original model before Kron reduction. Guides the choice of time points for sampling. |

Best Practices Summary:

- Start Simple: Begin with a core, well-characterized sub-network before expanding to a larger model.

- Document Data Provenance: Meticulously record the source of every interaction and parameter in the original model.

- Pilot Experiments: Conduct small-scale time-course experiments to verify assay performance and inform the design of the full experiment (e.g., defining critical early time points).

- Validate Assumptions: Test for conditions like constant enzyme concentration or substrate excess during experiments to ensure the chosen kinetic law is valid.

- Embrace Iteration: Model definition and parameter estimation are iterative. Initial results often necessitate refining the original model structure and repeating the cycle [4].

The Kron reduction method serves as a critical technique for transforming ill-posed parameter estimation problems into well-posed ones within complex network systems. In the broader context of thesis research on parameter estimation, this mathematical tool enables the systematic simplification of high-dimensional models—such as those describing electrical power grids or biochemical reaction networks—while preserving the essential dynamics between observed variables [4]. The core challenge in parameter estimation for these systems often stems from incomplete experimental data, where only a subset of species or node states can be measured [4]. Traditional direct estimation becomes computationally infeasible or mathematically non-unique under these conditions. Kron reduction addresses this by eliminating unobserved or internal states from the network model through a structured matrix operation, resulting in a reduced model whose variables correspond directly to the available measurements. This process not only retains the physical interpretability of parameters—a significant advantage over purely numerical projection methods [20]—but also ensures that the kinetics or dynamics governing the original system are preserved in the simplified model [4]. Consequently, applying Kron reduction creates a computationally tractable and observable framework, forming a foundational step for subsequent robust parameter identification and model validation in scientific research.

Foundational Application Notes

Kron reduction is a graph-theoretic Schur complement operation applied to the weighted Laplacian matrix of a network. Its primary function is to eliminate a subset of nodes while preserving the exact electrical or dynamic relationships between the remaining nodes [21]. This property makes it invaluable for creating simplified, observable networks for parameter estimation.

2.1. Core Principles and Mathematical Basis

The method operates on the admittance matrix (Y-bus) in power systems or on the weighted Laplacian matrix of the reaction graph in chemical reaction networks. For a network partitioned into retained (A) and eliminated (B) nodes, the reduced admittance matrix is calculated as:

Y_reduced = Y_AA - Y_AB * (Y_BB)^-1 * Y_BA [9].

This formulation ensures equivalence at the terminals of the retained nodes. A key advantage in parameter estimation contexts is its structure-preserving nature; unlike balanced truncation or modal approximation, Kron reduction maintains the physical meaning of variables and parameters in the reduced model [20] [4]. This is crucial for researchers who must interpret estimated parameters within a real-world biological or physical context.

2.2. Key Applications in Research Fields

- Power Systems Engineering: Kron reduction is extensively used for network simplification in transient stability assessment and smart grid monitoring [21]. It facilitates accelerated simulation of future grids with high penetrations of renewable, power-electronics-interfaced resources by reducing model order while capturing essential fast transients [20].

- Systems Biology and Chemical Kinetics: For chemical reaction networks (CRNs) governed by mass action kinetics, Kron reduction produces a reduced CRN whose variables are a subset of the original species. Critically, the reduced network also adheres to mass action kinetics, allowing parameters in the reduced model to be expressed as functions of the original parameters [4]. This enables parameter estimation from partial concentration data.

- General Network Analysis: The technique provides a graph-theoretic foundation for simplifying interconnected linear systems across various domains, preserving the resistive distance (effective resistance) between remaining nodes [21].
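The preservation of effective resistance mentioned in the last item can be checked numerically. The sketch below assumes a small, arbitrary weighted graph; the edge list, the helper names, and the pseudoinverse-based formula R_ij = (e_i - e_j)^T L^+ (e_i - e_j) are choices made for this illustration.

```python
import numpy as np

def kron_reduce(L, keep):
    """Schur complement of L onto the retained node indices `keep` (hypothetical helper)."""
    keep = np.asarray(keep)
    elim = np.setdiff1d(np.arange(L.shape[0]), keep)
    L_AA = L[np.ix_(keep, keep)]
    L_AB = L[np.ix_(keep, elim)]
    L_BA = L[np.ix_(elim, keep)]
    L_BB = L[np.ix_(elim, elim)]
    return L_AA - L_AB @ np.linalg.solve(L_BB, L_BA)

def effective_resistance(L, i, j):
    """Effective resistance between nodes i and j from the Laplacian pseudoinverse."""
    L_pinv = np.linalg.pinv(L)
    return L_pinv[i, i] + L_pinv[j, j] - L_pinv[i, j] - L_pinv[j, i]

# Assumed example graph: (node, node, conductance) triples on 4 nodes.
edges = [(0, 1, 2.0), (1, 2, 1.0), (2, 3, 4.0), (0, 3, 0.5), (1, 3, 1.5)]
L = np.zeros((4, 4))
for i, j, w in edges:
    L[i, i] += w
    L[j, j] += w
    L[i, j] -= w
    L[j, i] -= w

L_red = kron_reduce(L, keep=[0, 3])          # eliminate nodes 1 and 2

R_full = effective_resistance(L, 0, 3)       # in the original graph
R_red = effective_resistance(L_red, 0, 1)    # node 3 is index 1 after reduction
print(R_full, R_red)
```

The two printed values agree to numerical precision, which is what makes the reduced network a faithful stand-in for the original as seen from the retained nodes.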

Quantitative Comparison of Kron Reduction Applications

The utility of Kron reduction varies across disciplines, with differing priorities for accuracy, preservation properties, and computational gain. The following table summarizes its application in two primary research domains relevant to parameter estimation.

Table 1: Comparative Analysis of Kron Reduction Applications

| Application Domain | Primary Objective | Key Metric for Fidelity | Typical Reduction Ratio | Preserved Properties |
|---|---|---|---|---|
| Power System Modeling (e.g., IEEE 14-bus) [20] [9] | Accelerate transient simulation & real-time control | Voltage profile deviation, power loss error [9] | 14 buses → 7 buses (50%) [9] | Terminal electrical behavior, network topology |
| Kinetic Model Parameter Estimation (e.g., CRNs) [4] | Enable estimation from partially observed data | Dynamical difference (e.g., settling time), fit to concentration data [4] | Varies by network complexity | Mathematical structure, mass action kinetics |

Experimental Protocols for Network Reduction and Estimation

This section provides detailed, actionable protocols for applying Kron reduction in two key research scenarios: power system simplification and parameter estimation for kinetic models.

4.1. Protocol for Optimal Bus Elimination in Power Systems

This protocol, based on the IEEE 14-bus system benchmark, details a loss-aware reduction strategy to maintain model fidelity [9].

- Objective: To systematically eliminate passive (non-generator, non-critical load) buses from a power network to reduce computational complexity while minimizing error in voltage profiles and power loss calculations.

- Materials & Initialization:

  - Obtain the full network's bus admittance matrix (Y_bus).

  - Define the set of buses to retain (A: typically generator, critical load, and observation points) and to eliminate (B).

  - Calculate the initial power flow to establish baseline voltage and loss metrics.

- Procedure:

  1. Partition the Y_bus matrix according to sets A and B: Y_bus = [[Y_AA, Y_AB], [Y_BA, Y_BB]].

  2. Compute the Kron-reduced admittance matrix for the retained buses: Y_reduced = Y_AA - Y_AB * inv(Y_BB) * Y_BA [9].

  3. Re-calculate the power flow using the Y_reduced matrix.

  4. Quantify the deviation by comparing the voltage magnitudes and angles at retained buses, and total system losses, against the baseline full-model results.

  5. Iterate the elimination process (Steps 1-4) for different subsets of eliminated buses (B). Prioritize elimination based on electrical centrality (e.g., low connectivity, passive nodes).

  6. Integrate Kron’s Loss Equation (KLE) as a sensitivity metric to guide the selection of which bus to eliminate next, favoring those with minimal impact on calculated system losses [9].

- Output Analysis: Identify the optimal reduction threshold where the increase in voltage deviation or loss error exceeds a pre-defined tolerance (e.g., 2%). The sequence leading to this threshold represents the optimal loss-aware reduction strategy.
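Steps 1 to 4 of this protocol can be sketched on a toy network. The example below is an illustration under stated assumptions, not the procedure of [9]: the four-bus topology, branch and shunt admittances, and current injections are invented, and a linear nodal solve (I = Y V) stands in for the nonlinear AC power flow that a tool such as MATPOWER would perform. The KLE-guided selection of Step 6 is not implemented.

```python
import numpy as np

def build_ybus(n, branches, shunts):
    """Assemble a bus admittance matrix from branch and shunt admittances (hypothetical helper)."""
    Y = np.zeros((n, n), dtype=complex)
    for i, j, y in branches:
        Y[i, i] += y
        Y[j, j] += y
        Y[i, j] -= y
        Y[j, i] -= y
    for i, y in shunts.items():
        Y[i, i] += y
    return Y

# Assumed 4-bus ring: (from, to, series admittance) and small shunts at buses 0 and 3.
branches = [(0, 1, 2 - 6j), (1, 2, 1 - 3j), (2, 3, 1.5 - 4.5j), (0, 3, 1 - 2j)]
Y_bus = build_ybus(4, branches, shunts={0: 0.05j, 3: 0.05j})

A = [0, 3]   # retained buses
B = [1, 2]   # passive buses to eliminate (zero current injection)

# Step 1: partition Y_bus.
Y_AA, Y_AB = Y_bus[np.ix_(A, A)], Y_bus[np.ix_(A, B)]
Y_BA, Y_BB = Y_bus[np.ix_(B, A)], Y_bus[np.ix_(B, B)]

# Step 2: Kron-reduced admittance matrix.
Y_reduced = Y_AA - Y_AB @ np.linalg.solve(Y_BB, Y_BA)

# Baseline: solve the full nodal equations with injections only at retained buses.
I = np.zeros(4, dtype=complex)
I[0] = 1.0
I[3] = -0.5 + 0.2j
V_full = np.linalg.solve(Y_bus, I)

# Step 3: solve the reduced network for the retained buses.
V_red = np.linalg.solve(Y_reduced, I[A])

# Step 4: deviation of retained-bus voltages from the baseline.
max_dev = np.max(np.abs(V_full[A] - V_red))
print(max_dev)
```

Because the eliminated buses carry no injection in this toy case, the deviation is at the level of floating-point round-off. In a real study, loads at eliminated buses and the nonlinearity of the power flow are what produce the nonzero errors that the tolerance in the output analysis is meant to bound.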

4.2. Protocol for Parameter Estimation in Chemical Reaction Networks

This protocol uses Kron reduction to fit kinetic models to partial time-series concentration data [4].

- Objective: To estimate unknown kinetic parameters in a mass-action model when experimental concentration data is available only for a subset of chemical species.

- Materials & Initialization:

  - Define the full kinetic model as a system of ODEs: dx/dt = S * v(x, k), where x is the full state vector, S is the stoichiometric matrix, and v are reaction rates with unknown parameters k.

  - Assemble experimental concentration time-series data for the subset of observable species, x_obs(t).

- Procedure:

  1. Apply Kron Reduction to the Model: Eliminate the ODEs corresponding to unobserved species from the system. This is performed mathematically on the network’s Laplacian-like structure, resulting in a reduced model dx_obs/dt = f_R(x_obs, k_R), where k_R are parameters that are functions of the original k [4].

  2. Solve the Reduced Parameter Estimation Problem: Using the available data x_obs(t), estimate the parameters k_R by minimizing the sum of squared residuals between the data and the reduced model's predictions. A weighted least squares technique is often employed [4].

  3. Recover Original Parameters: Solve the inverse mapping problem to find the values of the original parameters k that are consistent with the estimated k_R. This may involve solving a secondary optimization problem: minimizing the difference in a key dynamical property (e.g., a metric related to settling time) between the full model (with parameters k) and the validated reduced model (with parameters k_R) [4].

  4. Validate the Identified Model: Simulate the full original model using the estimated parameters k and compare its prediction for the observed species x_obs(t) against the experimental data to assess global fit.

- Output Analysis: The final output is a complete, parameterized kinetic model. The success of the estimation is evaluated via the training error from the least squares step and the predictive capability of the full validated model [4].
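The first two procedure steps can be sketched with SciPy on a toy network. Everything specific here is an assumption made for illustration: the reversible chain X1 ⇌ X2 ⇌ X3 with X2 unobserved, the "true" rate constants, the sign convention dx/dt = -L x, the noise level, and the use of synthetic data generated from the reduced model itself. Step 3 is deliberately left out, since two reduced constants do not determine four original ones without the additional dynamical criterion described in [4].

```python
import numpy as np
from scipy.integrate import solve_ivp
from scipy.optimize import least_squares

def laplacian(k1, k2, k3, k4):
    """Weighted Laplacian of X1 <-> X2 <-> X3 (columns sum to zero), with dx/dt = -L x."""
    return np.array([[ k1,      -k2,  0.0],
                     [-k1, k2 + k3,  -k4],
                     [0.0,      -k3,   k4]])

def kron_reduce(L, keep):
    keep = np.asarray(keep)
    elim = np.setdiff1d(np.arange(L.shape[0]), keep)
    return (L[np.ix_(keep, keep)]
            - L[np.ix_(keep, elim)] @ np.linalg.solve(L[np.ix_(elim, elim)],
                                                       L[np.ix_(elim, keep)]))

def simulate_reduced(k_R, x_init, t_eval):
    """Integrate the reduced model X1 <-> X3 with forward rate a and backward rate b."""
    a, b = k_R
    L_R = np.array([[a, -b], [-a, b]])
    sol = solve_ivp(lambda t, x: -L_R @ x, (t_eval[0], t_eval[-1]), x_init,
                    t_eval=t_eval, rtol=1e-9, atol=1e-12)
    return sol.y  # shape (2, len(t_eval))

# Step 1: Kron-reduce the full network onto the observed species X1 and X3.
k_true = (1.0, 2.0, 3.0, 0.5)                    # assumed values, for the demo only
L_R_true = kron_reduce(laplacian(*k_true), keep=[0, 2])
k_R_true = np.array([L_R_true[0, 0], L_R_true[1, 1]])

# Synthetic "measurements" of X1 and X3 (generated from the reduced model plus noise).
t = np.linspace(0.0, 10.0, 40)
x_init = np.array([1.0, 0.0])
rng = np.random.default_rng(0)
x_obs = simulate_reduced(k_R_true, x_init, t) + rng.normal(0.0, 0.005, size=(2, t.size))

# Step 2: weighted least squares fit of the reduced parameters (unit weights here).
weights = np.ones_like(x_obs)

def residuals(k_R):
    return (np.sqrt(weights) * (simulate_reduced(k_R, x_init, t) - x_obs)).ravel()

fit = least_squares(residuals, x0=[0.1, 0.1], bounds=(0.0, np.inf))
k_R_est = fit.x
print(k_R_true, k_R_est)
```

Passing the residuals scaled by the square root of the weights is a common way to obtain a weighted fit from `scipy.optimize.least_squares`, which minimizes the plain sum of squares of whatever vector it is given.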

Table 2: Summary of Key Reduction Protocols

| Protocol Step | Power System Focus [9] | Kinetic Model Focus [4] |
|---|---|---|
| 1. Preparation | Form Y_bus; run baseline power flow. | Define full ODE model; compile partial dataset x_obs(t). |
| 2. Reduction Action | Compute Y_reduced via Schur complement. | Derive reduced ODEs f_R for x_obs via Kron reduction. |
| 3. Core Estimation | N/A (structure is preserved). | Estimate reduced parameters k_R via (weighted) least squares. |
| 4. Validation | Compare voltage/power loss vs. full model. | Recover original k; simulate full model for comparison. |

The Scientist's Toolkit: Essential Research Reagents & Materials

For Power System Network Reduction:

- IEEE Standard Test Systems (e.g., 14, 39, 118-bus): Function: Benchmark networks with known topology and parameters for developing and validating reduction algorithms [20] [9].

- Power Flow Simulation Software (e.g., MATPOWER, PSSE): Function: Solves the AC power flow equations to generate baseline and post-reduction voltage, angle, and loss data for accuracy comparison.

- Computational Environment (MATLAB/Python with NumPy/SciPy): Function: Provides the linear algebra capabilities (matrix inversion, partitioning, multiplication) required to implement the Kron reduction formula efficiently [9].

For Kinetic Model Parameter Estimation:

- Biochemical Reaction Network Database (e.g., BioModels): Function: Repository of curated, published models providing standard systems (like nicotinic acetylcholine receptors) for testing parameter estimation methods [4].

- Ordinary Differential Equation (ODE) Solver Suite: Function: Integrates systems of ODEs (both full and reduced models) for simulation and generation of predicted concentration time-series during the fitting process.

- Optimization Toolbox (e.g., for Least Squares): Function: Performs the numerical minimization required to fit model parameters (k_R) to experimental data, often using gradient-based or global optimization algorithms [4].

Visual Workflows for Kron Reduction Processes

Diagram 1: Workflow for Optimal Power System Bus Elimination. This diagram illustrates the iterative, loss-aware protocol for reducing a power network [9].

Diagram 2: Workflow for Kinetic Model Parameter Estimation. This diagram outlines the three-step process of model reduction, parameter fitting, and validation for biochemical systems [4].

The estimation of unknown parameters in complex dynamical systems, such as biochemical reaction networks, is a fundamental challenge in computational biology and pharmacology. This step is critical for transforming a conceptual model into a predictive, quantitative tool. Within the context of Kron reduction method research, parameter fitting via (Weighted) Least Squares Optimization provides a mathematically robust and computationally efficient solution to an otherwise ill-posed problem [4]. When experimental data is incomplete—a common scenario in drug development where measuring all molecular species is technically or ethically impossible—direct parameter estimation becomes infeasible [4]. The integration of Kron reduction with weighted least squares (WLS) elegantly addresses this by systematically reducing the model to only the observed variables, creating a well-posed estimation framework [4].

The core principle involves minimizing the difference between experimental observations and model predictions. For a parameter vector θ, the WLS objective function is formulated as: S(θ) = Σ_i w_i (y_i - f(t_i, θ))^2, where y_i are experimental data points, f(t_i, θ) are the corresponding model predictions, and w_i are weights assigned to each residual [4]. The weighting is crucial; it allows the modeler to balance the contribution of different data points based on their estimated reliability or variance, preventing high-variance observations from disproportionately skewing the fit [22]. This is particularly valuable when integrating data from multiple sources or with heteroscedastic noise [23].
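The objective function translates directly into code. The sketch below is illustrative only: the single-exponential model, the sample times, and the standard deviations are invented, and inverse-variance weights (w_i = 1/σ_i²) are used as one common choice rather than as the weighting prescribed by the cited work.

```python
import numpy as np

def wls_objective(theta, t, y, w, model):
    """S(theta) = sum_i w_i * (y_i - f(t_i, theta))**2."""
    residuals = y - model(t, theta)
    return float(np.sum(w * residuals**2))

def decay(t, theta):
    """Hypothetical model f(t, theta): single exponential decay."""
    amplitude, rate = theta
    return amplitude * np.exp(-rate * t)

t = np.array([0.0, 1.0, 2.0, 4.0])
sigma = np.array([0.05, 0.05, 0.10, 0.20])   # assumed measurement standard deviations
w = 1.0 / sigma**2                           # inverse-variance weights (an assumption)
y = decay(t, (2.0, 0.5))                     # noise-free synthetic observations

S_true = wls_objective((2.0, 0.5), t, y, w, decay)   # parameters that generated the data
S_off = wls_objective((2.0, 0.7), t, y, w, decay)    # perturbed decay rate
print(S_true, S_off)
```

With these weights, the late, noisier points contribute less to S(θ) than the early, precise ones, which is the balancing effect described above.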

Kron reduction serves as a powerful pre-processing step for this optimization. It is a model reduction technique that preserves the kinetic structure (e.g., mass-action kinetics) of the original network while eliminating unobserved state variables [4]. The method operates on the network's graph Laplacian matrix, performing a Schur complement to produce a reduced model whose dynamics are confined to the subset of measurable species [24]. The parameters of this reduced model are functions of the original, unknown parameters. Therefore, fitting the reduced model to the available partial data via WLS provides a tractable pathway to estimate the original system's parameters [4]. This methodology is not only applicable to idealized systems but is also robust enough to handle realistic scenarios with noisy and limited data, as demonstrated in applications ranging from receptor pharmacology to synthetic enzyme pathways [4].
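As a worked illustration of how reduced parameters become functions of the original ones, consider a hypothetical reversible chain X1 ⇌ X2 ⇌ X3 in which only X1 and X3 are measured. The network, the rate constants k_1 to k_4, and the sign convention dx/dt = -L x are assumptions chosen for this sketch rather than an example taken from [4].

```latex
% Reactions: X_1 -> X_2 (k_1), X_2 -> X_1 (k_2), X_2 -> X_3 (k_3), X_3 -> X_2 (k_4)
\[
\dot{x} = -L x, \qquad
L = \begin{pmatrix}
  k_1  & -k_2      & 0    \\
  -k_1 & k_2 + k_3 & -k_4 \\
  0    & -k_3      & k_4
\end{pmatrix}
\]
% Retain A = {X_1, X_3}; eliminate B = {X_2}
\[
L_{\mathrm{red}}
  = L_{AA} - L_{AB} L_{BB}^{-1} L_{BA}
  = \begin{pmatrix} k_1 & 0 \\ 0 & k_4 \end{pmatrix}
    - \frac{1}{k_2 + k_3}
      \begin{pmatrix} -k_2 \\ -k_3 \end{pmatrix}
      \begin{pmatrix} -k_1 & -k_4 \end{pmatrix}
  = \begin{pmatrix} a & -b \\ -a & b \end{pmatrix}
\]
\[
a = \frac{k_1 k_3}{k_2 + k_3}, \qquad
b = \frac{k_2 k_4}{k_2 + k_3}
\]
```

The reduced network X1 ⇌ X3 again follows mass action kinetics, now with rate constants a and b. Fitting a and b to data on X1 and X3 is a well-posed problem, although two reduced constants alone cannot fix all four original ones, so recovering them requires additional information.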

Table 1: Core Concepts in Kron Reduction & WLS Parameter Estimation

| Concept | Definition | Role in Parameter Estimation |
|---|---|---|
| Ill-posed Problem | A problem where the solution is not unique or does not depend continuously on the data, often due to insufficient observations [4]. | Direct parameter estimation from partial species concentration data is ill-posed. |
| Kron Reduction | A graph-based model reduction technique that computes a Schur complement of the network Laplacian matrix, preserving kinetics [4] [24]. | Transforms an ill-posed estimation problem into a well-posed one by reducing the model to only observed variables. |
| Weighted Least Squares (WLS) | An optimization method that minimizes the weighted sum of squared differences between observed and predicted values [4]. | Finds the parameter values that best fit the Kron-reduced model to the experimental data. |
| Dynamic Weighting | A strategy to adaptively assign weights based on the reliability (e.g., variance) of detected vs. inferred data points [22]. | Balances contributions from different data sources (e.g., measured vs. imputed concentrations) to improve robustness. |

Experimental Protocols and Methodologies

The following protocol details a three-step methodology for parameter estimation in kinetic models of chemical reaction networks using Kron reduction and weighted least squares optimization, as established in recent research [4].

Protocol: Parameter Estimation via Kron Reduction & WLS

Objective: To estimate the unknown kinetic rate constants of a biochemical reaction network from incomplete, time-series concentration data of a subset of molecular species.