Mastering ReKinSim: A Comprehensive Tutorial for Reaction Kinetics Simulation in ADC and Drug Development

Abstract

This comprehensive tutorial provides researchers, scientists, and drug development professionals with a complete guide to using the ReKinSim reaction kinetics simulator. Beginning with foundational concepts in kinetic modeling and simulation principles, the article progresses through practical methodologies for building and parameterizing bioconjugation models, with a focus on antibody-drug conjugate (ADC) processes. It addresses common troubleshooting scenarios, optimization strategies for yield and purity, and concludes with robust validation techniques and comparative analysis against established tools and experimental data. By integrating theoretical knowledge with practical application, this guide empowers users to leverage in silico simulations for accelerated process development, scale-up prediction, and enhanced mechanistic understanding in biomedical research.

Understanding Reaction Kinetics Simulation: Core Principles and ReKinSim's Role in Biopharmaceutical Development

This article details the evolution of kinetic modeling in bioconjugation chemistry, with a focus on antibody-drug conjugate (ADC) process development. It contrasts traditional empirical statistical approaches with mechanistic kinetic models, which provide deeper chemical insight and greater predictive power. The discussion uses the ReKinSim reaction kinetics simulator as a flexible and efficient tool for implementing these models. We present detailed protocols for generating kinetic data through fed-batch conjugation and for constructing and validating models, supported by comparison tables and diagrams of workflows and reaction pathways. The integration of kinetic modeling into a Quality by Design (QbD) framework is highlighted as essential for robust, efficient therapeutic development [1] [2] [3].

Bioconjugation, the chemical linking of biomolecules to functional payloads, is the core manufacturing step for a growing class of biologics, most notably antibody-drug conjugates (ADCs). The success of an ADC hinges on conjugating a specific number of cytotoxic payload molecules onto a monoclonal antibody, defining the final drug-to-antibody ratio (DAR) and drug load distribution (DLD), which directly influence therapeutic potency and toxicity [1]. The conjugation reaction typically generates a complex mixture of species, and controlling this heterogeneity is a major process development challenge [3].

Regulatory agencies encourage Quality by Design (QbD) principles, which require moving from purely empirical process development to development grounded in thorough process understanding. Kinetic modeling is a cornerstone of this approach [1]. Empirical models (e.g., from Design of Experiments, DoE) can correlate inputs to outputs, but they offer limited extrapolation and no insight into the underlying chemical mechanism. In contrast, mechanistic kinetic models, built on systems of differential equations that describe the fundamental reaction steps, provide a quantitative understanding of the process. This enables in silico screening and optimization, which is invaluable for minimizing the use of costly and toxic payloads and ensuring process robustness [2] [3]. This article traces the path from empirical to mechanistic modeling and gives practical guidance on implementing these models with simulation tools such as ReKinSim [4].

Empirical and Statistical Modeling Approaches

Empirical approaches rely on observed data patterns without requiring a priori knowledge of the underlying chemical mechanism. They are often used for initial process characterization and screening.

- Design of Experiments (DoE): DoE is a statistical method used to systematically explore the effect of multiple process parameters (e.g., reactant concentrations, temperature, pH, reaction time) on Critical Quality Attributes (CQAs) like average DAR. It aims to identify optimal conditions with a reduced number of experiments compared to one-factor-at-a-time studies [1] [3].

- Statistical Correlations & Multivariate Analysis: These methods establish quantitative relationships between process parameters and outcomes. For example, a correlation might be developed between the initial molar drug-to-antibody ratio and the final average DAR. While useful for interpolation within the studied range, their predictive power falters when extrapolating to new conditions, as they do not account for the dynamic, time-dependent nature of the reaction [2] [3].

Table 1: Comparison of Empirical/Statistical Modeling Approaches in Bioconjugation

| Approach | Primary Function | Key Advantages | Major Limitations | Typical Use Case in Bioconjugation |
|---|---|---|---|---|
| Design of Experiments (DoE) | Identify significant process factors and their interactions; find optimal conditions. | Reduces total number of experiments needed; efficient screening tool. | Provides no mechanistic insight; models are often limited to interpolation within design space. | Initial screening of reaction parameters (pH, temp, excess) to identify a feasible operating window [1]. |
| Multivariate Regression | Establish quantitative input-output correlations (e.g., [Drug] vs. final DAR). | Simple to implement; useful for summarizing trends in historical data. | Cannot predict time-course profiles; poor at extrapolation; assumes fixed relationship. | Building a preliminary model for DAR based on historical batch data [3]. |
| High-Throughput Screening (HTS) | Generate large datasets across many conditions rapidly (e.g., in microplates). | Accelerates data generation; enables exploration of vast parameter spaces. | Data may be noisier; scale-down models must be representative; still requires a modeling framework for analysis. | Rapidly testing a library of different payloads or engineered antibody variants [3]. |

Mechanistic Kinetic Modeling: Principles and Development

Mechanistic modeling describes the system of elementary or pseudo-elementary reactions that constitute the overall conjugation process. The model consists of a set of ordinary differential equations (ODEs) that track the concentration of each species over time.

Fundamental Components of a Mechanistic Model

- Reaction Mechanism: A set of chemical equations defining how reactants convert to products. For a site-specific cysteine conjugation aiming for DAR 2, the simplest mechanism is two sequential steps: mAb + Drug → mAb-Drug1, followed by mAb-Drug1 + Drug → mAb-Drug2 [3].

- Rate Laws: An equation for each reaction step defining its rate as a function of reactant concentrations and a rate constant (k). For a bimolecular reaction, this is often a second-order rate law: Rate = k * [mAb] * [Drug].

- Rate Constants (k): The model parameters that quantify the speed of each step. They are typically determined by fitting the model ODEs to time-course experimental data.
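The two-step mechanism and its second-order rate laws translate directly into four coupled ODEs (antibody, two conjugates, free payload). The sketch below integrates them with SciPy's `solve_ivp` rather than ReKinSim. The rate constants and starting concentrations are hypothetical placeholders, not fitted values.

```python
import numpy as np
from scipy.integrate import solve_ivp

# Hypothetical second-order rate constants in uM^-1 s^-1 (2e-3 uM^-1 s^-1 = 2000 M^-1 s^-1);
# placeholders for illustration, not fitted values
K1, K2 = 2e-3, 1e-3

def dar2_rhs(t, y, k1, k2):
    """Mass-action ODEs for mAb + Drug -> mAb-Drug1 and mAb-Drug1 + Drug -> mAb-Drug2."""
    mab, d1, d2, drug = y
    r1 = k1 * mab * drug   # rate of the first conjugation step
    r2 = k2 * d1 * drug    # rate of the second conjugation step
    return [-r1, r1 - r2, r2, -r1 - r2]

# Hypothetical start: 10 uM antibody with a 3-fold molar excess of payload
y0 = [10.0, 0.0, 0.0, 30.0]
t_eval = np.linspace(0, 3600, 61)   # one hour, sampled every minute
sol = solve_ivp(dar2_rhs, (0, 3600), y0, args=(K1, K2), t_eval=t_eval,
                rtol=1e-8, atol=1e-10)

mab, d1, d2, drug = sol.y
avg_dar = (d1 + 2 * d2) / (mab + d1 + d2)   # average drug-to-antibody ratio
print(f"average DAR after 1 h: {avg_dar[-1]:.2f}")
```

Two conservation checks are useful with any such model. Total antibody (mAb + mAb-Drug1 + mAb-Drug2) and total payload (free + conjugated) must each stay constant.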

Model Development and Selection Workflow

Developing a robust model is an iterative process. A key challenge is selecting the correct model structure from several plausible candidates [3].

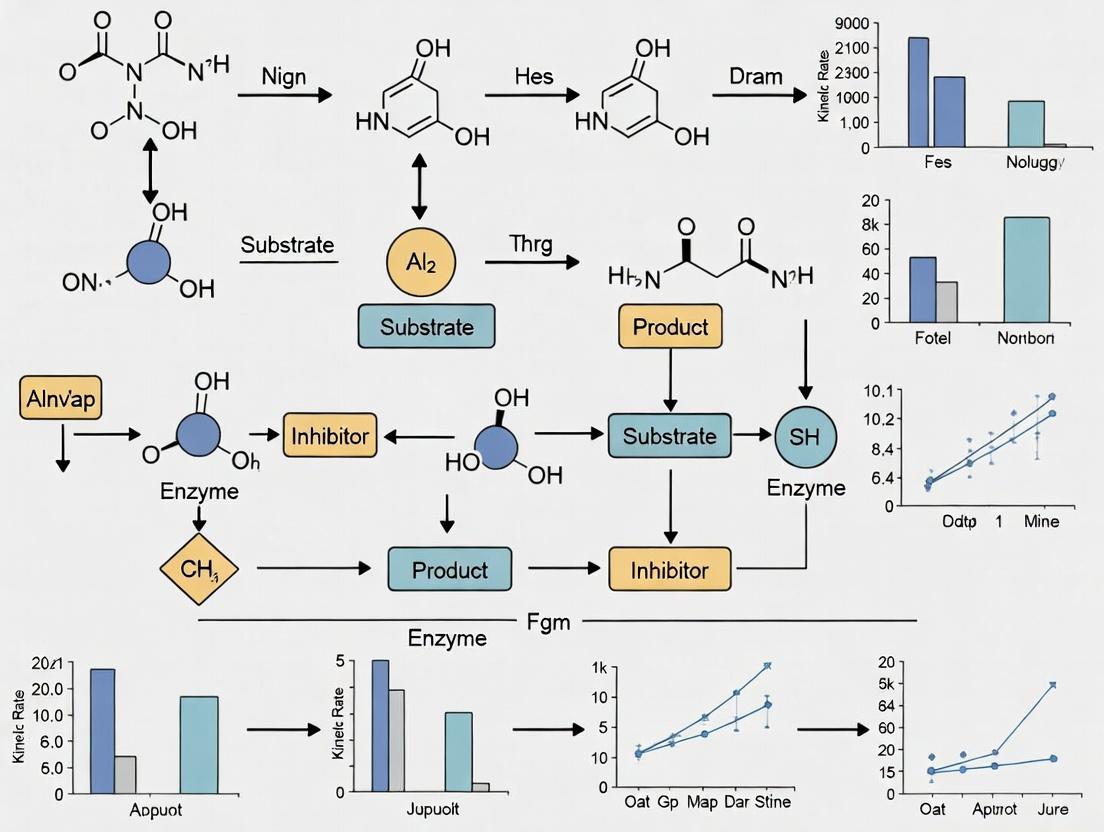

Diagram: Iterative Workflow for Mechanistic Kinetic Model Development

Advanced Model Considerations

Real-world systems often require more complex models. For interchain disulfide conjugation (targeting DAR 8), the antibody has multiple reactive sites (typically 8 cysteines), leading to a vast array of possible intermediate species. Models must account for different reaction rates depending on the site or the evolving chemical environment of the antibody. Studies have shown that the binding of the first drug molecule can influence the rate of binding of the second, an effect that must be captured in the model structure [1] [3]. Fed-batch experiments, where payload is added gradually, are particularly useful for decelerating the reaction and elucidating such complex mechanisms [1].

Table 2: Types of Mechanistic Kinetic Models for Bioconjugation

| Model Type | Description | Complexity | Application Example |
|---|---|---|---|
| Sequential Independent | All conjugation sites are identical and react independently at the same rate. | Low | Simple site-specific conjugation (2 identical engineered cysteines) [3]. |
| Sequential Influenced | The reaction rate for a site changes based on the occupancy of other sites (neighbor effect). | Medium | Interchain cysteine conjugation where modification alters antibody flexibility/reactivity [1] [3]. |
| Parallel-Serial Network | Accounts for multiple distinct types of reactive sites (e.g., on light vs. heavy chains) with different intrinsic rates. | High | Detailed modeling of lysine-based conjugation or complex interchain conjugation trajectories [1]. |
| Integrated Side Reactions | Includes pathways for payload degradation or hydrolysis in solution. | High | Modeling reactions where payload stability is a limiting factor [2]. |
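The first two model types in the table differ only in how the step constants of a D0 → D1 → … → Dn chain are assigned. With identical, independent sites, step i has (n − i) free sites, so its effective constant is (n − i)·k_site. An influenced model lets that constant change with occupancy. The sketch below assumes this statistical-factor formulation. The helper names, the 0.7 "neighbor effect" factor, and all concentrations are hypothetical choices for illustration.

```python
import numpy as np
from scipy.integrate import solve_ivp

def make_sequential_rhs(step_constants):
    """Hypothetical helper: ODEs for D0 -> D1 -> ... -> Dn, each step consuming one payload.

    step_constants[i] is the effective second-order constant for Di + Drug -> Di+1.
    State vector: [D0, ..., Dn, Drug].
    """
    n = len(step_constants)
    k = np.asarray(step_constants, dtype=float)

    def rhs(t, y):
        species, drug = y[:n + 1], y[n + 1]
        rates = k * species[:n] * drug   # flux through each step Di -> Di+1
        dy = np.zeros_like(y)
        dy[:n] -= rates                  # Di consumed
        dy[1:n + 1] += rates             # Di+1 formed
        dy[n + 1] = -rates.sum()         # one payload consumed per step
        return dy
    return rhs

def independent_sites(n_sites, k_site):
    """Identical, independent sites: step i has (n_sites - i) free sites left."""
    return [(n_sites - i) * k_site for i in range(n_sites)]

n_sites, k_site = 8, 2e-4            # hypothetical per-site constant, uM^-1 s^-1
y0 = np.zeros(n_sites + 2)
y0[0], y0[-1] = 10.0, 100.0          # 10 uM reduced mAb, 10-fold payload excess (hypothetical)

models = {
    "sequential independent": independent_sites(n_sites, k_site),
    # hypothetical neighbor effect: each conjugation slows the next step by 30 %
    "sequential influenced": [c * 0.7 ** i
                              for i, c in enumerate(independent_sites(n_sites, k_site))],
}
results = {}
for name, ks in models.items():
    sol = solve_ivp(make_sequential_rhs(ks), (0, 600), y0, rtol=1e-8, atol=1e-10)
    dist = sol.y[:n_sites + 1, -1]
    dld = dist / dist.sum()          # drug load distribution as fractions of antibody
    results[name] = dld
    print(name, "average DAR:", round(float(np.arange(n_sites + 1) @ dld), 2))
```

For the independent case with the payload held effectively constant, this chain reproduces a binomial drug load distribution. That is a convenient sanity check before adding neighbor effects.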

Integration with the ReKinSim Simulation Platform

The ReKinSim (Reaction Kinetics Simulator) framework is a computational environment designed for describing biogeochemical reactions and fitting them to experimental data [4]. Its features match the needs of mechanistic bioconjugation modeling:

- Generic ODE Solver: It provides a mathematical tool for solving arbitrarily large sets of non-linear ODEs of arbitrary form, which is essential for complex conjugation networks [4].

- Flexible Model Definition: Users can define any kinetic model by writing the set of relevant chemical reactions, and ReKinSim automatically handles the translation into ODEs [4].

- Nonlinear Data-Fitting Module: An integrated, user-friendly module for parameter estimation allows model calibration against experimental time-course data [4].

- Computational Efficiency & Integration: Designed for efficiency, it can be easily integrated with other computational environments and data sources, supporting advanced workflows like global sensitivity or identifiability analysis [4].
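The automatic translation from a list of reactions to ODEs can be illustrated with a small mass-action builder. It assembles a stoichiometric matrix and multiplies it by the vector of reaction rates. This is a hypothetical sketch of the general idea, not ReKinSim's input syntax or internal implementation. The reaction list, including the payload hydrolysis side reaction, and its rate constants are placeholders.

```python
import numpy as np
from scipy.integrate import solve_ivp

# Hypothetical reaction list: (reactants, products, rate constant); NOT ReKinSim's input format
REACTIONS = [
    ({"mAb": 1, "Drug": 1}, {"mAbD1": 1}, 2e-3),     # uM^-1 s^-1
    ({"mAbD1": 1, "Drug": 1}, {"mAbD2": 1}, 1e-3),   # uM^-1 s^-1
    ({"Drug": 1}, {"DrugHydrolyzed": 1}, 1e-4),      # s^-1, payload hydrolysis side reaction
]

def build_mass_action_model(reactions):
    """Translate a reaction list into species names, an index, a stoichiometric matrix, and an ODE rhs."""
    species = sorted({s for r, p, _ in reactions for s in (*r, *p)})
    index = {s: i for i, s in enumerate(species)}
    # Stoichiometric matrix: rows = species, columns = reactions
    stoich = np.zeros((len(species), len(reactions)))
    for j, (reactants, products, _) in enumerate(reactions):
        for s, nu in reactants.items():
            stoich[index[s], j] -= nu
        for s, nu in products.items():
            stoich[index[s], j] += nu
    k = np.array([rk for _, _, rk in reactions])

    def rhs(t, y):
        rates = k.copy()
        for j, (reactants, _, _) in enumerate(reactions):
            for s, nu in reactants.items():
                rates[j] *= y[index[s]] ** nu   # mass-action rate law
        return stoich @ rates
    return species, index, stoich, rhs

species, index, stoich, rhs = build_mass_action_model(REACTIONS)
y0 = np.zeros(len(species))
y0[index["mAb"]], y0[index["Drug"]] = 10.0, 30.0   # uM, hypothetical starting point
sol = solve_ivp(rhs, (0, 3600), y0, rtol=1e-8, atol=1e-10)
for s in species:
    print(f"{s:>15}: {sol.y[index[s], -1]:.3f} uM")
```

Separating the stoichiometry from the rate laws makes it straightforward to add side reactions such as hydrolysis without rewriting any equations by hand.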

Experimental Protocols for Kinetic Data Generation

Generating high-quality, time-course concentration data is critical for model calibration and validation. The following protocol is adapted from recent studies on cysteine-based ADC conjugation [1].

Protocol: Fed-Batch Conjugation for Detailed Kinetic Analysis

Objective: To perform a controlled antibody-drug conjugation reaction with gradual payload feeding, enabling detailed sampling for kinetic profiling.

The Scientist's Toolkit: Key Research Reagent Solutions

| Item | Function in Experiment | Example/Specification |
|---|---|---|
| Engineered mAb | The protein substrate for conjugation. | IgG1 with engineered cysteines (for DAR 2) or native interchain disulfides (for DAR 8). |
| Maleimide-Payload | The conjugation reagent. | Cytotoxic drug (e.g., "Drug1") or fluorescent surrogate (e.g., NPM) [1]. |
| TCEP (Tris(2-carboxyethyl)phosphine) | A reducing agent to cleave native interchain disulfide bonds. | Required for DAR 8 conjugation to generate free thiols [1] [3]. |
| DHAA (L-dehydroascorbic acid) | A re-oxidizing agent to re-form non-conjugated disulfides after reduction. | Used in DAR 2 processes to re-oxidize non-engineered cysteines [1]. |
| Conjugation Buffer | Maintains optimal pH and environment for the reaction. | Typically phosphate or borate buffer, pH 6.5-7.5. |
| RP-UHPLC System | The analytical tool for quantifying conjugated species. | System with C4 or C8 column under reducing conditions to separate and quantify conjugated light/heavy chains [1]. |

Materials & Setup:

- Purified monoclonal antibody (mAb) at known concentration.

- Maleimide-functionalized payload dissolved in DMSO or buffer.

- Reaction buffer (e.g., 50 mM phosphate, 5 mM EDTA, pH 7.0).

- Quenching solution (e.g., excess L-cysteine or N-acetylcysteine).

- HPLC vials and analytical instrumentation (RP-UHPLC with UV/Vis detector).

- Temperature-controlled reactor with stir plate and programmable syringe pump for fed-batch addition.

Procedure:

- Antibody Preparation (Modality Dependent):

- For DAR 2 (site-specific): If necessary, reduce interchain disulfides with TCEP, followed by buffer exchange and selective re-oxidation of non-engineered cysteines with DHAA [1].

- For DAR 8 (interchain): Fully reduce the antibody with excess TCEP, followed by buffer exchange to remove the reductant and generate free thiols [1].

- Reaction Initiation:

- Place the prepared mAb solution in the temperature-controlled reactor at the target temperature (e.g., 25°C).

- Initiate the reaction by adding a small initial bolus of the payload stock solution (e.g., 10% of total target) to achieve a low starting molar excess (e.g., 1x).

- Fed-Batch Phase:

- Immediately start the syringe pump to feed the remaining payload solution at a constant, slow rate over several hours (e.g., 6-24 hours). Gradual feeding slows the overall reaction. This yields more data points during the critical initial phase and keeps the kinetics of intermediate species from being obscured [1].

- Sampling:

- At predetermined time intervals (e.g., 0, 1, 2, 5, 10, 20, 40, 60, 120, 180... minutes), withdraw a small aliquot from the reaction mixture.

- Immediately mix the aliquot with the quenching solution to stop the conjugation reaction.

- Sample Analysis:

- Analyze quenched samples via reducing RP-UHPLC. The reducing agent (e.g., dithiothreitol) in the analysis breaks the antibody into light and heavy chains, which are separated by chromatography. The UV signal allows quantification of unconjugated and drug-conjugated light and heavy chain species, providing a detailed conjugation trajectory [1].

- Data Compilation:

- For each time point, calculate the concentrations of key species: unconjugated mAb, mono-conjugated species (mAb-Drug1), and di-conjugated species (mAb-Drug2), or their chain-specific equivalents.
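Before running the fed-batch experiment, the same two-step model can be extended with a constant payload feed. This lets you check whether the planned bolus, feed rate, and sampling schedule will actually resolve the intermediate species. The sketch below assumes a constant-rate feed of a concentrated stock, so the reaction volume grows slightly. It tracks amounts (nmol) plus volume, so dilution is handled automatically. Every process value shown (volume, bolus and feed equivalents, stock concentration, rate constants) is a hypothetical placeholder, not a setting from the cited study.

```python
import numpy as np
from scipy.integrate import solve_ivp

# Hypothetical process settings (illustrative placeholders, not values from [1])
K1, K2 = 2e-3, 1e-3            # uM^-1 s^-1, placeholder rate constants
V0 = 10.0                      # mL, initial reaction volume
MAB0 = 10.0                    # uM, prepared antibody
BOLUS_EQ, FEED_EQ = 1.0, 2.0   # molar equivalents of payload: initial bolus, fed portion
FEED_CONC = 1000.0             # uM payload in the feed stock
T_FEED = 6 * 3600.0            # s, feed duration

n_mab0 = MAB0 * V0                           # nmol (uM x mL)
feed_volume = FEED_EQ * n_mab0 / FEED_CONC   # mL of stock to deliver
Q = feed_volume / T_FEED                     # mL/s, constant feed rate

def fed_batch_rhs(t, y):
    """State: amounts (nmol) of mAb, mAb-Drug1, mAb-Drug2, free payload, plus volume (mL)."""
    n_mab, n_d1, n_d2, n_drug, vol = y
    c_mab, c_d1, c_drug = n_mab / vol, n_d1 / vol, n_drug / vol   # concentrations, uM
    r1 = K1 * c_mab * c_drug * vol   # nmol/s converted by step 1
    r2 = K2 * c_d1 * c_drug * vol    # nmol/s converted by step 2
    return [-r1, r1 - r2, r2, -r1 - r2 + Q * FEED_CONC, Q]

y0 = [n_mab0, 0.0, 0.0, BOLUS_EQ * n_mab0, V0]
sample_min = np.array([0, 1, 2, 5, 10, 20, 40, 60, 120, 180, 240, 300, 360])
sol = solve_ivp(fed_batch_rhs, (0, T_FEED), y0, t_eval=sample_min * 60.0,
                rtol=1e-8, atol=1e-10)

n_mab, n_d1, n_d2, n_drug, vol = sol.y
avg_dar = (n_d1 + 2 * n_d2) / (n_mab + n_d1 + n_d2)   # average DAR at each sample
for t_min, dar in zip(sample_min, avg_dar):
    print(f"{int(t_min):4d} min  average DAR = {dar:.2f}")
```

The average DAR line is the same calculation used in the Data Compilation step: (1·[mAb-Drug1] + 2·[mAb-Drug2]) divided by total antibody.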

Protocol: Model Calibration and Validation Workflow

Objective: To fit a candidate mechanistic model to experimental data, select the best model structure, and validate its predictive performance.

Procedure:

- Model Implementation: Code the ODEs for the candidate reaction mechanisms (e.g., in ReKinSim, MATLAB, or Python).

- Parameter Estimation (Calibration): Use a nonlinear regression algorithm to find the set of rate constants (k) that minimize the difference between the model simulations and the experimental time-course data from a calibration dataset [3].

- Model Selection: Employ cross-validation. The calibration dataset is split into several groups. The model is repeatedly fitted to all but one group and then used to predict the held-out group. The model with the lowest cross-validation error (e.g., RMSECV) and highest predictive coefficient (Q²) is selected [3].

- Model Validation: Test the final selected model's predictive power against a completely independent validation dataset. This dataset should include conditions within and, if possible, outside the calibration range. A high R² of prediction (e.g., >0.97) indicates a robust, reliable model [3].

- In Silico Application: Use the validated model to run simulations for process optimization (e.g., screening initial concentrations to achieve a target DAR profile with minimal payload excess) [1] [3].
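The calibration and validation steps can be sketched end to end with SciPy's `least_squares`. In this example, the "experimental" data are synthetic: the two-step model is run with hypothetical true constants and 2 % multiplicative noise is added. The rate constants are fitted in log space on two calibration runs. The fitted model then predicts a held-out run, and an R² of prediction is computed over all species. A full cross-validation as described above would repeat the fit while leaving out each group in turn; that loop is omitted here for brevity.

```python
import numpy as np
from scipy.integrate import solve_ivp
from scipy.optimize import least_squares

T_SAMPLE = np.array([0, 60, 120, 300, 600, 1200, 2400, 3600], dtype=float)   # s

def simulate(log_k, mab0, drug0, t=T_SAMPLE):
    """[mAb, mAb-Drug1, mAb-Drug2] in uM at times t for the two-step sequential model."""
    k1, k2 = np.exp(log_k)   # parameters are fitted in log space so they stay positive
    def rhs(_, y):
        mab, d1, _d2, drug = y
        r1, r2 = k1 * mab * drug, k2 * d1 * drug
        return [-r1, r1 - r2, r2, -r1 - r2]
    sol = solve_ivp(rhs, (0, t[-1]), [mab0, 0.0, 0.0, drug0], t_eval=t,
                    method="LSODA", rtol=1e-10, atol=1e-12)
    return sol.y[:3]

# Synthetic stand-in for measured data: hypothetical "true" constants plus 2 % noise
rng = np.random.default_rng(0)
TRUE_LOG_K = np.log([2e-3, 1e-3])   # uM^-1 s^-1

def synthetic_run(mab0, drug0):
    clean = simulate(TRUE_LOG_K, mab0, drug0)
    return clean * (1 + 0.02 * rng.standard_normal(clean.shape))

calibration = [(10.0, 20.0, synthetic_run(10.0, 20.0)),
               (10.0, 40.0, synthetic_run(10.0, 40.0))]
validation = (10.0, 30.0, synthetic_run(10.0, 30.0))   # independent condition

def residuals(log_k):
    """Stacked model-minus-data residuals over all calibration runs."""
    return np.concatenate([(simulate(log_k, m, d) - obs).ravel() for m, d, obs in calibration])

fit = least_squares(residuals, x0=np.log([5e-4, 5e-4]),
                    bounds=(np.log(1e-6), np.log(1e-1)), diff_step=1e-6)
k_fit = np.exp(fit.x)

# Predict the held-out run and compute the R^2 of prediction
m, d, obs = validation
pred = simulate(fit.x, m, d)
r2_pred = 1 - np.sum((obs - pred) ** 2) / np.sum((obs - obs.mean()) ** 2)
print("fitted k1, k2:", k_fit, " R^2 of prediction:", round(float(r2_pred), 4))
```

Fitting log(k) instead of k keeps the constants positive and puts both parameters on a similar numerical scale. The bounds are an assumed safeguard against unphysical values.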

Diagram: A Simple Two-Step Sequential Mechanism for Site-Specific Cysteine Conjugation

The transition from empirical correlations to mechanistic kinetic modeling represents a paradigm shift in bioconjugation process development, enabling a deeper, more predictive understanding aligned with QbD principles. For complex reactions like ADC conjugation, mechanistic models unravel the intricacies of reaction networks, allow for precise in silico optimization to conserve valuable reagents, and enhance process robustness. The successful implementation of this approach relies on well-designed fed-batch experiments to generate rich kinetic data, rigorous model selection and validation protocols, and powerful, flexible simulation tools like ReKinSim. Integrating these elements provides researchers and process developers with a formidable digital toolkit to accelerate the development of next-generation bioconjugate therapeutics.

Core Concepts in Reaction Kinetics

This section outlines the fundamental mathematical and computational concepts that form the basis for analyzing and simulating complex reaction systems, which are central to the development and application of the ReKinSim reaction kinetics simulator.

Rate Laws and Reaction Orders

The rate of a chemical reaction quantifies how quickly reactants are converted into products and is mathematically expressed by a rate law [5]. For a reaction involving reactants A and B, the rate law is: rate = k[A]^m[B]^n, where k is the rate constant, [A] and [B] are concentrations, and the exponents m and n are the reaction orders with respect to each reactant [5]. The overall reaction order is the sum of these individual orders. Reaction order defines the dependence of rate on concentration: doubling the concentration of a first-order reactant doubles the rate, while doubling a second-order reactant quadruples the rate [5].

Complex reactions involve multiple elementary steps, and their overall rate law cannot be deduced directly from the stoichiometric equation [6]. Instead, it follows from the mechanism. When one elementary step is much slower than the rest, this rate-determining step (RDS) acts as the bottleneck for the entire process. Together with any fast steps preceding it, the RDS then sets the overall rate law [6].

Table: Characteristics of Common Reaction Orders

| Reaction Order | Rate Law | Integrated Rate Law | Half-Life (t₁/₂) | Linear Plot | k units |
|---|---|---|---|---|---|
| Zero-Order | -d[A]/dt = k | [A] = [A]₀ - kt | [A]₀/(2k) | [A] vs. t | M/s |
| First-Order | -d[A]/dt = k[A] | [A] = [A]₀e^(-kt) | ln(2)/k | ln[A] vs. t | s⁻¹ |
| Second-Order | -d[A]/dt = k[A]² | 1/[A] = 1/[A]₀ + kt | 1/(k[A]₀) | 1/[A] vs. t | M⁻¹s⁻¹ |
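The integrated rate laws and half-life expressions in the table can be checked against each other numerically. Evaluating each integrated law at its own half-life should return exactly [A]₀/2. The starting concentration and rate constants below are arbitrary illustrative values, and each order uses a k with the units shown in the table.

```python
import math

# Integrated rate laws from the table (single reactant A, rate defined as -d[A]/dt)
def conc_zero(a0, k, t):
    return max(a0 - k * t, 0.0)   # zero order: linear decay until A is exhausted

def conc_first(a0, k, t):
    return a0 * math.exp(-k * t)

def conc_second(a0, k, t):
    return 1.0 / (1.0 / a0 + k * t)

INTEGRATED = {"zero": conc_zero, "first": conc_first, "second": conc_second}
HALF_LIFE = {
    "zero": lambda a0, k: a0 / (2 * k),
    "first": lambda a0, k: math.log(2) / k,
    "second": lambda a0, k: 1.0 / (k * a0),
}

# Illustrative values; k has different units for each order (M/s, 1/s, 1/(M s))
A0 = 1.0e-3
K = {"zero": 1.0e-5, "first": 1.0e-2, "second": 10.0}

for order in ("zero", "first", "second"):
    t_half = HALF_LIFE[order](A0, K[order])
    t_half_2x = HALF_LIFE[order](2 * A0, K[order])   # effect of doubling [A]0
    print(f"{order:>6} order: t1/2 = {t_half:6.1f} s; with 2x[A]0: {t_half_2x:6.1f} s")
```

Doubling [A]₀ doubles the zero-order half-life, leaves the first-order half-life unchanged, and halves the second-order half-life. This dependence is a quick diagnostic of reaction order.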

Ordinary Differential Equations (ODEs) for Complex Mechanisms

The time-dependent change in concentration for each species in a multi-step mechanism is described by a system of Ordinary Differential Equations (ODEs). Each ODE is constructed from the sum of the rates of all steps that produce or consume that species.

For complex mechanisms, the steady-state approximation is a critical tool for simplifying these ODE systems [6]. It applies to highly reactive intermediates and assumes that their concentration stays approximately constant (d[I]/dt ≈ 0), because their rate of formation balances their rate of consumption. Their concentration can then be expressed in terms of reactant concentrations and rate constants. That expression is substituted into the rate law of the product-forming (often rate-determining) step to derive a manageable overall rate expression [6].
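A minimal way to see when the steady-state approximation holds is to compare it with the full ODE solution for A → I → P, where the intermediate I is consumed much faster than it forms (k₂ ≫ k₁). Setting d[I]/dt ≈ 0 gives [I] ≈ k₁[A]/k₂, so d[P]/dt ≈ k₁[A]. The rate constants are hypothetical values chosen only to make I a reactive intermediate.

```python
import numpy as np
from scipy.integrate import solve_ivp

K1, K2 = 0.01, 10.0   # s^-1; hypothetical, with K2 >> K1 so I is a reactive intermediate
A0 = 1.0              # mM

def full_rhs(t, y):
    a, inter, p = y
    return [-K1 * a, K1 * a - K2 * inter, K2 * inter]

t = np.linspace(0, 300, 301)
full = solve_ivp(full_rhs, (0, 300), [A0, 0.0, 0.0], t_eval=t,
                 method="LSODA", rtol=1e-9, atol=1e-12)

# Steady-state approximation: d[I]/dt ~ 0  =>  [I] = K1[A]/K2, and d[P]/dt = K1[A]
a_ssa = A0 * np.exp(-K1 * t)
i_ssa = K1 * a_ssa / K2
p_ssa = A0 - a_ssa    # product formed equals reactant consumed (the small [I] is neglected)

print("max |[P]_full - [P]_SSA| =", np.max(np.abs(full.y[2] - p_ssa)))
```

After a brief transient of roughly 1/k₂, the approximate [I] tracks the exact value closely. The remaining discrepancy in [P] is bounded by the small amount of intermediate present, on the order of (k₁/k₂)·[A]₀.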

Numerical Integration of Kinetic ODEs

For all but the simplest reaction systems, the coupled ODEs (typically non-linear whenever bimolecular steps are involved) have no closed-form solution. Numerical integration is required to compute the concentration profiles over time. This is the core computational function of kinetics simulators such as KINSIM and ReKinSim [7].

The process involves defining the mechanism (its steps and initial estimates of the rate constants), setting initial concentrations, and using an algorithm (e.g., Runge-Kutta) to calculate concentrations iteratively forward in time. The simulated time course can then be directly compared to experimental data, allowing researchers to test proposed mechanisms and refine estimated rate constants [7].
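The stepping procedure can be made concrete with the classical fourth-order Runge-Kutta method on a fixed time grid. It is applied here to a hypothetical first-order chain A → B → C, whose exact solution for [B] is known and used only to check the integrator. Production simulators use adaptive, and for stiff systems implicit, solvers, so this fixed-step version is purely illustrative.

```python
import numpy as np

def rk4(f, y0, t):
    """Classical fourth-order Runge-Kutta on a fixed time grid t."""
    y = np.empty((len(t), len(y0)))
    y[0] = y0
    for n in range(len(t) - 1):
        h = t[n + 1] - t[n]
        k1 = f(t[n], y[n])
        k2 = f(t[n] + h / 2, y[n] + h / 2 * k1)
        k3 = f(t[n] + h / 2, y[n] + h / 2 * k2)
        k4 = f(t[n] + h, y[n] + h * k3)
        y[n + 1] = y[n] + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return y

# Hypothetical mechanism: A -> B -> C with first-order constants (s^-1)
K1, K2 = 0.3, 0.1

def rhs(t, y):
    a, b, c = y
    return np.array([-K1 * a, K1 * a - K2 * b, K2 * b])

t = np.linspace(0, 30, 301)   # step h = 0.1 s
y = rk4(rhs, np.array([1.0, 0.0, 0.0]), t)

# Analytical [B](t) for A0 = 1, used here only to check the integrator
b_exact = K1 / (K2 - K1) * (np.exp(-K1 * t) - np.exp(-K2 * t))
print("max error in [B]:", np.max(np.abs(y[:, 1] - b_exact)))
```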

Experimental Protocols for Kinetic Analysis

Protocol: Determining Reaction Order and Rate Constant

Objective: To experimentally determine the order of reaction with respect to a reactant and calculate the rate constant.

Principles: The order is found by observing how the initial reaction rate changes when the initial concentration of the target reactant is varied, while others are held in large excess [5]. The rate constant is derived from the slope of the appropriate linear plot based on the determined order [5].

Procedure:

- Prepare Reaction Mixtures: Create a series of solutions in which the initial concentration of the reactant of interest, [A]₀, is varied (e.g., 0.5x, 1x, 2x). Keep the other reactants in at least a 10-fold excess to create pseudo-order conditions [5].

- Initiate Reaction & Monitor: Rapidly mix reagents to start the reaction. Use a suitable spectroscopic technique (UV-Vis absorbance, fluorescence) to monitor the change in concentration of a reactant or product over time [5].

- Calculate Initial Rates: For each run, determine the initial rate from the slope of the concentration-time curve as t → 0.

- Determine Order: Plot log(initial rate) vs. log([A]₀). The slope of the line equals the order m with respect to A [5].

- Determine Rate Constant: Based on the order, plot the corresponding linear form (e.g., ln[A] vs. t for first-order). The absolute value of the slope is the rate constant k [5]. Under pseudo-order conditions, this slope is an observed constant (k_obs) that still contains the concentrations of the excess reactants. For a reaction that is also first-order in an excess reactant B, for example, k = k_obs/[B]₀.
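The analysis steps can be sketched with NumPy linear regressions on synthetic, noise-free traces. The example assumes a hypothetical reaction A + B → P that is first order in each reactant, with B held in 10-fold excess at the highest [A]₀ so that [B] stays effectively constant. The rate constant, concentrations, and the five-point initial-slope window are illustrative choices.

```python
import numpy as np

# Hypothetical system: A + B -> P, first order in A and in B; B in >= 10-fold excess
K_TRUE = 50.0                                    # M^-1 s^-1 (placeholder)
B0 = 2.0e-3                                      # M, treated as constant (pseudo-order)
A0_SERIES = np.array([0.5, 1.0, 2.0]) * 1.0e-4   # M, varied reactant (0.5x, 1x, 2x)
t = np.linspace(0, 20, 201)                      # s

def trace(a0):
    """Synthetic [A](t) under pseudo-first-order conditions, k_obs = k[B]0."""
    return a0 * np.exp(-K_TRUE * B0 * t)

# Initial rate: minus the slope of [A] over the first few points (t -> 0)
initial_rates = np.array([-np.polyfit(t[:5], trace(a0)[:5], 1)[0] for a0 in A0_SERIES])

# Order in A: slope of log(initial rate) vs log([A]0)
order_A = np.polyfit(np.log10(A0_SERIES), np.log10(initial_rates), 1)[0]

# First-order linear plot: |slope| of ln[A] vs t is k_obs; divide by [B]0 to recover k
k_obs = -np.polyfit(t, np.log(trace(A0_SERIES[1])), 1)[0]
k = k_obs / B0
print(f"order in A ~ {order_A:.2f}, k_obs = {k_obs:.3g} s^-1, k = {k:.3g} M^-1 s^-1")
```

With real, noisy data, the choice of how many early points define the initial slope becomes a trade-off between curvature bias and noise.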

Protocol: Stopped-Flow Kinetics for Fast Reactions

Objective: To measure the kinetics of reactions occurring on timescales from milliseconds to seconds.

Principles: Stopped-flow instruments automate rapid mixing and immediate data acquisition, minimizing the dead time (the delay between mixing and first measurement) to ~1 ms or less, which is critical for fast reactions [5].

Procedure:

- Load Syringes: Fill the two drive syringes with the reactant solutions [5]. The stop syringe is not pre-filled with reagent; it collects the solution displaced from the observation cell during each push.

- Initiate Rapid Mixing: Activate the drive ram to push reactants through a mixing chamber and into the observation cell, which displaces the contents into the stop syringe [5].

- Trigger Data Collection: When the stop syringe piston hits a mechanical stop, it triggers the spectrometer to begin collecting data (absorbance or fluorescence) in real-time [5].

- Data Analysis: Fit the resulting single-exponential or multi-exponential trace to extract observed rate constants. Vary reactant concentrations to elucidate the mechanism and determine elementary rate constants.
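The data-analysis step can be illustrated with SciPy's `curve_fit`. Each synthetic trace is fitted to a single exponential to obtain k_obs, and a secondary plot of k_obs against the excess-reactant concentration then separates the elementary constants. The sketch assumes a hypothetical reversible binding A + B ⇌ C under pseudo-first-order conditions, for which k_obs = k_on[B] + k_off. The constants, concentrations, signal amplitude, and noise level are placeholders.

```python
import numpy as np
from scipy.optimize import curve_fit

def single_exp(t, amp, k_obs, offset):
    """Single-exponential signal model: S(t) = amp * exp(-k_obs * t) + offset."""
    return amp * np.exp(-k_obs * t) + offset

# Hypothetical reversible binding A + B <=> C, pseudo-first-order in excess B:
# k_obs = K_ON * [B] + K_OFF (placeholder constants, not measured values)
K_ON, K_OFF = 1.0, 5.0                    # uM^-1 s^-1, s^-1
B_UM = np.array([5.0, 10.0, 20.0, 40.0])  # uM excess reactant
t = np.linspace(0, 0.5, 501)              # s, 1 ms sampling after the dead time
rng = np.random.default_rng(1)

k_obs_fit = []
for b in B_UM:
    true_k = K_ON * b + K_OFF
    signal = single_exp(t, 0.8, true_k, 0.1) + 0.005 * rng.standard_normal(t.size)
    p0 = [signal[0] - signal[-1], 20.0, signal[-1]]   # rough starting guesses
    popt, _ = curve_fit(single_exp, t, signal, p0=p0)
    k_obs_fit.append(popt[1])

# Secondary plot: k_obs vs [B]; slope = k_on, intercept = k_off
slope, intercept = np.polyfit(B_UM, k_obs_fit, 1)
print(f"k_on ~ {slope:.3g} uM^-1 s^-1, k_off ~ {intercept:.3g} s^-1")
```

A non-linear (for example, hyperbolic) dependence of k_obs on [B] would instead point to a multi-step mechanism. That is the kind of evidence the "vary reactant concentrations" step is meant to reveal.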

Visualizing Kinetic Concepts and Workflows

Simulation Workflow for Complex Reaction Kinetics

Stopped-Flow Instrument Data Collection Workflow

The Scientist's Toolkit: Essential Reagents & Materials

Table: Key Research Reagent Solutions and Instrumentation for Kinetic Studies

| Item | Function in Kinetic Experiments |
|---|---|
| Stopped-Flow Spectrometer | Enables measurement of rapid reaction kinetics (ms-s) by automating mixing and data collection with minimal dead time [5]. |
| UV-Visible Spectrophotometer | Standard instrument for monitoring concentration changes via absorption of light; can be coupled with stopped-flow or used for slower reactions [5]. |
| Fluorescence Spectrometer | Provides highly sensitive detection for reactions involving fluorescent reactants or products; often used in stopped-flow mode [5]. |
| Temperature-Controlled Cuvette Holder | Maintains constant temperature during reaction, crucial as rate constants are highly temperature-dependent. |
| High-Purity Buffer Systems | Maintain constant pH and ionic strength, ensuring reaction rate changes are due to variables under study and not environmental shifts. |
| Substrate/Enzyme Stock Solutions | Precisely prepared, aliquoted stocks ensure reproducibility when diluted to start reactions. |
| Quench Solution (e.g., strong acid/base) | Rapidly halts a reaction at specific time points for analysis by HPLC or other endpoint methods. |
| Kinetics Simulation Software (e.g., ReKinSim, KINSIM) | Solves systems of ODEs for proposed mechanisms, allowing visual fitting of models to experimental data and extraction of rate constants [7]. |

Antibody-Drug Conjugates (ADCs) represent a transformative class of targeted oncology therapeutics, designed to deliver highly potent cytotoxic agents directly to tumor cells by linking them to monoclonal antibodies via specialized chemical linkers [8]. This architecture aims to maximize efficacy while minimizing the systemic toxicity associated with traditional chemotherapy [9]. The global ADC market is projected to exceed $16 billion by 2025, reflecting rapid clinical adoption and intense investment [10]. However, ADC development is fraught with unique and profound challenges that stem from their inherent structural and functional complexity.

The core challenge is the "tripartite optimization" of the antibody, linker, and payload—components with often conflicting physicochemical and biological requirements [8]. A change in the Drug-to-Antibody Ratio (DAR), linker stability, or payload potency can unpredictably alter pharmacokinetics (PK), efficacy, and toxicity profiles [11] [9]. Traditionally, navigating this complexity has relied on empirical, trial-and-error approaches, leading to high attrition rates, prolonged development timelines, and significant costs [10] [8].

Simulation and modeling have therefore emerged as critical, enabling tools. By applying Quality by Design (QbD) principles—a systematic, risk-based approach to development—simulation allows researchers to proactively identify Critical Quality Attributes (CQAs) and control Critical Process Parameters (CPPs) [10]. This article details how kinetic simulation, particularly within the context of ReKinSim research, provides a foundational framework for de-risking ADC development, reducing costs, and ensuring patient safety through predictive, in silico experimentation.

Core Challenges in ADC Development Addressed by Simulation

The development pathway for ADCs is punctuated by specific, interlinked challenges where simulation offers decisive advantages.

- Payload-Linker Optimization and Heterogeneity: The conjugation process must balance multiple factors. The linker must be stable in circulation to prevent premature, toxic payload release, yet efficiently cleavable inside the target cell [8]. Traditional random conjugation methods produce heterogeneous mixtures with variable DARs and attachment sites, leading to inconsistent PK/PD and batch-to-batch variability [12]. Simulation can model conjugation kinetics and linker stability under physiological conditions to guide the design of more homogeneous, site-specific conjugates [10] [11].

- Predicting Pharmacokinetics/Pharmacodynamics (PK/PD) and Toxicity: The therapeutic index of an ADC is narrow. Off-target toxicity can arise from payload release in circulation, binding to antigens on healthy tissues ("on-target, off-tumor"), or nonspecific uptake [8] [9]. Mechanistic simulation models can integrate data on target expression in tumors versus healthy tissues, antibody affinity, internalization rates, and payload efflux mechanisms to predict exposure-response relationships and identify potential toxicity risks before clinical trials [11] [13].

- Scale-up and Manufacturing Consistency: Transitioning from lab-scale synthesis to commercial manufacturing introduces risks. Maintaining consistent DAR, purity, and stability at scale is technically demanding and costly [10]. Process modeling and simulation are central to QbD, enabling the definition of a design space for manufacturing processes that ensures consistent product quality despite scale-related variables [10].

Table 1: Quantitative Overview of Key ADC Development Challenges and Simulation Impact

| Development Challenge | Key Metric/Issue | Consequence of Failure | Simulation/QbD Mitigation Strategy |
|---|---|---|---|
| Conjugation & Heterogeneity | Variable Drug-to-Antibody Ratio (DAR); Random attachment sites [12] | Unpredictable PK/PD; Reduced efficacy; Increased toxicity [12] [8] | Kinetic modeling of conjugation; Design of site-specific platforms [10] [11] |
| Linker Stability | Premature payload release in systemic circulation [8] | Dose-limiting off-target toxicity [9] | Computational chemistry to model linker cleavage kinetics under physiological pH/enzyme conditions [10] [8] |
| Target Selection | Low tumor specificity; Heterogeneous antigen expression [8] | On-target, off-tumor toxicity; Limited patient response [9] | Systems biology models integrating multi-omics data to prioritize selective, internalizing antigens [11] [8] |
| Manufacturing Scale-up | Batch-to-batch variability in critical quality attributes (CQAs) [10] | Product recalls; Regulatory delays; Cost overruns [10] | Process modeling to define design space and critical process parameters (CPPs) for consistent production [10] |

Simulation Methodologies and Application Notes

Kinetic Simulation with ReKinSim: A Thesis Research Framework

The ReKinSim (Reaction Kinetics Simulator) platform provides a generic mathematical environment for solving complex systems of non-linear ordinary differential equations, which makes it well suited to modeling the multi-step kinetics inherent to ADC behavior [4]. Within thesis research, ReKinSim can be applied to move beyond descriptive biology toward a quantitative, predictive understanding of ADC mechanisms.

A primary application is parameter estimation for payload release kinetics. By defining a reaction network that includes linker cleavage (e.g., via lysosomal proteases or acidic pH) and subsequent intracellular payload diffusion, ReKinSim can fit model outputs to time-course experimental data (e.g., from in vitro assays measuring intracellular payload concentration). This inverse-fitting capability allows researchers to extract critical rate constants that are otherwise difficult to measure directly [4] [7].

Furthermore, ReKinSim can model the complete ADC cellular disposition pathway: antigen binding, receptor internalization, endosomal trafficking, linker cleavage, payload activation, and drug efflux. Simulating this pathway helps identify the rate-limiting steps that govern overall ADC potency and enables in silico testing of how engineering changes (e.g., a more stable linker or a different antibody affinity) would impact the system's output [4].
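A deliberately simplified version of this disposition pathway can be written as a linear chain of first-order steps. Running it helps show how an "engineering change" is tested in silico. The sketch assumes hypothetical rate constants and ignores binding equilibria and payload re-entry. It computes normalized local sensitivity coefficients, S = ∂ln(output)/∂ln(k), of the intracellular free payload at 24 h. Coefficients with large magnitude flag the steps that most constrain this output. This is a simple local analysis, not the global sensitivity analysis that ReKinSim can support [4], and it uses SciPy rather than ReKinSim.

```python
import numpy as np
from scipy.integrate import solve_ivp

# Hypothetical first-order constants in h^-1 (placeholders, not measured values)
RATES = {"k_int": 0.1, "k_traffic": 1.0, "k_cleave": 0.3, "k_efflux": 0.5}
SPECIES = ["surface ADC", "endosomal ADC", "lysosomal ADC", "free payload", "effluxed payload"]

def disposition_rhs(t, y, p):
    """Linear chain: surface -> endosome -> lysosome -> intracellular payload -> effluxed."""
    surf, endo, lyso, free, _out = y
    r_int, r_traf = p["k_int"] * surf, p["k_traffic"] * endo
    r_clv, r_eff = p["k_cleave"] * lyso, p["k_efflux"] * free
    return [-r_int, r_int - r_traf, r_traf - r_clv, r_clv - r_eff, r_eff]

def simulate(params, t_end=24.0):
    """Amounts of each species at t_end hours, normalized to one unit of bound ADC."""
    y0 = [1.0, 0.0, 0.0, 0.0, 0.0]
    sol = solve_ivp(disposition_rhs, (0.0, t_end), y0, args=(params,),
                    rtol=1e-10, atol=1e-12)
    return sol.y[:, -1]

state = simulate(RATES)
for name, amount in zip(SPECIES, state):
    print(f"{name:>17}: {amount:.3f}")

# Normalized local sensitivity of the 24 h free payload, S = d ln(payload) / d ln(k),
# estimated with a +/-1 % central difference on each constant in turn
STEP = 1.01
sensitivity = {}
for name in RATES:
    up, down = dict(RATES), dict(RATES)
    up[name] *= STEP
    down[name] /= STEP
    sensitivity[name] = (np.log(simulate(up)[3]) - np.log(simulate(down)[3])) / (2 * np.log(STEP))
for name, s in sensitivity.items():
    print(f"{name:>9}: S = {s:+.2f}")
```

Slowing a single constant, such as k_cleave to mimic a more stable linker, and re-running `simulate` is the corresponding what-if experiment.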

Flowchart: ReKinSim Simulation Workflow for ADC Kinetics.

Advanced Mechanistic Modeling: QSP and PBPK

Beyond reaction kinetics, two advanced simulation paradigms are essential for ADC development.

Quantitative Systems Pharmacology (QSP) models combine systems biology with pharmacology to simulate how an ADC perturbs a biological network. A platform QSP model for ADCs can incorporate details on tumor growth dynamics, target expression heterogeneity, immune effector functions, and bystander killing effects [11]. These models are used for feasibility analysis, asking questions such as: "What target receptor expression level and antibody affinity are required for efficacy?" or "At what level does expression on healthy tissues drive toxicity?" [11]. This allows for virtual screening of target candidates and ADC designs before resource-intensive experimental work begins.

Physiologically Based Pharmacokinetic (PBPK) modeling builds a virtual representation of the human body with organ compartments connected by blood flow. For ADCs, a whole-body PBPK model can simultaneously describe the PK of the conjugated antibody, the released payload, and the naked antibody [13] [14]. These models are crucial for translational prediction, scaling preclinical results from rats or monkeys to human clinical outcomes [14]. They can also simulate the impact of patient factors (e.g., albumin levels, tumor burden) or dosing regimens on exposure, aiding in clinical trial design [13].

Table 2: Comparison of Primary Simulation Methodologies in ADC Development

| Methodology | Primary Scale | Key Inputs | Primary Outputs | Main Application in ADC Development |
|---|---|---|---|---|
| Kinetic Simulation (e.g., ReKinSim) | Molecular & Cellular | Reaction rate laws, initial concentrations, experimental time-course data [4] | Estimated rate constants, time-concentration profiles of all species [4] [7] | Quantifying linker cleavage & payload release kinetics; Modeling intracellular trafficking steps |
| Quantitative Systems Pharmacology (QSP) | Cellular, Tissue & Tumor | Target expression data, cell proliferation rates, in vitro potency (IC50), PK data [11] | Predicted tumor growth inhibition, dose-response curves, therapeutic index [11] | Early feasibility & target validation; Predicting efficacy/toxicity trade-offs; Bystander effect analysis |
| Physiologically Based PK (PBPK) | Whole Body (Organ-level) | Physiological parameters, antibody PK, payload ADME, deconjugation rates [13] [14] | Concentration-time profiles in plasma and key organs (tumor, liver, etc.) [14] | Preclinical-to-clinical translation; Simulating drug-drug interactions; Optimizing dosing regimens |

Detailed Experimental Protocols Enabled by Simulation

The following protocols exemplify how simulation directly guides and enhances critical ADC experiments.

Protocol 1: In Silico-Guided Design and In Vitro Evaluation of a Novel ADC Conjugate

- Objective: To design, synthesize, and evaluate a novel ADC using a site-specific conjugation platform and a TNM-based payload, with simulation guiding linker selection.

- Background: Site-specific conjugation (e.g., to engineered cysteines or lysines) improves homogeneity. Tiancimycin (TNM) is a potent anthraquinone-fused enediyne (AFE) payload [12].

- Simulation Pre-Work: Use kinetic simulation to model the stability of candidate linkers (non-cleavable, hydrazone, oxime) at plasma pH (7.4) and endosomal/lysosomal pH (5.0-6.0). Rank linkers based on predicted stability and release profiles.

- Experimental Steps:

- Biocatalytic Payload Synthesis: Scale-up fermentation of Streptomyces sp. to produce TNM C. Use the enzyme TnmH for site-specific functionalization at the C7 position to generate propargyl-TNM C, enabling click chemistry conjugation [12].

- Conjugation: Conjugate the functionalized payload via the pre-selected linkers to a dual-variable domain (DVD) antibody containing an engineered lysine for site-specific attachment [12].

- Characterization: Employ hydrophobic interaction chromatography (HIC) and LC-MS to confirm a homogeneous DAR of 2 and assess aggregation [10] [12].

- Validation: Perform in vitro cytotoxicity assays on target-positive (e.g., CD79b+ for B-cell lymphoma) and target-negative cell lines. Compare the experimental IC50 values and selectivity index with the simulations' predictions of potency and release efficiency [12].
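The simulation pre-work can be sketched in a few lines of Python (standard library only). The sketch assumes a hypothetical pseudo-first-order hydrolysis model, k_obs(pH) = k0 + kH·[H⁺], with placeholder constants rather than measured values for real hydrazone or oxime linkers, and ranks linkers by a simple score: fraction intact in plasma multiplied by fraction released in the lysosome. The names `LINKERS`, `k_obs`, `fraction_intact`, and `rank_linkers` are illustrative only.

```python
import math

# Hypothetical pseudo-first-order model for acid-catalysed linker hydrolysis:
#   k_obs(pH) = k0 + kH * [H+],  with [H+] = 10**(-pH)
# k0 (1/h) is a pH-independent background rate; kH (1/(M*h)) is an
# acid-catalysis constant. All values are illustrative placeholders,
# NOT measured constants for real linkers.
LINKERS = {
    "non-cleavable": {"k0": 1e-4, "kH": 0.0},
    "hydrazone":     {"k0": 2e-3, "kH": 5e3},
    "oxime":         {"k0": 5e-4, "kH": 5e2},
}

def k_obs(params, pH):
    """Observed first-order cleavage rate constant (1/h) at a given pH."""
    return params["k0"] + params["kH"] * 10 ** (-pH)

def fraction_intact(params, pH, hours):
    """Fraction of conjugate still intact after `hours`: exp(-k_obs * t)."""
    return math.exp(-k_obs(params, pH) * hours)

def rank_linkers(plasma_pH=7.4, lyso_pH=5.0, plasma_h=96.0, lyso_h=24.0):
    """Score = (intact in plasma) * (released in lysosome); highest score first."""
    scores = {}
    for name, p in LINKERS.items():
        stable = fraction_intact(p, plasma_pH, plasma_h)
        released = 1.0 - fraction_intact(p, lyso_pH, lyso_h)
        scores[name] = stable * released
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

for name, score in rank_linkers():
    print(f"{name:>14s}  score = {score:.3f}")
```

Non-cleavable linkers release payload only after antibody catabolism in the lysosome, which this pH-only score does not capture; a fuller model would add an antibody degradation step before ranking.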

Protocol 2: Integrated QSP-PBPK Modeling to Predict Clinical PK and First-in-Human Dose

- Objective: To translate preclinical data into a predicted clinical PK profile and a recommended starting dose for a novel ADC.

- Background: Clinical translation of ADCs is high-risk due to complex, non-linear PK driven by target-mediated drug disposition (TMDD) and deconjugation [13] [14].

- Simulation Workflow:

- Build Preclinical PBPK Model: Develop a rat PBPK model using data from biodistribution studies for the conjugated antibody, naked antibody, and released payload [14].

- Incorporate QSP Tumor Module: Link the PBPK model to a QSP tumor growth module parameterized with in vitro internalization data and in vivo xenograft efficacy data [11].

- Translate to Human: Allometrically scale physiological parameters. Incorporate human-specific target expression data (from biopsies or literature) and adjust deconjugation rates using in vitro human liver S9 or whole blood stability data [13] [14].

- Optimize & Predict: Use the integrated model to simulate Phase I dosing scenarios. The first-in-human dose is often predicted by identifying the dose that achieves a payload exposure in human tumors similar to the exposure associated with the minimum efficacious dose in animal models.

- Validation: Compare the model-predicted human PK profiles (for conjugate, total antibody, and payload) with Phase I clinical data as it becomes available, and iteratively refine the model [14].
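The allometric step and a first-pass, exposure-matching dose estimate can be sketched as follows. The sketch assumes simple power-law allometry (Y = a·W^b), linear PK (AUC = Dose/CL), a hypothetical rat clearance, and a hypothetical target exposure. Exponents around 0.8 to 0.85 are commonly reported for antibody clearance, but the value used here is an assumption. Matching plasma AUC under linear PK ignores TMDD and deconjugation, which the integrated QSP-PBPK model is meant to capture, so this serves only as a sanity check on the model's prediction.

```python
def allometric_scale(value_animal, weight_animal_kg, weight_human_kg, exponent):
    """Simple allometry: Y_human = Y_animal * (W_human / W_animal) ** exponent."""
    return value_animal * (weight_human_kg / weight_animal_kg) ** exponent

def dose_for_target_auc(target_auc_mg_h_per_ml, clearance_ml_per_h):
    """Linear-PK assumption: AUC = Dose / CL, so Dose (mg) = AUC * CL."""
    return target_auc_mg_h_per_ml * clearance_ml_per_h

# Hypothetical inputs for illustration only.
cl_rat = 0.1                                   # mL/h, ADC clearance in a 0.25 kg rat
cl_human = allometric_scale(cl_rat, 0.25, 70.0, exponent=0.85)  # exponent is assumed
dose_mg = dose_for_target_auc(10.0, cl_human)  # target AUC of 10 mg*h/mL (hypothetical)

print(f"Scaled human CL: {cl_human:.1f} mL/h; dose for target AUC: {dose_mg:.0f} mg")
```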

Protocol 3: Characterization of ADC Binding, Internalization, and Payload Release Kinetics

- Objective: To generate quantitative data on key cellular kinetic parameters for input into ReKinSim and QSP models.

- Background: The rate of antigen binding, complex internalization, and intracellular payload release are critical determinants of ADC activity [9].

- Binding Affinity (SPR/BLI): Determine the antibody's binding kinetics (ka, kd, KD) to the recombinant antigen using surface plasmon resonance or bio-layer interferometry.

- Internalization Flow Cytometry:

- Label the ADC with a pH-insensitive fluorescent dye (e.g., Alexa Fluor 647).

- Incubate with target-positive cells at 4°C to allow binding without internalization.

- Shift to 37°C to initiate internalization. At each time point, strip surface-bound ADC with an acidic glycine buffer.

- Analyze by flow cytometry to quantify the remaining (acid-resistant, internalized) fluorescence over time [15].

- Payload Release Assay (LC-MS/MS):

- Treat cells with the ADC and, at specified time points, lyse them.

- Use solid-phase extraction to isolate the released payload from cell lysates.

- Quantify the payload concentration using a validated LC-MS/MS method.

- Data Integration: The time-course data from the internalization and payload release assays serve as the essential experimental dataset for fitting and validating a ReKinSim model of the complete cellular uptake and release pathway.
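To show how the Protocol 3 outputs could feed a kinetic model, the following Python sketch (standard library only) converts hypothetical SPR/BLI rate constants into the units of a small ODE model of binding, internalization, and payload release, then integrates it with a fixed-step Runge-Kutta scheme. It is not ReKinSim's input format or API; the model structure (constant ADC concentration in the medium, instantly replenished surface receptor) and every parameter value are assumptions for illustration.

```python
# Hypothetical cellular uptake/release model; every constant is an illustrative
# placeholder, not a measured value for any real ADC.
#   ADC (held constant at A) + free receptor <-> surface complex B   (ka, kd)
#   B -> internalized ADC I                                          (ke)
#   I -> released payload P                                          (krel)
# Free receptor is taken as R_tot - B (assumes instant receptor replenishment).

def per_m_per_s_to_per_nm_per_h(k):
    """Convert an association rate constant from 1/(M*s) to 1/(nM*h)."""
    return k * 1e-9 * 3600.0

def per_s_to_per_h(k):
    """Convert a first-order rate constant from 1/s to 1/h."""
    return k * 3600.0

KA_SPR = 1e5     # association rate ka, 1/(M*s), hypothetical SPR/BLI result
KOFF_SPR = 1e-4  # dissociation rate kd, 1/s, hypothetical SPR/BLI result
KD_EQ = KOFF_SPR / KA_SPR  # equilibrium dissociation constant KD = kd/ka (M)

PARAMS = {
    "ka": per_m_per_s_to_per_nm_per_h(KA_SPR),  # 1/(nM*h)
    "kd": per_s_to_per_h(KOFF_SPR),              # 1/h
    "ke": 0.1,     # internalization rate, 1/h (would be fitted to flow data)
    "krel": 0.05,  # payload release rate, 1/h (would be fitted to LC-MS/MS data)
    "A": 10.0,     # ADC concentration in the medium, nM
    "R_tot": 1.0,  # total surface receptor, normalized units
}

def rhs(t, y, p):
    """Right-hand side of the ODE system; y = [B, I, P]."""
    B, I, P = y
    dB = p["ka"] * p["A"] * (p["R_tot"] - B) - p["kd"] * B - p["ke"] * B
    dI = p["ke"] * B - p["krel"] * I
    dP = p["krel"] * I
    return [dB, dI, dP]

def rk4(f, y0, t_end, dt):
    """Fixed-step classical Runge-Kutta integrator; returns [(t, y), ...]."""
    t, y = 0.0, list(y0)
    out = [(t, list(y))]
    for _ in range(int(round(t_end / dt))):
        k1 = f(t, y)
        k2 = f(t + dt / 2, [a + dt / 2 * b for a, b in zip(y, k1)])
        k3 = f(t + dt / 2, [a + dt / 2 * b for a, b in zip(y, k2)])
        k4 = f(t + dt, [a + dt * b for a, b in zip(y, k3)])
        y = [a + dt / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
             for a, b1, b2, b3, b4 in zip(y, k1, k2, k3, k4)]
        t += dt
        out.append((t, list(y)))
    return out

trajectory = rk4(lambda t, y: rhs(t, y, PARAMS), [0.0, 0.0, 0.0], t_end=48.0, dt=0.01)
```

In a real study, `ke` and `krel` would be estimated against the internalization and LC-MS/MS time courses rather than fixed, and receptor turnover or lysosomal degradation steps could be added if the residuals call for them.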

Table 3: Key Research Reagent Solutions for ADC Simulation & Experimental Work

| Item/Category | Function/Description | Example/Application in Protocols |
|---|---|---|
| Site-Specific Conjugation Kits | Enable generation of homogeneous ADCs with defined DAR (e.g., Thiomab/engineered Cys, enzyme-mediated) [10] [12] | Protocol 1: Conjugation to engineered lysine on DVD-IgG1 format [12]. |
| TNM-based Payload & Biocatalysis System | A potent, synthetically tractable enediyne payload platform. TnmH enzyme enables precise C7 functionalization for linker attachment [12]. | Protocol 1: Production of propargyl-TNM C as a conjugation-ready payload intermediate [12]. |
| Advanced Analytical Standards | Critical for characterizing CQAs. Includes DAR standards, payload metabolites, and stable isotope-labeled internal standards for LC-MS [10]. | Protocols 1 & 3: Quantifying DAR by HIC or LC-MS; measuring released payload in cells via LC-MS/MS [10] [12]. |
| Fluorescent & Cytotoxic Payload-Linker Derivatives | Tool compounds for tracking ADC fate (fluorescence) and measuring potency (cytotoxicity) in parallel assays [15]. | Protocol 3: Alexa Fluor 647-labeled ADC for internalization studies [15]. |
| QSP/PBPK Platform Software | Commercial or open-source software (e.g., Certara's platform, PK-Sim/MoBi) containing pre-validated systems or physiological templates for ADC modeling [11] [13]. | Protocol 2: Building and translating integrated QSP-PBPK models for clinical prediction [11] [13] [14]. |
| ReKinSim or KINSIM Software | Flexible kinetic simulation environments for solving systems of ODEs and fitting parameters to experimental time-course data [4] [7]. | Core Thesis Tool: Modeling intracellular ADC kinetics and estimating rate constants from Protocol 3 data [4]. |

Flowchart: The ADC Design-Simulation Iterative Cycle.

Applying ReKinSim in Thesis Research: A Practical Framework

Within a thesis on ReKinSim tutorial research, ADC development provides a rich, real-world application domain. The research can be structured to demonstrate how kinetic simulation moves from a descriptive tool to a predictive engine for QbD.

A foundational thesis project could involve developing and validating a public, annotated ReKinSim model for a canonical ADC mechanism. This model would include reactions for binding, internalization, trafficking to lysosomes, linker cleavage, and payload diffusion to the nucleus. By parameterizing this model with public data from a well-characterized ADC like T-DM1, the research would create a benchmark and educational resource for the community.

The core of the thesis could then focus on applying this framework to a novel, unresolved kinetic question. For example: "Does payload efflux via P-glycoprotein (P-gp) from resistant cells act as a significant sink that alters the apparent kinetics of linker cleavage in intracellular compartments?" [9]. The research would involve:

- Extending the benchmark ReKinSim model to include P-gp efflux reactions.

- Designing experiments (following Protocol 3) to generate time-course data in isogenic cell pairs (P-gp+ vs. P-gp-).

- Using ReKinSim's inverse-fitting capability to estimate the efflux rate constants from the data.

- Performing sensitivity analysis to determine if efflux is a critical parameter controlling overall ADC activity in resistant settings.
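The efflux extension and the sensitivity analysis can be illustrated with a deliberately small toy model: internalized ADC I releases payload into the cytosol (rate constant krel), and P-gp plus passive transport removes it (keff). The Python sketch below (standard library only) integrates the two ODEs, computes the area under the intracellular payload curve (AUC), and estimates normalized local sensitivities d ln(AUC)/d ln(k) by central finite differences. The model, the parameter names, and the values for a "P-gp+" cell line are all hypothetical.

```python
import math

# Hypothetical toy model (illustrative constants, 1/h):
#   dI/dt = -krel * I                 release from internalized ADC
#   dP/dt =  krel * I - keff * P      intracellular payload with efflux sink

def simulate_payload(krel, keff, i0=1.0, t_end=200.0, dt=0.05):
    """Integrate the toy model with fixed-step RK4; return (times, P values)."""
    def f(y):
        i, p = y
        return (-krel * i, krel * i - keff * p)
    y = (i0, 0.0)
    times, p_vals = [0.0], [0.0]
    for step in range(1, int(round(t_end / dt)) + 1):
        k1 = f(y)
        k2 = f(tuple(a + dt / 2 * b for a, b in zip(y, k1)))
        k3 = f(tuple(a + dt / 2 * b for a, b in zip(y, k2)))
        k4 = f(tuple(a + dt * b for a, b in zip(y, k3)))
        y = tuple(a + dt / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
                  for a, b1, b2, b3, b4 in zip(y, k1, k2, k3, k4))
        times.append(step * dt)
        p_vals.append(y[1])
    return times, p_vals

def auc(times, values):
    """Trapezoidal area under the curve."""
    return sum((t1 - t0) * (v0 + v1) / 2
               for t0, t1, v0, v1 in zip(times, times[1:], values, values[1:]))

def normalized_sensitivity(param, base, rel_step=1e-3):
    """Central-difference estimate of d ln(AUC_P) / d ln(param)."""
    def auc_for(value):
        kwargs = dict(base)
        kwargs[param] = value
        return auc(*simulate_payload(**kwargs))
    x = base[param]
    up, down = auc_for(x * (1 + rel_step)), auc_for(x * (1 - rel_step))
    return (math.log(up) - math.log(down)) / (math.log(1 + rel_step) - math.log(1 - rel_step))

BASE = {"krel": 0.2, "keff": 0.5}  # hypothetical P-gp+ cell line
s_keff = normalized_sensitivity("keff", BASE)
s_krel = normalized_sensitivity("krel", BASE)
```

In this toy model the long-horizon AUC equals I0/keff, so its normalized sensitivity is about -1 for keff and about 0 for krel: efflux, not release, sets cumulative intracellular exposure. Whether that conclusion survives finite time horizons and nonlinear steps in the full benchmark model is exactly what the proposed analysis would test.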

This work directly contributes to the QbD paradigm by identifying a new critical parameter (the intracellular efflux rate) that could influence the design of next-generation payloads or combination therapies to overcome resistance.

Future Directions: AI Integration and Personalized Medicine

The future of ADC simulation lies in its integration with Artificial Intelligence (AI) and large-scale data, creating closed-loop "Design-Build-Test-Learn" (DBTL) cycles [8].

- AI-Enhanced Design: Generative AI models can propose novel linker-payload structures optimized for multiple objectives (stability, solubility, potency). These proposed structures can first be screened in silico using QSP models for efficacy/toxicity predictions and ReKinSim for kinetic feasibility before any chemical synthesis [8].

- Digital Twins for Patients: Advanced PBPK/QSP models, individualized with a patient's own multi-omics data (tumor antigen expression profile, liver enzyme levels), can function as "digital twins." These models could simulate a patient's response to different ADC dosing regimens, moving towards truly personalized oncology treatment plans [11] [8].

- Closing the Loop with Machine Learning: The experimental data generated from protocols guided by initial simulations become training data for machine learning algorithms. These ML models can learn to identify complex, non-linear relationships between ADC structural features and in vivo outcomes, further refining and accelerating the next round of design [8].

Flowchart: The AI-Augmented Design-Build-Test-Learn (DBTL) Cycle for ADCs.

The development of safe, effective, and affordable Antibody-Drug Conjugates is one of the most complex endeavors in modern biotherapeutics. The traditional empirical approach is no longer sufficient to navigate the intricate trade-offs between antibody targeting, linker stability, and payload potency. As detailed in these application notes and protocols, simulation is not a supplementary activity but a critical core competency for modern ADC development.

Through kinetic simulation (ReKinSim), Quantitative Systems Pharmacology (QSP), and Physiologically Based Pharmacokinetic (PBPK) modeling, the principles of Quality by Design can be rigorously implemented. This allows teams to shift resources from late-stage, high-cost failure to early-stage, in silico de-risking. By predicting clinical outcomes, optimizing manufacturing processes, and guiding personalized therapy, simulation directly addresses the imperatives of cost reduction, patient safety, and robust quality. The integration of these methodologies, particularly within thesis research that pushes the boundaries of kinetic modeling, will be instrumental in unlocking the full potential of ADCs and delivering next-generation therapies to patients in need.

This document provides comprehensive application notes and protocols for ReKinSim (Reaction Kinetics Simulator), a modeling framework for solving and inversely fitting complex systems of biogeochemical reactions [4]. Developed as a response to the limitations of existing kinetic simulation tools, ReKinSim offers a unique combination of flexibility in model definition, computational efficiency, and user-friendliness [4]. The core thesis of this research is that ReKinSim represents a significant advancement in kinetic parameter estimation by removing arbitrary constraints on reaction network complexity and seamlessly integrating environmental dynamics. It serves as an essential platform for researchers and drug development professionals to elucidate rate-determining steps, quantify kinetic parameters from experimental data, and predict system behavior under novel conditions. By providing a detailed overview of its interface, core functionality, and workflow, this tutorial aims to bridge the gap between theoretical kinetic modeling and practical laboratory application.

Platform Architecture and Interface

ReKinSim is built on a modular architecture designed for versatility and integration. Its primary interface is a script-based environment, typically accessed through computational platforms like MATLAB or Python, allowing users to define models programmatically. This design provides maximum flexibility for representing complex, non-linear interactions common in environmental and biochemical systems [4].

Table 1: Comparison of ReKinSim with Related Simulation Platforms

| Platform/Tool | Primary Focus | Key Limitation | ReKinSim's Advantage |
|---|---|---|---|
| KINSIM [16] | General chemical & enzyme kinetics | Fixed, limited reaction mechanisms; older architecture. | Unlimited, arbitrary ODE systems; modern, efficient solver [4]. |
| RecSim/RecSim NG [17] [18] | Recommender system ecosystems | Specialized for user-item-agent interactions, not chemical kinetics. | Generic framework for biogeochemical and kinetic reactions [4]. |
| Standard ODE Suites | General numerical solution | Lack of built-in, flexible inverse-fitting modules for parameter estimation. | Integrated, easy-to-use module for nonlinear data-fitting [4]. |

The interface is structured around three core modules:

- Model Definition Module: Users specify reactions, stoichiometry, rate laws, and initial conditions.

- Simulation Engine: A robust numerical solver for systems of ordinary differential equations (ODEs).

- Parameter Estimation Module: An optimization toolkit for fitting model parameters to experimental data.

Figure 1: ReKinSim's modular software architecture and data flow.

Core Functionality

ReKinSim's functionality is defined by its capacity to handle kinetic complexity and its integrated fitting approach, which directly supports the research thesis on elucidating controlling factors in environmental systems [4].

Table 2: Key Functional Capabilities of ReKinSim

| Functionality Category | Specific Capability | Application Example |
|---|---|---|
| Model Formulation | Define unlimited, arbitrary non-linear ODEs. | Modeling coupled biotic/abiotic transformation networks [4]. |
| Reaction Network Scope | Include any number/type of reactions; incorporate isotope fractionation and mass transfer. | Studying masked isotope fractionation due to cell wall permeation [19]. |
| Inverse Modeling | Flexible non-linear data-fitting to estimate kinetic parameters. | Estimating degradation rate constants (k) and half-lives from concentration time series. |
| System Integration | Solve chemical kinetics alongside other environmental dynamics. | Coupling reaction kinetics with diffusion or sorption processes. |

The core solver employs advanced numerical integration techniques suitable for stiff ODE systems often encountered in reaction networks. Its parameter estimation module uses gradient-based or heuristic optimization algorithms to minimize the difference between simulated results and experimental observations, a critical step for model calibration and validation.
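Why stiff systems call for implicit integration can be seen on the simplest stiff problem, fast first-order decay dy/dt = -k·y with k = 1000 and a step size of 0.01. Forward (explicit) Euler is stable only for dt < 2/k, while backward (implicit) Euler is stable for any step size. This Python sketch is illustrative only and does not describe ReKinSim's actual solver; production stiff solvers (BDF-type methods, for example MATLAB's `ode15s`) use adaptive steps and Newton iterations rather than the closed-form update that works here only because the equation is linear.

```python
def explicit_euler_decay(k, y0, dt, n_steps):
    """Forward Euler for dy/dt = -k*y: y_{n+1} = y_n * (1 - k*dt)."""
    y = y0
    for _ in range(n_steps):
        y = y + dt * (-k * y)
    return y

def implicit_euler_decay(k, y0, dt, n_steps):
    """Backward Euler for dy/dt = -k*y: y_{n+1} = y_n / (1 + k*dt).

    For a linear ODE the implicit equation has a closed-form solution;
    nonlinear reaction networks would need a Newton iteration at every step.
    """
    y = y0
    for _ in range(n_steps):
        y = y / (1.0 + k * dt)
    return y

k, dt, n = 1000.0, 0.01, 10   # k*dt = 10, far above the explicit stability limit of 2
explicit = explicit_euler_decay(k, 1.0, dt, n)   # multiplies by about -9 each step
implicit = implicit_euler_decay(k, 1.0, dt, n)   # divides by 11 each step
print(f"explicit: {explicit:.3e}, implicit: {implicit:.3e}")
```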

Complete Workflow Protocol

The following protocol outlines the standard workflow for using ReKinSim, from problem definition to analysis.

Protocol 1: End-to-End Kinetic Modeling with ReKinSim

Objective: To construct a kinetic model, calibrate it against experimental data, and use it for predictive simulation.

Materials: ReKinSim software (accessed via a compatible computational environment); experimental dataset (e.g., time-course concentration measurements).

Procedure:

Problem Definition & Conceptual Model:

- Define the system boundaries and key chemical or biological species.

- Draft the reaction network, including all hypothesized transformation pathways.

- Formulate explicit mathematical expressions for each rate law (e.g., mass-action, Michaelis-Menten).

Implementation in ReKinSim:

- In the script interface, declare all state variables (species concentrations) and their initial conditions.

- Program the system of ODEs defined in the conceptual model, linking each derivative to the corresponding rate laws.

- Set the numerical integration parameters (simulation time span, solver tolerances).

Parameter Estimation (Model Calibration):

- Load the experimental dataset (e.g., a table of time vs. concentration).

- Identify which model parameters are unknown and must be fitted (e.g., rate constants k).

- Define an objective function (e.g., sum of squared errors) comparing simulation output to data.

- Execute the fitting routine to find the parameter set that minimizes the objective function.

- Assess the goodness-of-fit using statistical measures (e.g., R², confidence intervals on parameters).

Model Validation & Prediction:

- Validate the calibrated model using a separate, independent dataset not used for fitting.

- Perform sensitivity analysis to identify which parameters most influence key outputs.

- Run predictive simulations under new conditions (e.g., different initial concentrations, temperature).
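The calibration and validation steps can be sketched for the simplest possible network, a single first-order reaction A → B, whose ODE dA/dt = -k·A has a closed-form solution. The Python sketch below (standard library only) generates synthetic noisy data from an assumed true k, fits k by golden-section search on log10(k) that minimizes the sum of squared errors, and then checks R² on a second, independent synthetic dataset with a different initial concentration. It stands in for ReKinSim's fitting module rather than reproducing it; all numbers are made up.

```python
import math
import random

def simulate(k, c0, times):
    """Closed-form solution of dA/dt = -k*A for a single first-order reaction."""
    return [c0 * math.exp(-k * t) for t in times]

def sse(k, times, data, c0):
    """Objective function: sum of squared errors between data and simulation."""
    return sum((m - s) ** 2 for m, s in zip(data, simulate(k, c0, times)))

def r_squared(obs, pred):
    mean = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean) ** 2 for o in obs)
    return 1.0 - ss_res / ss_tot

def fit_k(times, data, c0, log_lo=-3.0, log_hi=1.0, iters=80):
    """Golden-section search on log10(k) minimizing the SSE objective."""
    g = (math.sqrt(5) - 1) / 2
    f = lambda x: sse(10 ** x, times, data, c0)
    a, b = log_lo, log_hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = f(c), f(d)
    for _ in range(iters):
        if fc < fd:                      # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = f(c)
        else:                            # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = f(d)
    return 10 ** ((a + b) / 2)

def make_dataset(k_true, c0, times, rel_noise, seed):
    """Synthetic measurements with multiplicative Gaussian noise (hypothetical)."""
    rng = random.Random(seed)
    return [c * (1 + rng.gauss(0, rel_noise)) for c in simulate(k_true, c0, times)]

times = [0.5 * i for i in range(1, 13)]                       # h
calib = make_dataset(0.3, 10.0, times, 0.02, seed=1)          # used for fitting
valid = make_dataset(0.3, 5.0, times, 0.02, seed=2)           # held out

k_hat = fit_k(times, calib, c0=10.0)
r2_calib = r_squared(calib, simulate(k_hat, 10.0, times))
r2_valid = r_squared(valid, simulate(k_hat, 5.0, times))
print(f"k_hat = {k_hat:.4f} 1/h, R2 calib = {r2_calib:.4f}, R2 valid = {r2_valid:.4f}")
```

Searching on log10(k) rather than k keeps the search well scaled when plausible rate constants span several orders of magnitude; networks with several unknown parameters need a multivariate optimizer instead of a one-dimensional search.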

Figure 2: The iterative workflow for kinetic parameter estimation using ReKinSim.

Experimental Protocols for Generating Calibration Data

The power of ReKinSim is realized when fitting models to high-quality experimental data. The following protocol, adapted from a study on atrazine biodegradation, exemplifies the generation of data for discriminating between kinetic and mass-transfer limitations [19].

Protocol 2: Isotope Fractionation Experiment for Identifying Rate-Limiting Steps

Objective: To determine whether pollutant biodegradation is limited by enzymatic kinetics or by mass transfer across the cell membrane, using Compound-Specific Isotope Analysis (CSIA).

Rationale: Enzymatic bond cleavage favors lighter isotopes (¹²C over ¹³C), leading to isotope fractionation. If mass transfer (e.g., diffusion across a cell wall) is slow relative to enzyme turnover, it becomes the rate-limiting step and masks this isotopic signal. The magnitude of the observable isotope enrichment factor (ε) reveals the nature of the rate-limiting step [19].

Materials:

- Target Chemical: Atrazine (or relevant substrate).

- Microbial Strains: Gram-negative (Polaromonas sp. Nea-C) and Gram-positive (Arthrobacter aurescens TC1) bacteria [19].

- Culture Medium: Minimal salts medium (MSM).

- Inhibitor: Potassium cyanide (KCN) solution for active transport inhibition.

- Equipment: HPLC-UV for concentration analysis; Gas Chromatograph-Isotope Ratio Mass Spectrometer (GC-IRMS) for CSIA; French pressure cell for preparing cell-free extracts; centrifuge.

Procedure:

- Cultivation: Grow bacterial strains in MSM with atrazine as the sole carbon/nitrogen source until mid-exponential phase [19].

- Experiment Setup:

- Whole-Cell Assays: Harvest, wash, and resuspend cells in fresh MSM with atrazine (~30 mg/L). Run degradation experiments in batch reactors [19].

- Cell-Free Extract Assays: Disrupt washed cells using a French press and filter to obtain cell-free extracts. Incubate extract with atrazine [19].

- Inhibition Assays: To whole-cell suspensions, add KCN to a final concentration of 1-2 mM to inhibit active transport processes [19].

- Sampling: Periodically collect samples from each assay. Terminate reactions immediately via sterile filtration (0.2 μm) [19].

- Chemical Analysis:

- Concentration: Analyze filtrate via HPLC-UV to determine atrazine concentration over time.

- Isotope Ratio: Extract atrazine from filtrate, purify, and analyze the ¹³C/¹²C ratio via GC-IRMS [19].

- Data Processing:

- Plot remaining atrazine fraction (C/C₀) versus time.

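- Plot ln(R/R₀), where R is the ¹³C/¹²C ratio at each sampling point and R₀ the initial ratio, against the natural logarithm of the remaining atrazine fraction (ln(C/C₀)).

- Determine the apparent isotope enrichment factor (ε) from the slope of the linear regression in the second plot, following the Rayleigh equation ln(R/R₀) = ε · ln(C/C₀), with ε expressed as a fraction (multiply by 1000 to report it in ‰) [19].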
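The Rayleigh regression in the data-processing step can be written directly in terms of δ¹³C values, because R/R₀ = (1000 + δ)/(1000 + δ₀) when δ is in per mil. The Python sketch below fits the slope through the origin by least squares and returns ε in per mil. The synthetic data are generated from the Rayleigh equation with an assumed ε of -5.4‰ and a hypothetical initial δ¹³C of -28‰, so the function should recover -5.4‰ up to floating-point rounding; real data would scatter around the line.

```python
import math

def rayleigh_epsilon(fractions, delta13c, delta13c_initial):
    """Estimate the enrichment factor epsilon (per mil) from the Rayleigh equation.

    ln(R_t/R_0) = (epsilon/1000) * ln(C_t/C_0), where
    R_t/R_0 = (1000 + delta_t) / (1000 + delta_0) for delta values in per mil.
    The slope is fitted by least squares through the origin.
    """
    x = [math.log(f) for f in fractions]
    y = [math.log((1000.0 + d) / (1000.0 + delta13c_initial)) for d in delta13c]
    slope = sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)
    return 1000.0 * slope

# Synthetic, noise-free illustration (assumed values, not measured data).
eps_true = -5.4                      # per mil
delta0 = -28.0                       # hypothetical initial delta13C, per mil
fractions = [0.9, 0.7, 0.5, 0.3, 0.1]
deltas = [(1000.0 + delta0) * f ** (eps_true / 1000.0) - 1000.0 for f in fractions]

eps_hat = rayleigh_epsilon(fractions, deltas, delta0)
print(f"estimated epsilon = {eps_hat:.2f} per mil")
```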

Interpretation for ReKinSim Modeling:

- An ε value that is large in magnitude (e.g., -5.4‰) indicates that enzymatic transformation is rate-limiting and that the observed ε is close to the intrinsic enzymatic value.

- An apparent ε of smaller magnitude (e.g., -3.5‰) suggests that mass transfer (e.g., diffusion through a Gram-negative outer membrane) is partially rate-limiting, masking the full enzymatic isotope effect [19].

- In ReKinSim, this is modeled by explicitly adding diffusion steps (governed by permeability constants) into the reaction network. The fitted permeability constant can be compared across experimental conditions (e.g., Gram-negative vs. Gram-positive, with/without inhibitor) to validate hypotheses.
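A minimal version of this idea can be worked out analytically under stated assumptions: (i) substrate exchange between medium and cell interior is first order, with the same rate constant k_tr in both directions, and does not fractionate; (ii) intracellular degradation is first order with rate constant k for the light isotopologue and αk for the heavy one, where α = 1 + ε_enz/1000; (iii) the interior is at steady state. The interior concentration is then k_tr/(k_tr + k) times the exterior one, and the ratio of heavy to light observed rate constants gives α_app - 1 = (α - 1)·k_tr/(k_tr + αk). The Python sketch below encodes this relation and its inverse. Here k_tr is a lumped stand-in for a permeability constant multiplied by membrane area over cell volume, and the function names are hypothetical; this is a simplification of an explicit ReKinSim reaction network, not a substitute for one.

```python
def apparent_epsilon(eps_enz, k_tr, k_enz):
    """Apparent enrichment factor (per mil) when non-fractionating mass transfer
    (rate constant k_tr, both directions) precedes first-order enzymatic
    degradation (k_enz) with intrinsic enrichment eps_enz, at steady state."""
    alpha = 1.0 + eps_enz / 1000.0
    return eps_enz * k_tr / (k_tr + alpha * k_enz)

def transfer_to_enzyme_ratio(eps_app, eps_enz):
    """Invert apparent_epsilon: return k_tr / k_enz for observed and intrinsic epsilon."""
    alpha = 1.0 + eps_enz / 1000.0
    q = eps_app / eps_enz          # fraction of the intrinsic effect still visible
    return alpha * q / (1.0 - q)

# Illustration only: treat -5.4 per mil as the intrinsic value and -3.5 as observed.
ratio = transfer_to_enzyme_ratio(-3.5, -5.4)
print(f"k_tr / k_enz = {ratio:.2f}")
```

Under these assumptions an observed -3.5‰ against an intrinsic -5.4‰ implies k_tr/k of roughly 1.8, meaning transport is only about twice as fast as the enzymatic step; fitting the explicit diffusion steps in ReKinSim relaxes the steady-state and first-order simplifications.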

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Research Reagents and Materials for Kinetic Studies

| Item | Function in Kinetic Studies | Relevance to ReKinSim |
|---|---|---|
| Isotopically Labeled Substrates (e.g., ¹³C-atrazine) | Enable tracking of specific atoms through reaction pathways; essential for CSIA to measure isotope fractionation factors [19]. | Provides critical data (ε values) to discriminate between kinetic and transport limitations in a model. |
| Metabolic/Transport Inhibitors (e.g., KCN) | Selectively inhibit active transport or specific enzymatic pathways to isolate contributions of different processes [19]. | Used to generate contrasting datasets for model discrimination and to validate hypothesized mechanisms. |
| Cell Disruption Tools (French Press, Sonication) | Produce cell-free extracts to study enzyme kinetics without the complicating factor of cellular uptake [19]. | Generates data representing the "intrinsic" kinetic parameters, which can be compared to whole-cell data to fit membrane permeability constants. |
| Specialized Analytical Chemistry (HPLC-UV/MS, GC-IRMS) | Quantify chemical concentrations over time (HPLC) and measure precise isotope ratios (GC-IRMS) [19]. | Source of primary time-course data (concentration) and advanced mechanistic data (isotope ratios) for model calibration and validation. |
| Defined Mineral Salt Media | Provide a controlled, reproducible chemical environment for microbial growth and degradation experiments [19]. | Minimizes uncontrolled variables, ensuring that kinetic models are fitted to data reflecting the fundamental processes of interest. |

The ReKinSim (Reaction Kinetics Simulator) framework represents a significant advancement in modeling biogeochemical reactions and complex environmental systems [4]. This simulation environment serves as a generic mathematical tool for solving sets of arbitrary non-linear ordinary differential equations, with no limit on the number or type of reactions or other influential dynamics [4]. For researchers, scientists, and drug development professionals pursuing thesis research on reaction kinetics simulation, mastering ReKinSim provides essential capabilities for parameter estimation and nonlinear data fitting, turning experimental data into predictive models [20].

In pharmaceutical research, mechanistic systems modeling has emerged as a crucial approach for guiding drug discovery and development decisions [21]. These models help address a fundamental question in the drug development process: whether a proposed therapeutic target will yield the desired effect in clinical populations. With pharmaceutical companies investing substantially in research long before confirmatory human trial data are available, kinetic simulation platforms like ReKinSim offer a computational framework to reduce development uncertainty and improve return on investment [21]. The platform's flexibility allows integration of environmentally related processes alongside chemical kinetics, enabling researchers to elucidate the extent to which these processes are controlled by factors other than kinetics [4].

Defining Chemical Species and Reaction Mechanisms

Chemical Species Specification

The foundation of any kinetic simulation is the precise definition of all chemical entities participating in the system. In ReKinSim, species are defined not merely as participants in reactions but as state variables whose concentrations change according to kinetic laws. Each species requires specification of:

- Initial concentration (for each experimental condition)

- Physical state (aqueous, gaseous, surface-bound)

- Measurement units (ensuring consistency throughout)

- Observability (whether it can be measured experimentally)

For drug development applications, species often include therapeutic compounds, endogenous metabolites, enzyme complexes, and signaling molecules. The granularity of species definition should match the research question—molecular-level detail for enzyme mechanism studies versus pathway-level aggregation for systems pharmacology models [21].

Reaction Mechanism Formulation

Reaction mechanisms in ReKinSim are constructed as sets of elementary steps that collectively describe the transformation of chemical species. Each reaction requires definition of:

- Stoichiometry (reactants and products with coefficients)

- Rate law (mathematical expression relating rate to concentrations)

- Rate constants (with initial estimates and feasible bounds)

- Reversible/irreversible designation

The platform supports unlimited reaction types including biochemical transformations, isotope fractionation processes, and small-scale mass-transfer limitations [4]. For complex drug action models, mechanisms may incorporate target binding, signal transduction cascades, metabolic conversions, and transport processes across compartments [21].

Mathematical Representation

The collective behavior of defined species and reactions is represented mathematically as a system of ordinary differential equations (ODEs). For each species i, the rate of concentration change is given by:

$$\frac{d[X_i]}{dt} = \sum (\text{production rates}) - \sum (\text{consumption rates})$$

where each rate term is determined by the kinetic laws of reactions involving that species. ReKinSim's computational engine solves these coupled ODEs numerically, accommodating the non-linear relationships inherent in biochemical systems [4].
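The production-minus-consumption bookkeeping can be made concrete with a stoichiometry-driven right-hand side. The Python sketch below uses a hypothetical data layout, not ReKinSim's model syntax: each elementary step stores its reactant and product coefficients and a mass-action rate constant, reversible binding is written as two irreversible steps, and d[X_i]/dt is assembled for a Michaelis-Menten mechanism with illustrative constants (concentrations in µM, time in s).

```python
def mass_action_rate(k, reactants, conc):
    """Rate of an elementary step: k * product of [X]**order over its reactants."""
    r = k
    for species, order in reactants.items():
        r *= conc[species] ** order
    return r

def ode_rhs(reactions, conc):
    """d[X_i]/dt = sum over reactions of (production - consumption) of species i."""
    dxdt = {s: 0.0 for s in conc}
    for rxn in reactions:
        r = mass_action_rate(rxn["k"], rxn["reactants"], conc)
        for s, nu in rxn["reactants"].items():
            dxdt[s] -= nu * r          # consumption
        for s, nu in rxn["products"].items():
            dxdt[s] += nu * r          # production
    return dxdt

# Hypothetical mechanism: E + S -> ES (k1), ES -> E + S (k-1), ES -> E + P (kcat).
REACTIONS = [
    {"k": 1.0,  "reactants": {"E": 1, "S": 1}, "products": {"ES": 1}},        # 1/(uM*s)
    {"k": 0.1,  "reactants": {"ES": 1},        "products": {"E": 1, "S": 1}},  # 1/s
    {"k": 10.0, "reactants": {"ES": 1},        "products": {"E": 1, "P": 1}},  # 1/s
]

state = {"E": 0.01, "S": 10.0, "ES": 0.005, "P": 0.0}   # uM, illustrative
rates = ode_rhs(REACTIONS, state)
print(rates)
```

A quick sanity check for any such network is conservation: in this mechanism, total enzyme (E + ES) and total substrate-derived material (S + ES + P) must have zero net rate.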

Quantitative Parameters for Simulation Setup

Table: Essential Parameters for Initial Simulation Configuration

| Parameter Category | Specific Parameters | Typical Values/Ranges | Source Determination |
|---|---|---|---|
| Kinetic Constants | Forward rate constant (kf) | 10⁻³ to 10⁹ M⁻¹s⁻¹ (bimolecular) | Literature, analogous systems |
| | Reverse rate constant (kr) | 10⁻⁶ to 10⁵ s⁻¹ (unimolecular) | Estimated from equilibrium |
| | Equilibrium constant (Keq) | 10⁻⁶ to 10⁹ | Direct measurement, computation |
| Species Concentrations | Enzyme/protein | nM to µM range | Proteomics, assay quantification |
| | Small molecules/metabolites | µM to mM range | Metabolomics, physiological data |
| | Drug compounds | pM to µM (dose-dependent) | Pharmacokinetic studies |
| System Conditions | Temperature | 25-37°C (biological systems) | Experimental setting |
| | pH | 6.5-7.5 (physiological) | Buffer conditions |
| | Ionic strength | 0.1-0.2 M | Buffer composition |

Experimental Protocol: From Mechanism Definition to Validated Simulation

Protocol 1: Building a Kinetic Model for Drug Target Evaluation

This protocol outlines the process of constructing a mechanistic systems model to evaluate potential drug targets, adapting approaches used in pharmaceutical research [21].

Materials and Software

- ReKinSim simulation environment [4]

- Experimental data on species concentrations over time

- Literature on reaction kinetics in the target pathway

- Parameter estimation algorithms (nonlinear minimization)

Step-by-Step Procedure

Define Therapeutic Context and Scope

- Identify the disease phenotype and clinical outcomes of interest

- Determine the biological pathway(s) linking potential drug targets to clinical effects

- Establish boundary conditions for the model (compartments, species included)

Assemble Known Mechanisms from Literature

- Conduct comprehensive literature review of reaction kinetics in the target pathway

- Extract kinetic parameters (kcat, KM, Ki values) with associated experimental conditions

- Note inconsistencies or gaps in available kinetic data

Implement Reaction Network in ReKinSim

- Define all chemical species with initial concentrations

- Implement reaction mechanisms with stoichiometry and rate laws

- Enter initial parameter estimates from literature

- Set parameter boundaries based on physiological plausibility

Calibrate with Experimental Data

- Import time-course data of species concentrations

- Configure nonlinear data-fitting module to estimate parameters

- Run parameter optimization minimizing difference between simulation and data

- Perform sensitivity analysis to identify most influential parameters

Validate with Independent Data

- Test model predictions against experimental results not used in fitting

- Evaluate goodness-of-fit metrics (R², AIC, residual analysis)

- Refine model structure if systematic discrepancies are observed

Simulate Therapeutic Interventions

- Implement drug actions (inhibition, activation, allosteric modulation)

- Run simulations across dose ranges and administration schedules

- Calculate therapeutic indices and predicted efficacy metrics

Expected Outcomes and Interpretation

The expected outcome is a validated kinetic model capable of predicting system responses to pathway perturbations. The model should provide quantitative estimates of target engagement required for efficacy and identify potential resistance mechanisms or off-pathway effects. For decision-making in drug development, models should generate testable hypotheses for subsequent experimental validation [21].
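Simulating a therapeutic intervention can start from something as simple as a competitive inhibitor acting on a Michaelis-Menten step, where the inhibitor raises the apparent Km to Km·(1 + [I]/Ki). The Python sketch below, with hypothetical constants in µM, computes fractional flux inhibition across a dose range and locates the 50% inhibitory concentration by bisection; for competitive inhibition the result should agree with the Cheng-Prusoff relation IC50 = Ki·(1 + [S]/Km). A full pathway model would embed this rate law inside the ODE network rather than evaluate it in isolation.

```python
def mm_flux(s, vmax, km, inhibitor=0.0, ki=1.0):
    """Michaelis-Menten flux with a competitive inhibitor (apparent Km = Km*(1 + I/Ki))."""
    return vmax * s / (km * (1.0 + inhibitor / ki) + s)

def flux_inhibition(i_conc, s, vmax, km, ki):
    """Fractional reduction of flux relative to the uninhibited case."""
    return 1.0 - mm_flux(s, vmax, km, i_conc, ki) / mm_flux(s, vmax, km)

def ic50_bisection(s, vmax, km, ki, lo=0.0, hi=1e6, tol=1e-12):
    """Inhibitor concentration giving 50% flux inhibition, found by bisection."""
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if flux_inhibition(mid, s, vmax, km, ki) < 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical target and inhibitor parameters (uM, arbitrary flux units).
VMAX, KM, KI, S = 1.0, 10.0, 0.05, 20.0
doses = [0.01, 0.1, 1.0, 10.0]
inhibition = [flux_inhibition(d, S, VMAX, KM, KI) for d in doses]
ic50 = ic50_bisection(S, VMAX, KM, KI)
print(f"IC50 = {ic50:.4f} uM; inhibition by dose: {[round(x, 3) for x in inhibition]}")
```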

Protocol 2: Parameter Estimation for Complex Environmental Systems

This protocol specializes in estimating kinetic parameters for environmentally relevant systems, leveraging ReKinSim's capabilities for handling complex biogeochemical reactions [4].

Materials and Software

- ReKinSim with nonlinear minimization module [20]

- Field or laboratory data on species temporal profiles

- Supporting data on environmental conditions (temperature, pH, microbial counts)

Step-by-Step Procedure

Characterize Environmental System

- Define system boundaries and compartments (aqueous, solid, gaseous)

- Identify key chemical species and their measurement units

- Document environmental conditions during data collection

Postulate Reaction Mechanisms

- Propose biogeochemical transformations based on system knowledge

- Include mass transfer limitations if appropriate [4]

- Consider isotope fractionation processes for tracer studies

Implement and Test Model Structure

- Code reactions in ReKinSim with generic rate laws

- Test model structural identifiability using synthetic data

- Simplify mechanisms if parameters are non-identifiable

Multi-Experiment Parameter Estimation

- Import data from multiple experimental conditions

- Configure shared parameters across conditions

- Estimate parameters using weighted least squares approach

Uncertainty Quantification

- Calculate parameter confidence intervals from covariance matrix

- Perform Monte Carlo analysis to propagate parameter uncertainty

- Identify correlations between parameters

Validation and Application

- Apply the model to predict system behavior under novel environmental conditions.

- Compare predictions with independent validation data.

- Use the model to elucidate controlling processes (kinetic vs. mass transfer limitations) for system management decisions [4].
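Monte Carlo propagation of parameter uncertainty can be sketched for a single fitted rate constant. Assuming, hypothetically, k = 0.3 h⁻¹ with a standard error of 0.03 h⁻¹, the Python sketch below samples k from a log-normal distribution (log-scale SD approximated as SE/k, a delta-method assumption that keeps every sample positive), maps each sample to a half-life ln 2/k, and reports the median and an empirical 95% interval. With several correlated parameters, samples would instead be drawn from a multivariate distribution built from the fitted covariance matrix.

```python
import math
import random
import statistics

def propagate_half_life(k_hat, k_se, n_samples=5000, seed=0):
    """Monte Carlo propagation of rate-constant uncertainty to the half-life."""
    rng = random.Random(seed)
    sigma = k_se / k_hat          # delta-method approximation of the log-scale SD
    mu = math.log(k_hat)          # median of the log-normal equals k_hat
    half_lives = sorted(math.log(2) / rng.lognormvariate(mu, sigma)
                        for _ in range(n_samples))
    lo = half_lives[int(0.025 * n_samples)]
    hi = half_lives[int(0.975 * n_samples) - 1]
    return statistics.median(half_lives), (lo, hi)

median_t12, (t12_lo, t12_hi) = propagate_half_life(0.3, 0.03)  # hypothetical fit
print(f"t1/2 median {median_t12:.2f} h, 95% interval [{t12_lo:.2f}, {t12_hi:.2f}] h")
```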

The ReKinSim Research Toolkit

Table: Essential Components for Reaction Kinetics Research

| Tool/Resource | Function/Purpose | Application in Research |
|---|---|---|
| ReKinSim Software Platform | Solves unlimited, arbitrary non-linear ODE systems; performs nonlinear data-fitting [4] | Core simulation environment for kinetic modeling of biogeochemical and biochemical systems |
| Systems Biology Markup Language (SBML) | Standard format for representing biochemical reaction networks | Enables model sharing, reproducibility, and integration with other computational tools [21] |
| Parameter Estimation Algorithms | Nonlinear minimization techniques for fitting models to experimental data | Determines kinetic constants from time-course concentration measurements [20] |
| Sensitivity Analysis Tools | Quantifies how model outputs depend on parameters | Identifies critical parameters requiring precise measurement; guides experimental design |
| ODE Solvers | Numerical methods for integrating differential equations | Computes species concentration profiles over time given kinetic parameters |
| Experimental Data Interfaces | Import/export functions for various data formats | Connects simulations with laboratory measurements from analytical instruments |
| Visualization Modules | Generates plots of concentrations, fluxes, and fits | Facilitates interpretation of simulation results and communication of findings |
| Model Reduction Utilities | Tools like CARM for creating reduced mechanisms from detailed ones [22] | Simplifies complex models for specific applications while preserving essential dynamics |

Workflow Visualization: From Mechanism to Simulation

Workflow for Reaction Mechanism Definition and Simulation

Integrating Kinetic Simulation into Drug Development Pathway

Applications in Pharmaceutical Research and Decision-Making

Table: Therapeutic Applications of Mechanistic Kinetic Models in Drug Development

| Therapeutic Area | Model Type/Platform | Drug Development Insight | Reference/Example |
|---|---|---|---|
| Type 2 Diabetes | PhysioLab platform (Entelos) | Simulated effects of insulin secretagogues on plasma glucose; predicted optimal dosing regimens | [21] |
| Rheumatoid Arthritis | PhysioLab platform (Entelos) | Evaluated combination therapies and identified biomarkers of response | [21] |
| Cancer | Genome-scale metabolic models | Identified metabolic vulnerabilities in tumor cells for targeted therapy | [21] |
| Cardiovascular Disease | HMG-CoA reductase inhibition model | Predicted LDL reduction from statin therapy and potential side effects | [21] |
| Central Nervous System | RHEDDOS platform (Rhenovia) | Simulated neurotransmitter dynamics for psychiatric and neurological disorders | [21] |
| Asthma | PhysioLab platform (Entelos) | Optimized corticosteroid dosing schedules and predicted patient subpopulation responses | [21] |

The application of kinetic simulation platforms like ReKinSim in pharmaceutical research enables quantitative prediction of drug effects before clinical trials, helping to prioritize the most promising candidates [21]. By creating mechanistic systems models that link molecular interventions to clinical phenotypes, researchers can simulate not only efficacy but also potential toxicity profiles and resistance mechanisms. These models become particularly valuable when they incorporate population variability in key parameters, allowing for prediction of subgroup responses and supporting personalized medicine approaches [21].

The computational efficiency and flexibility of ReKinSim specifically enables researchers to test multiple mechanistic hypotheses and rapidly refine models as new data become available [4]. This iterative process of model building, validation, and refinement creates a virtuous cycle where simulations guide experimental design, and experimental results improve model accuracy. For drug development professionals, this approach transforms kinetic simulation from an academic exercise into a practical tool for de-risking development portfolios and optimizing resource allocation [21].

Building and Applying Kinetic Models: A Step-by-Step Guide from Mechanism to Prediction

The systematic development of pharmaceutical compounds relies on a deep understanding of complex reaction networks. These networks encompass not only the desired multi-step conjugation pathway to the target molecule but also competing side reactions and processes leading to reagent deactivation [23]. Optimizing such networks is a central bottleneck in the Design-Make-Test-Analyse (DMTA) cycle of drug discovery [24]. Traditional empirical optimization is often inefficient due to the multidimensional parameter space and intricate kinetic dependencies.

This article frames the investigation of these networks within the context of the Reaction Kinetics Simulator (ReKinSim), a flexible modeling framework for solving arbitrary sets of non-linear ordinary differential equations representing kinetic systems [4] [25]. ReKinSim's core utility lies in its ability to integrate and inversely fit complex models to experimental data, allowing researchers to move beyond qualitative guesses to quantitative, predictive understanding [25]. By constructing digital twins of reaction networks, scientists can elucidate the extent to which processes are controlled by kinetics versus other factors, deconvolute simultaneous pathways, and predict optimal conditions before running resource-intensive experiments [4].

The foundational chemical concepts are critical for defining accurate models. A reaction mechanism is the sequence of molecular-level elementary steps that convert reactants to products [23]. In complex networks, intermediates created in one step are consumed in another, and the rate-determining step (the slowest elementary step) governs the overall reaction rate [23]. Side reactions and catalyst deactivation pathways operate as parallel or consecutive steps within the same network, competing for starting materials and intermediates. Visualizing these networks as graphs, where nodes represent chemical species and edges represent transformations, is pivotal for identifying critical compounds and transformations [26].
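The graph view can be made concrete with a few lines of code. The sketch below encodes a hypothetical generic network (substrate binding to an active catalyst, a productive step, a side reaction, and catalyst deactivation) as reactant/product lists, derives species-to-species edges, classifies each species by whether it is only consumed, only produced, or both, and flags branch points: species drained by more than one reaction, where selectivity is decided. The labels and species are illustrative and are not ReKinSim syntax.

```python
from collections import defaultdict

# Hypothetical generic network (labels and species are illustrative only).
reactions = {
    "R1": (["A", "Cat"], ["Int"]),       # substrate binds active catalyst
    "R2": (["Int", "B"], ["P", "Cat"]),  # productive step regenerates catalyst
    "R3": (["Int"], ["S", "Cat"]),       # side reaction from the intermediate
    "R4": (["Cat"], ["D"]),              # irreversible catalyst deactivation
}

consumed_by, produced_by = defaultdict(list), defaultdict(list)
edges = []  # (reactant, product, reaction label): species-to-species graph edges
for label, (reactants, products) in reactions.items():
    for r in reactants:
        consumed_by[r].append(label)
        for p in products:
            edges.append((r, p, label))
    for p in products:
        produced_by[p].append(label)

species = sorted(set(consumed_by) | set(produced_by))
roles = {}
for s in species:
    if s in consumed_by and s in produced_by:
        roles[s] = "intermediate/catalyst"  # formed and consumed within the network
    elif s in consumed_by:
        roles[s] = "starting material"
    else:
        roles[s] = "end product"

# Species drained by more than one reaction are branch points where selectivity is decided.
branch_points = [s for s in species if len(consumed_by.get(s, [])) > 1]
print(roles)
print("branch points:", branch_points)
```

In this toy network both the intermediate (productive vs. side step) and the catalyst (turnover vs. deactivation) are branch points, which is why side reactions and deactivation belong in the model from the start.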

Table 1: Core Concepts in Complex Reaction Network Analysis

| Concept | Definition | Role in Network Modeling |
|---|---|---|
| Elementary Step | A single molecular event (unimolecular, bimolecular) [23]. | The fundamental building block of a kinetic model; its rate law is defined by molecularity. |
| Reaction Intermediate | A transient species formed in one step and consumed in a later step [23]. | A key node in the network; its concentration profile over time is simulated. |
| Rate-Determining Step | The slowest elementary step in a multi-step sequence [23]. | Controls the overall reaction rate; its kinetic parameters are often most critical to fit. |
| Side Reaction | An undesired parallel pathway consuming starting materials or intermediates. | Reduces yield and selectivity; must be included in the model for accurate prediction. |
| Reagent/Catalyst Deactivation | A process that irreversibly converts an active reagent or catalyst into an inactive form. | A sink term in the model; can dominate long-term reaction profiles and scalability. |

Diagram 1: Core Concepts in a Complex Reaction Network

ReKinSim Tutorial: Building a Network Model

This protocol details the process of constructing and fitting a kinetic model for a complex reaction network using the ReKinSim environment [4] [25].

Protocol: Defining Reactions and Initial Conditions

Objective: To translate a hypothesized chemical mechanism into a formatted input for ReKinSim.

Materials & Software:

- ReKinSim software suite [4] [25].

- Experimental data (e.g., time-course concentration profiles for key species).

- Hypothesized reaction mechanism.

Procedure:

- Network Schematic: Draw the complete reaction network. Identify all species: reactants (A, B), desired products (P), observable intermediates (Int), side products (S), and deactivated catalyst forms (D). Diagram 1 provides a generic template.

- Elementary Step Listing: List every hypothesized elementary step. Assign a descriptive label (e.g., R1, Oxidative_Addition) and a corresponding rate constant (e.g., k1, k_OA).

- Rate Law Assignment: For each elementary step, write its differential rate law based on molecularity [23].

  - Unimolecular (A → P): rate = k * [A]

  - Bimolecular (A + B → P): rate = k * [A] * [B]

- ReKinSim Input File:

  - In the reactions section, define each step using the format k1 : A + B -> Int1.

  - In the parameters section, provide initial guesses for every k value.

  - In the initial conditions section, define starting concentrations for all species.

- Data Import: Format experimental time-course data in a column-based text file (time, concentration of species A, P, etc.) for model fitting.
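To see how reaction lines and rate laws become an ODE system, the sketch below parses lines in the k1 : A + B -> Int1 form listed above with a hypothetical helper (`parse_reaction` is illustrative, not ReKinSim's own parser; check ReKinSim's documentation for its exact input syntax), builds a mass-action right-hand side, and integrates it with SciPy's `solve_ivp`. The network, rate constants, and initial conditions are hypothetical.

```python
import numpy as np
from scipy.integrate import solve_ivp

def parse_reaction(line):
    """Parse 'k1 : A + B -> Int1' into (rate-constant name, reactants, products)."""
    k_name, equation = (part.strip() for part in line.split(":"))
    left, right = equation.split("->")
    reactants = [s.strip() for s in left.split("+") if s.strip()]
    products = [s.strip() for s in right.split("+") if s.strip()]
    return k_name, reactants, products

# Illustrative network, initial guesses, and initial conditions (hypothetical values).
reaction_lines = ["k1 : A + B -> Int1", "k2 : Int1 -> P", "k3 : Int1 -> S"]
params = {"k1": 0.5, "k2": 0.2, "k3": 0.05}
initial = {"A": 1.0, "B": 1.2, "Int1": 0.0, "P": 0.0, "S": 0.0}

reactions = [parse_reaction(line) for line in reaction_lines]
species = list(initial)
index = {s: i for i, s in enumerate(species)}

def rhs(t, c):
    """Mass-action right-hand side; a species listed twice (A + A) counts twice."""
    dcdt = np.zeros_like(c)
    for k_name, reactants, products in reactions:
        rate = params[k_name]
        for s in reactants:          # rate = k * product of reactant concentrations
            rate *= c[index[s]]
        for s in reactants:
            dcdt[index[s]] -= rate
        for s in products:
            dcdt[index[s]] += rate
    return dcdt

sol = solve_ivp(rhs, (0.0, 60.0), [initial[s] for s in species], method="LSODA",
                t_eval=np.linspace(0.0, 60.0, 61), rtol=1e-8, atol=1e-10)
final = {s: sol.y[index[s], -1] for s in species}
print({s: round(v, 4) for s, v in final.items()})
```

Because P and S both drain Int1 by first-order steps and start at zero, the simulated [P]/[S] ratio stays at k2/k3 (here 4) throughout, which makes a quick check that the rate laws were assembled correctly.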

Protocol: Parameter Estimation via Nonlinear Fitting

Objective: To find the kinetic parameters (rate constants) that best fit the experimental data.

Procedure:

- Load Model & Data: In ReKinSim, load the input file prepared in the previous protocol and the experimental data file.

- Configure Fitting: Select which parameters (k values) to fit and define plausible upper/lower bounds. Select the dependent experimental data columns to fit against.

- Execute Fit: Run the nonlinear minimization algorithm (e.g., Levenberg-Marquardt). ReKinSim will iteratively solve the ODE system and adjust parameters to minimize the sum of squared residuals between model and data [4].

- Analyze Output:

- Examine the final fitted parameter values and their estimated confidence intervals.

- Visualize the model simulation (lines) overlaid on the experimental data (points).

- Calculate goodness-of-fit metrics (e.g., R², root-mean-square error).
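The fit-and-evaluate loop can be sketched outside ReKinSim with SciPy: each residual evaluation integrates the ODEs, the optimizer adjusts the rate constants within bounds, and RMSE and R² are computed from the final residuals. Assumptions: a hypothetical A → Int → P sequence, synthetic data for [A] and [P], and SciPy's bounded trust-region method (`"trf"`) in place of Levenberg-Marquardt, because SciPy's `"lm"` method does not accept bounds. R² here uses each species' own mean in the total sum of squares.

```python
import numpy as np
from scipy.integrate import solve_ivp
from scipy.optimize import least_squares

def simulate(k, t_obs, c0=(1.0, 0.0, 0.0)):
    """Integrate the hypothetical sequence A -> Int -> P; return [A] and [P] at t_obs."""
    k1, k2 = k
    def rhs(t, c):
        a, i, p = c
        return [-k1 * a, k1 * a - k2 * i, k2 * i]
    sol = solve_ivp(rhs, (0.0, t_obs[-1]), c0, t_eval=t_obs, rtol=1e-9, atol=1e-12)
    return np.vstack([sol.y[0], sol.y[2]])  # the two "measured" columns

rng = np.random.default_rng(2)
t_obs = np.linspace(0.0, 30.0, 16)
data = simulate((0.25, 0.10), t_obs) + rng.normal(0.0, 0.01, (2, t_obs.size))  # synthetic

def residuals(k):
    return (simulate(k, t_obs) - data).ravel()

# Bounded trust-region fit; diff_step keeps finite-difference steps above solver noise.
fit = least_squares(residuals, x0=[0.05, 0.05], bounds=([1e-4, 1e-4], [10.0, 10.0]),
                    method="trf", diff_step=1e-5)
res = fit.fun
rmse = np.sqrt(np.mean(res ** 2))
ss_tot = np.sum((data - data.mean(axis=1, keepdims=True)) ** 2)  # per-species means
r2 = 1.0 - np.sum(res ** 2) / ss_tot
print(f"k1 = {fit.x[0]:.3f}, k2 = {fit.x[1]:.3f}, RMSE = {rmse:.4f}, R2 = {r2:.4f}")
```

Plotting `simulate(fit.x, t_obs)` as lines over `data` as points gives the overlay described in the second bullet.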

Troubleshooting:

- Poor Fit: The mechanism may be incorrect or incomplete. Re-evaluate the need for additional side or deactivation steps.

- Parameter Covariance: Strong covariance (correlation) between parameters suggests the data are insufficient to determine them uniquely; design new experiments that provide orthogonal information.

- Sensitivity Analysis: Use ReKinSim to perform a local sensitivity analysis to identify which parameters most strongly influence the concentration of the desired product.
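A local sensitivity analysis perturbs one rate constant at a time around its nominal value. The sketch below estimates normalized coefficients, d ln[P] / d ln k, by central finite differences on a hypothetical network (A + B → Int, Int → P, Int → S as a side reaction), using SciPy as a stand-in for ReKinSim's built-in tools. Network, nominal values, and evaluation time are assumptions.

```python
import numpy as np
from scipy.integrate import solve_ivp

# Hypothetical network: A + B -> Int (k1), Int -> P (k2), Int -> S (k3, side reaction).
base = {"k1": 0.5, "k2": 0.2, "k3": 0.05}
c0 = [1.0, 1.2, 0.0, 0.0, 0.0]  # [A], [B], [Int], [P], [S]
t_end = 20.0

def product_at_end(k):
    """Simulate the network and return [P] at t_end."""
    def rhs(t, c):
        a, b, i, p, s = c
        r1, r2, r3 = k["k1"] * a * b, k["k2"] * i, k["k3"] * i
        return [-r1, -r1, r1 - r2 - r3, r2, r3]
    sol = solve_ivp(rhs, (0.0, t_end), c0, rtol=1e-10, atol=1e-12)
    return sol.y[3, -1]

def normalized_sensitivities(k, rel_step=1e-3):
    """Central-difference estimate of d ln[P] / d ln k_j for each rate constant."""
    p0 = product_at_end(k)
    out = {}
    for name, value in k.items():
        up, down = dict(k), dict(k)
        up[name] = value * (1 + rel_step)
        down[name] = value * (1 - rel_step)
        dp_dk = (product_at_end(up) - product_at_end(down)) / (2 * value * rel_step)
        out[name] = dp_dk * value / p0
    return out

sens = normalized_sensitivities(base)
for name, s in sorted(sens.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {s:+.3f}")
```

Normalizing by k/[P] makes the coefficients dimensionless, so constants of different orders and magnitudes can be ranked directly. The signs are also informative: the side-reaction constant k3 lowers [P], while k1 and k2 raise it.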

Application Note: A Case Study in Aerobic Oxidation

Scenario: Optimizing a copper/TEMPO-catalyzed aerobic oxidation of alcohols to aldehydes, a network prone to over-oxidation of the aldehyde to the carboxylic acid and to catalyst deactivation [27].

Experimental Protocol for Kinetic Data Generation

Objective: To generate high-quality time-course data for fitting a network model of the Cu/TEMPO oxidation.

Materials:

- Substrate (e.g., benzyl alcohol), Cu(I) catalyst (e.g., CuBr), TEMPO, solvent (MeCN), base (N-methylimidazole).

- Automated sampling system or ReactIR/Raman probe for in situ monitoring [27].

- Analytical equipment (GC, HPLC, UPLC).

Procedure:

- Reaction Setup: In a controlled environment (temperature, O₂ atmosphere), initiate the reaction by adding the substrate to a mixture of catalyst, TEMPO, and base in solvent [27].

- High-Frequency Sampling: At defined time intervals (e.g., 0, 1, 2, 5, 10, 20, 30, 60 min), withdraw aliquots or collect in situ spectral data.

- Quenching & Analysis: Immediately quench aliquots to stop the reaction. Analyze via GC/HPLC to quantify concentrations of alcohol substrate, aldehyde product, and carboxylic acid side product.

- Catalyst Stability Test: Run a separate, longer-duration experiment and monitor the aldehyde formation rate over time. A decay in rate that substrate depletion alone cannot explain indicates catalyst deactivation.

Table 2: Example Kinetic Data from Aerobic Oxidation Screening [27]

| Time (min) | [Alcohol] (mM) | [Aldehyde] (mM) | [Acid] (mM) | Notes |
|---|---|---|---|---|
| 0 | 100.0 | 0.0 | 0.0 | Reaction start. |
| 5 | 85.2 | 12.1 | 0.5 | Fast initial conversion. |
| 15 | 52.3 | 42.5 | 2.1 | Rapid aldehyde accumulation. |
| 30 | 30.1 | 55.2 | 11.5 | Highest aldehyde level observed; acid formation accelerates. |
| 60 | 15.5 | 50.8 | 30.4 | Significant over-oxidation; aldehyde concentration decreases. |
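Before fitting, it is worth running two quick consistency checks on the data: mass balance closure and average rates per sampling interval. The plain-Python sketch below uses the Table 2 values; the finite-difference rates are interval averages, not instantaneous rates.

```python
# Values transcribed from Table 2 (time in min, concentrations in mM).
times = [0, 5, 15, 30, 60]
alcohol = [100.0, 85.2, 52.3, 30.1, 15.5]
aldehyde = [0.0, 12.1, 42.5, 55.2, 50.8]
acid = [0.0, 0.5, 2.1, 11.5, 30.4]

# Mass balance: alcohol + aldehyde + acid as a percentage of the initial alcohol.
closure = [100.0 * (a + b + c) / alcohol[0] for a, b, c in zip(alcohol, aldehyde, acid)]

def interval_rates(conc):
    """Average net rate (mM/min) over each sampling interval, by finite differences."""
    return [(c2 - c1) / (t2 - t1)
            for (t1, c1), (t2, c2) in zip(zip(times, conc), zip(times[1:], conc[1:]))]

alcohol_use = [-r for r in interval_rates(alcohol)]
aldehyde_net = interval_rates(aldehyde)
acid_net = interval_rates(acid)
print("closure (%):", [round(x, 1) for x in closure])
print("alcohol consumption (mM/min):", [round(x, 2) for x in alcohol_use])
print("net aldehyde rate (mM/min):", [round(x, 2) for x in aldehyde_net])
print("acid formation rate (mM/min):", [round(x, 2) for x in acid_net])
```

For these data, closure drops from 100% to about 96.7%, so roughly 3% of the material is unaccounted for (analytical error or an unmeasured species). The net aldehyde rate turns negative in the final interval, which is where over-oxidation overtakes formation.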

Constructing & Fitting the Network Model in ReKinSim

Hypothesized Network:

- Main Path: Alcohol + ActiveCat → Aldehyde + RegeneratedCat (k_main)

- Side Path: Aldehyde + ActiveCat → Acid + RegeneratedCat (k_side)

- Deactivation: ActiveCat → DeactivatedCat (k_deact)
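Writing out the differential equations makes the model that ReKinSim integrates explicit. The system below assumes mass-action kinetics and that the regenerated catalyst re-enters the cycle as active catalyst, so only the deactivation step removes it; both are assumptions rather than statements from the source. Cat denotes the active catalyst and D the deactivated form.

```latex
\begin{aligned}
\frac{d[\mathrm{Alc}]}{dt}  &= -k_{\mathrm{main}}\,[\mathrm{Alc}]\,[\mathrm{Cat}] \\
\frac{d[\mathrm{Ald}]}{dt}  &= k_{\mathrm{main}}\,[\mathrm{Alc}]\,[\mathrm{Cat}] - k_{\mathrm{side}}\,[\mathrm{Ald}]\,[\mathrm{Cat}] \\
\frac{d[\mathrm{Acid}]}{dt} &= k_{\mathrm{side}}\,[\mathrm{Ald}]\,[\mathrm{Cat}] \\
\frac{d[\mathrm{Cat}]}{dt}  &= -k_{\mathrm{deact}}\,[\mathrm{Cat}] \quad\Rightarrow\quad [\mathrm{Cat}](t) = [\mathrm{Cat}]_0\, e^{-k_{\mathrm{deact}} t} \\
\frac{d[\mathrm{D}]}{dt}    &= k_{\mathrm{deact}}\,[\mathrm{Cat}]
\end{aligned}
```

In this form k_main and k_side are second-order constants (mM⁻¹ min⁻¹). Defining k′_main = k_main[Cat]₀ and k′_side = k_side[Cat]₀ gives pseudo-first-order constants in min⁻¹, with catalyst decay entering as the factor e^(−k_deact·t). The sum [Alc] + [Ald] + [Acid] is conserved by construction.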

ReKinSim Analysis:

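- Input the three reactions and initial guesses for k_main, k_side, and k_deact.

- Load the time-course data from Table 2.

- Fit all three parameters simultaneously. The output might yield: k_main = 0.15 min⁻¹, k_side = 0.03 min⁻¹, k_deact = 0.01 min⁻¹. Because the main and side steps are written as bimolecular, units of min⁻¹ imply that the active-catalyst concentration has been absorbed into k_main and k_side as pseudo-first-order constants; otherwise these two constants carry units of mM⁻¹ min⁻¹.

- Insight: The model quantitatively shows that, while the main reaction is fastest, the slow but persistent side and deactivation pathways significantly erode yield beyond 30 minutes. Optimization should therefore focus on suppressing k_side (e.g., by modifying the ligand) and k_deact (e.g., by adjusting O₂ pressure).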
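As a stand-in for the ReKinSim fit, the sketch below fits the three-step network to the Table 2 values with SciPy. It assumes the following, none of which comes from the source: the regenerated catalyst is active catalyst; the initial catalyst concentration is lumped into pseudo-first-order constants (min⁻¹); catalyst activity decays as exp(−k_deact·t); and all points carry equal weight. This model conserves alcohol + aldehyde + acid, while the Table 2 totals fall to 96.7 mM by 60 min, so some residual misfit is expected. The fitted constants depend on these choices and should be reported together with confidence intervals.

```python
import numpy as np
from scipy.integrate import solve_ivp
from scipy.optimize import least_squares

# Table 2 data (min, mM); rows: alcohol, aldehyde, acid.
t_data = np.array([0.0, 5.0, 15.0, 30.0, 60.0])
y_data = np.array([
    [100.0, 85.2, 52.3, 30.1, 15.5],
    [0.0, 12.1, 42.5, 55.2, 50.8],
    [0.0, 0.5, 2.1, 11.5, 30.4],
])

def simulate(params):
    """Pseudo-first-order network (assumed); catalyst activity decays as exp(-k_deact * t)."""
    k_main, k_side, k_deact = params
    def rhs(t, c):
        alc, ald, acid = c
        activity = np.exp(-k_deact * t)   # fraction of catalyst still active
        r_main = k_main * activity * alc  # alcohol -> aldehyde
        r_side = k_side * activity * ald  # aldehyde -> acid (over-oxidation)
        return [-r_main, r_main - r_side, r_side]
    sol = solve_ivp(rhs, (0.0, t_data[-1]), y_data[:, 0], t_eval=t_data,
                    method="LSODA", rtol=1e-8, atol=1e-8)
    return sol.y

def residuals(params):
    return (simulate(params) - y_data).ravel()  # unweighted residuals in mM

fit = least_squares(residuals, x0=[0.1, 0.02, 0.01], bounds=([0.0] * 3, [5.0] * 3),
                    diff_step=1e-5)
k_main, k_side, k_deact = fit.x
pred = simulate(fit.x)
print(f"k_main' = {k_main:.3f} /min, k_side' = {k_side:.3f} /min, k_deact = {k_deact:.4f} /min")
print("largest absolute residual (mM):", np.abs(fit.fun).max().round(1))
```

If the residuals show a systematic pattern (for example, the model converting alcohol too quickly at 5 min), that points back to the troubleshooting step above: the mechanism may need an additional step rather than different constants.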